When a placement group starts backfilling, it asks the OSD to queue it for recovery. It is eventually processed and the OSD asks it to start the recovery operations. Since it is backfilling (the original reason why the recovery operation was queued), it attempts to reserve a backfill channel ( step 1, step 2 ). When the reservation is successful, it goes back to the initial backfilling state, which re-queues the PG for recovery. When processed, the same function runs but this time the backfill channel is reserved and it starts the backfilling operations. It scans the other OSD to retrieve a list of objects and their associated versions and pushes missing objects to the replicas. Each object pushed is locked for read and ( after trying some snapshot based heuristics ) a push is registered and a CEPH_OSD_OP_PUSH operation is sent to the peer OSD. The receiving replica handles the message by submitting the payload to a transaction through which the OSD writes it to the file.

Ceph RBD live resize with krbd

The Ubuntu precise 3.8 linux kernel is rebuilt with Laurent Barbe’s patch:

    apt-get source linux-image-3.8.0-27-generic
    cd linux-lts-raring-3.8.0/
    curl https://git.kernel.org/cgit/linux/kernel/git/stable/linux-stable.git/patch/?id=d98df63ea7e87d5df4dce0cece0210e2a777ac00 | patch -p1
    dpkg-buildpackage -uc -us

and installed with

    dpkg -i ../linux-image-3.8.0-27-generic_3.8.0-27.40~precise3_amd64.deb

Live resize of a mounted ext4 file system can then be done as follows:

    # rbd create --size 10000 test
    # rbd map test
    # mkfs.ext4 -q /dev/rbd1
    # mount /dev/rbd1 /mnt
    # df -h /mnt
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/rbd1       9.5G   22M  9.0G   1% /mnt
    # blockdev --getsize64 /dev/rbd1
    10485760000
    # rbd resize --size 20000 test
    Resizing image: 100% complete...done.
    # blockdev --getsize64 /dev/rbd1
    20971520000
    # resize2fs /dev/rbd1
    resize2fs 1.42 (29-Nov-2011)
    Filesystem at /dev/rbd1 is mounted on /mnt; on-line resizing required
    old_desc_blocks = 1, new_desc_blocks = 2
    The filesystem on /dev/rbd1 is now 5120000 blocks long.
    # df -h /mnt
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/rbd1        20G   27M   19G   1% /mnt
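The numbers in this session can be cross-checked with a little arithmetic. The sketch below assumes that the rbd --size argument counts units of 1048576 bytes (which matches the blockdev output) and that the ext4 file system uses 4096 byte blocks (the block size is not shown in the session, so it is an assumption). The constant and function names are made up for the illustration.

```cpp
#include <cstdint>

// Assumption: rbd --size is expressed in units of 1048576 bytes, which is
// consistent with the blockdev --getsize64 output in the session.
constexpr std::uint64_t kBytesPerSizeUnit = 1024ULL * 1024ULL;

// Assumption: the ext4 file system was created with 4096 byte blocks.
constexpr std::uint64_t kExt4BlockSize = 4096;

// Size in bytes of an image created or resized with --size rbd_size.
constexpr std::uint64_t image_bytes(std::uint64_t rbd_size) {
  return rbd_size * kBytesPerSizeUnit;
}

// Number of file system blocks that fit in the given number of bytes.
constexpr std::uint64_t ext4_blocks(std::uint64_t bytes) {
  return bytes / kExt4BlockSize;
}

// Compile time checks against the values printed in the session.
static_assert(image_bytes(10000) == 10485760000ULL, "size before resize");
static_assert(image_bytes(20000) == 20971520000ULL, "size after resize");
static_assert(ext4_blocks(image_bytes(20000)) == 5120000ULL,
              "block count reported by resize2fs");
```

If both assumptions hold, the block count printed by resize2fs is simply the new image size divided by the block size.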

Ceph release name : Kraken

A few steps away from Galerie Deborah Zafman, after turning onto rue des Gravilliers in Paris, France, I looked up and saw a painting of a Kraken and thought it could be the name of a Ceph release in 2015, six release cycles after Emperor.

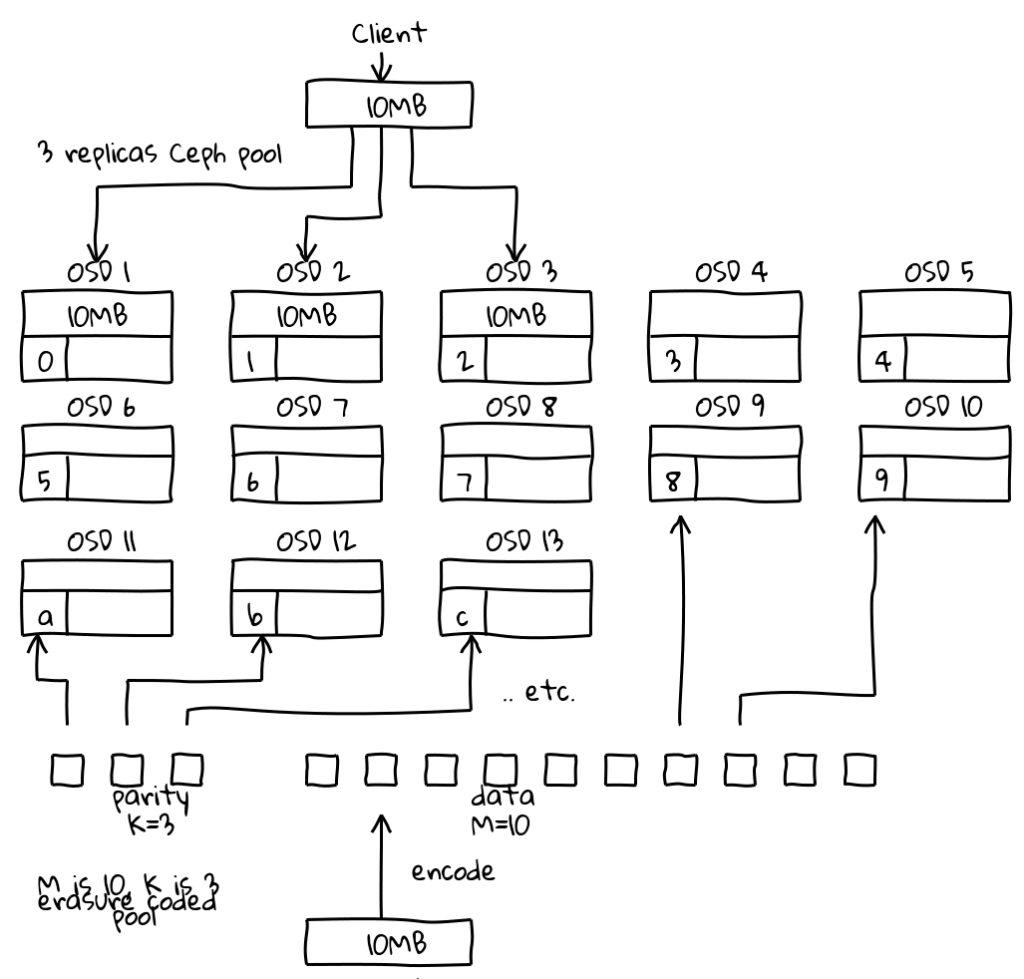

Ceph replication vs erasure coding

Ceph implements resilience through replication. An erasure coded backend is being worked on. The following diagram compares the two and is hopefully somewhat self explanatory. It was created in the context of the Ceph BOF at OSCON and is available in ASCII as well as images generated from Ditaa and Shaky. A two minute presentation is derived from it to explain how to read the drawing.


Threads and unit tests in Ceph

To assert that a tested method calls Cond::Wait it is run in a separate Thread. The calling googletest function uses the same Mutex to assert that the child thread is waiting as expected.

For instance, the SharedPtrRegistry::lookup method will Cond::Wait if an entry is being deleted by another thread. It sets the in_method data member to LOOKUP immediately after acquiring the lock and sets it to UNDEFINED before returning. To avoid blocking the main thread, it is called from a child thread created from the test in the main thread. The test relies on the wait_for method to acquire the same lock and check that in_method has the expected value. It loops and waits increasingly longer (up to a maximum) until the condition is met.
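The polling part of such a test can be sketched as follows. This is not the Ceph test code: it uses the standard C++ library instead of the Ceph Mutex and Thread classes, and the signature of wait_for, the locked_equals helper and the delay values are all assumptions made for the illustration.

```cpp
#include <chrono>
#include <functional>
#include <mutex>
#include <thread>

// Hypothetical helper: compare a value with the expected one while holding
// the lock that protects it, as the test must do before reading in_method.
template <typename T>
bool locked_equals(std::mutex &lock, const T &value, const T &expected) {
  std::lock_guard<std::mutex> guard(lock);
  return value == expected;
}

// Hypothetical helper: poll the condition until it holds, sleeping twice as
// long after each failed check. Give up and return false once the next delay
// would be longer than max_delay.
bool wait_for(const std::function<bool()> &condition,
              std::chrono::microseconds max_delay =
                  std::chrono::microseconds(2000)) {
  std::chrono::microseconds delay(1);
  while (!condition()) {
    if (delay > max_delay)
      return false;
    std::this_thread::sleep_for(delay);
    delay *= 2;
  }
  return true;
}
```

A test would start the child thread, then call something like wait_for([&] { return locked_equals(lock, in_method, LOOKUP); }) and fail if it returns false. Doubling the delay keeps the test fast when the child thread is quick and bounded when it never reaches the expected state.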

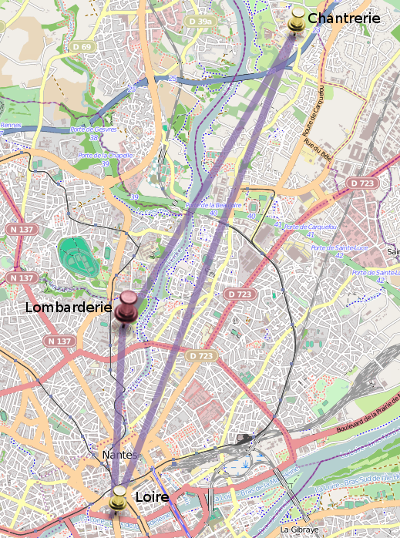

Ceph early adopter : Université de Nantes

The Université de Nantes started using Ceph for backups in early 2012, before Bobtail was released or Inktank founded. The IRTS department, under the lead of Yann Dupont, created a twelve-node Ceph cluster to store backups. It contains the data generated by 35,000 students and 4,500 employees, totaling 100 million inodes and 25TB of data (out of 40TB). The hardware is spread across three geographical locations ( Loire, Chantrerie and Lombarderie ) and Ceph is configured to keep working transparently even when one of them is down. The backup pool has two replicas and the crushmap states that each must be stored in a different geographical location. For instance, when Lombarderie is unreachable, which happened this week because of a planned power outage combined with an unplanned UPS failure, Ceph keeps serving the objects from the replicas located in Loire and Chantrerie.


Anatomy of ObjectContext, the Ceph in core representation of an object

An ObjectContext is created when a ReplicatedPG applies operations on an object.

read/write mutual exclusion

The C_OSD_OndiskWriteUnlock callback is registered to be called after a transaction (read in this case) completes. It will signal the writes and reads waiting if all writes are done.

Before adding an entry that will write an object to a transaction ( for instance when ReplicatedPG::mark_object_lost sets the object_info_t::lost data member to true ) the ObjectContext::ondisk_write_lock method is called and will Cond::Wait until all reads complete. The caller of mark_object_lost adds the ObjectContext to a list that is used to build the C_OSD_OndiskWriteUnlockList callback that will be called when the transaction completes and call ondisk_write_unlock on each object.

The logic is similar when reverting an object to a prior version, applying a replica operation, or in ReplicatedPG::handle_pull_response.

ondisk_read_lock is called by ReplicatedPG::do_op and unlocked after calling prepare_transaction. ReplicatedPG::recover_object_replicas and ReplicatedPG::push_backfill_object do the same.

ondisk_write_lock will wait until there are no more read operations waiting ( readers_waiting ) or reads being processed ( readers ). ondisk_read_lock will wait for ongoing writes to finish ( unstable_writes ) but will take the lock even if writers are waiting ( writers_waiting ), therefore taking precedence over writes. There can be any number of simultaneous writes ( unstable_writes > 1 ) as long as there are no ongoing reads ( readers < 1 ). There can be any number of simultaneous readers ( readers > 1 ) as long as there are no ongoing writes ( unstable_writes < 1 ). ondisk_read_unlock will signal waiting writers if there are no more readers ( !readers ). ondisk_write_unlock will signal waiting readers if there are no more writes ( !unstable_writes ).
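The rules above can be condensed into a minimal sketch. It keeps the counter and method names used in the text but relies on std::mutex and std::condition_variable rather than the Ceph Mutex and Cond classes, and the class name OndiskLockSketch is made up. It is an illustration of the described behavior, not a copy of the ObjectContext code.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>

// Sketch only: counters are public so that they can be inspected.
class OndiskLockSketch {
public:
  int readers = 0;          // reads being processed
  int readers_waiting = 0;  // reads waiting for writes to finish
  int unstable_writes = 0;  // writes being processed
  int writers_waiting = 0;  // writes waiting for reads to finish

  void ondisk_write_lock() {
    std::unique_lock<std::mutex> l(lock);
    ++writers_waiting;
    // A write yields to reads that are in progress or merely waiting.
    cond.wait(l, [this] { return readers_waiting == 0 && readers == 0; });
    --writers_waiting;
    ++unstable_writes;
  }

  void ondisk_write_unlock() {
    std::lock_guard<std::mutex> l(lock);
    assert(unstable_writes > 0);
    --unstable_writes;
    if (unstable_writes == 0 && readers_waiting > 0)
      cond.notify_all();
  }

  void ondisk_read_lock() {
    std::unique_lock<std::mutex> l(lock);
    ++readers_waiting;
    // A read only waits for ongoing writes, not for waiting writers.
    cond.wait(l, [this] { return unstable_writes == 0; });
    --readers_waiting;
    ++readers;
  }

  void ondisk_read_unlock() {
    std::lock_guard<std::mutex> l(lock);
    assert(readers > 0);
    --readers;
    if (readers == 0 && writers_waiting > 0)
      cond.notify_all();
  }

private:
  std::mutex lock;
  std::condition_variable cond;
};
```

The asymmetry between the two wait conditions is what gives reads precedence: a waiting read blocks new writes, but a waiting write does not block new reads.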

blocking and blocked_by

ObjectContext has blocking and blocked_by data members. When an operation on an object is made of multiple operations, all of them must be about an object by the same name but can be about different versions. If a variation of the object is degraded, the object is blocked by the degraded object and is added to the list of objects blocked by the degraded object.

Before peering, ReplicatedPG::on_change is called and, for each object in the waiting_for_degraded_object list, it loops over the objects it is blocking, removes each of them from the list and unblocks it. The same happens whenever an object has been pushed.

How does AccessMode control read/write processing in Ceph ?

An operation ( read, write etc. ) may be added to the mode.waiting queue if the ReplicatedPG::AccessMode does not allow it yet. For instance, if an operation may_write but AccessMode::try_write finds the current state to be RMW_FLUSHING, it will return false and the operation will be added to mode.waiting. However, if it finds that it is IDLE, it will change to RMW and return true.

When handling a write message, eval_repop is called at the end to figure out if the operation must be sent to other OSDs in the acting set. If mode.is_rmw_mode(), it will call apply_repop(repop); which will create a transaction to write to the ObjectStore and give it a C_OSD_OpApplied callback which will call ReplicatedPG::op_applied when it completes. ReplicatedPG::op_applied will then call mode.write_applied() and if there is no pending write operation it will set wake = true.

put_object_context is called immediately after mode.write_applied() to release the ObjectContext. It also checks mode.wake and requeues the operations that were previously added to the mode.waiting list because mode.state did not allow them to be processed. The put_object_context method is called in many places in ReplicatedPG and each of them is an opportunity to requeue the operations found in mode.waiting.
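The interplay between try_write, mode.waiting and mode.wake can be illustrated with a deliberately simplified model. Only the states named above are represented, operations are plain strings, and the num_wr counter as well as the submit_write and requeue_if_woken helpers are hypothetical names introduced for the sketch. It does not reproduce the full state machine found in ReplicatedPG.

```cpp
#include <cassert>
#include <deque>
#include <string>

// Simplified, hypothetical model of the access mode.
struct AccessModeSketch {
  enum State { IDLE, RMW, RMW_FLUSHING };
  State state = IDLE;
  int num_wr = 0;     // writes started but not yet applied (assumed counter)
  bool wake = false;  // set when waiting operations should be requeued
  std::deque<std::string> waiting;

  // Refuse writes while flushing, otherwise switch to (or stay in) RMW.
  bool try_write() {
    if (state == RMW_FLUSHING)
      return false;
    state = RMW;
    return true;
  }

  void write_start() { ++num_wr; }

  // Called when a write has been applied to the object store.
  void write_applied() {
    assert(num_wr > 0);
    if (--num_wr == 0)
      wake = true;
  }
};

// Start the write if the mode allows it, otherwise park it in mode.waiting.
bool submit_write(AccessModeSketch &mode, const std::string &op) {
  if (!mode.try_write()) {
    mode.waiting.push_back(op);
    return false;
  }
  mode.write_start();
  return true;
}

// What put_object_context is described as doing: hand back the parked
// operations when wake is set so that the caller can requeue them.
std::deque<std::string> requeue_if_woken(AccessModeSketch &mode) {
  std::deque<std::string> requeued;
  if (mode.wake) {
    requeued.swap(mode.waiting);
    mode.wake = false;
  }
  return requeued;
}
```

In this model a parked operation only gets another chance once the last pending write has been applied, which is why every call to put_object_context is a potential requeue point.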

How does a Ceph OSD handle a write message ? (up to Emperor)

When an operation handled by an OSD is queued to a PG, it is added to the op_wq work queue ( or to the waiting_for_map list if the queue_op method of PG finds that it must wait for an OSDMap ) and will be dequeued asynchronously. The dequeued operation is processed by the PG::do_request method which ends up calling the do_osd_ops method because it is a CEPH_MSG_OSD_OP. The do_osd_ops method is called by prepare_transaction, which is itself called from the PG::do_op pure virtual method, implemented in ReplicatedPG::do_op and called from the aforementioned PG::do_request method.

When done, ReplicatedPG::do_op calls ReplicatedPG::issue_repop which will send the operation to the replicas. Once all replicas have acked the operation, the ReplicatedPG::eval_repop method will notify the client.
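The acknowledgement bookkeeping can be pictured with a small sketch. The function names mirror the methods mentioned above, but their signatures, the RepOpSketch structure and the handle_ack helper are assumptions made for the illustration, and replicas are reduced to integer ids.

```cpp
#include <functional>
#include <set>

// Hypothetical, minimal stand-in for a replicated operation.
struct RepOpSketch {
  std::set<int> waiting_for_ack;  // replicas that have not acked yet
  bool client_notified = false;
};

// Send the operation to every replica and remember who must ack it.
RepOpSketch issue_repop(const std::set<int> &replicas,
                        const std::function<void(int)> &send) {
  RepOpSketch repop;
  for (int osd : replicas) {
    send(osd);
    repop.waiting_for_ack.insert(osd);
  }
  return repop;
}

// Notify the client (here: flip a flag) once no ack is outstanding.
bool eval_repop(RepOpSketch &repop) {
  if (!repop.client_notified && repop.waiting_for_ack.empty())
    repop.client_notified = true;
  return repop.client_notified;
}

// Record the ack of one replica and re-evaluate the operation.
void handle_ack(RepOpSketch &repop, int osd) {
  repop.waiting_for_ack.erase(osd);
  eval_repop(repop);
}
```

With two replicas, the client is only notified after the second call to handle_ack.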

How do Ceph placement groups use Watch ?

ReplicatedPG::prepare_transaction ( which is called when handling a message ) will call ReplicatedPG::do_osd_op_effects if the message is handled successfully.

do_osd_op_effects iterates over ReplicatedPG::watch_connects which is set with a watcher including a cookie, a timeout ( hard coded to 30 seconds ) and an IP address built with the connection from which the CEPH_OSD_OP_WATCH message was received. The watcher is also added to the object_info_t if not already present.

A Watch is added to the ObjectContext ( i.e. the in core representation of the object ). The Watch::connect method is called and retrieves an OSD::Session from the Connection on which the message was received.

The notifications found in in_progress_notifies are handled by send_notify which creates a MWatchNotify message and asks the OSD to send it using the connection referenced by the Watch ( i.e. the conn data member ).

When an object context is created ReplicatedPG::populate_obc_watchers iterates over the watch_info_t that are found in the object_info_t that was loaded from disk and it rebuilds a Watch from them and disconnects it so that it gets a chance to re-establish the connection with the client. When all Watch have been rebuilt, ReplicatedPG::check_blacklisted_obc_watchers is called to loop over the watchers and simulate a timeout if they are associated with a blacklisted entity ( according to the OSD map ).
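The rebuild and blacklist check can be sketched as two passes over simple structures. The types and function names below are hypothetical stand-ins for watch_info_t, Watch and the two ReplicatedPG methods, and the blacklist is reduced to a set of address strings instead of being read from an OSD map.

```cpp
#include <set>
#include <string>
#include <vector>

// Hypothetical stand-in for the watch information persisted with the object.
struct WatchInfoSketch {
  unsigned long long cookie;
  unsigned timeout_seconds;
  std::string addr;
};

// Hypothetical stand-in for the in core Watch.
struct WatchSketch {
  WatchInfoSketch info;
  bool connected;
  bool timed_out;
};

// Rebuild one watch per persisted entry. Each starts disconnected so that
// the client gets a chance to re-establish the connection.
std::vector<WatchSketch> populate_watchers(
    const std::vector<WatchInfoSketch> &persisted) {
  std::vector<WatchSketch> watchers;
  for (const WatchInfoSketch &info : persisted)
    watchers.push_back(WatchSketch{info, false, false});
  return watchers;
}

// Simulate a timeout for every watcher whose address is blacklisted.
void check_blacklisted(std::vector<WatchSketch> &watchers,
                       const std::set<std::string> &blacklist) {
  for (WatchSketch &watch : watchers)
    if (blacklist.count(watch.info.addr) > 0)
      watch.timed_out = true;
}
```

Running the blacklist pass only after all watches are rebuilt keeps the two concerns separate: the first pass restores state from disk, the second applies what the current map says about the clients.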

The watches of all ObjectContext are also checked for blacklisted entities when PG::handle_activate_map is called.