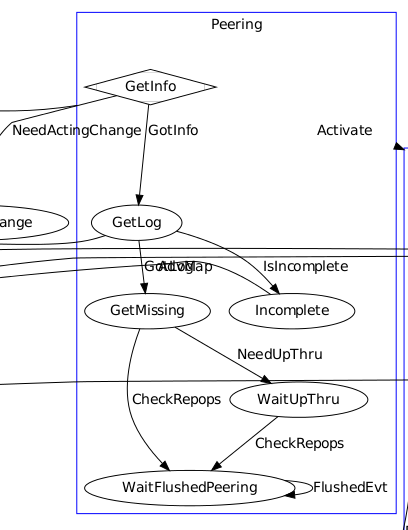

The Peering state machine (based on the Boost Statechart Library) is in charge of making sure the OSDs supporting the placement groups are available, as described in the high level description of the peering process. It then moves to the Active state machine, which brings the placement group into a consistent state and handles normal operations.

The placement groups rely on OSDs to exchange information. For instance, when the primary OSD receives a placement group creation message, it forwards the information to the placement group, which translates it into events for the newly created state machine.

The OSDs also provide a work queue dedicated to peering where events are inserted to be processed asynchronously.
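As a rough illustration of the queueing idea, here is a toy sketch in Python. It is not Ceph code: the class names, the event names (`Created`, `AllOSDsAvailable`) and the two-state machine are all hypothetical, and the real implementation is a C++ Boost Statechart machine with many more states.

```python
from collections import deque


class ToyPlacementGroup:
    """Hypothetical stand-in for a placement group with a two-state machine."""

    def __init__(self, pgid):
        self.pgid = pgid
        self.state = "Peering"
        self.history = []  # (state when the event arrived, event name)

    def handle(self, event):
        self.history.append((self.state, event))
        # Hypothetical transition: once every OSD is reported available,
        # leave Peering and enter Active.
        if self.state == "Peering" and event == "AllOSDsAvailable":
            self.state = "Active"


class ToyPeeringQueue:
    """Hypothetical work queue: events are inserted now and processed later."""

    def __init__(self):
        self._queue = deque()

    def enqueue(self, pg, event):
        self._queue.append((pg, event))

    def process(self):
        processed = 0
        while self._queue:
            pg, event = self._queue.popleft()
            pg.handle(event)
            processed += 1
        return processed


pg = ToyPlacementGroup("1.0")
queue = ToyPeeringQueue()
queue.enqueue(pg, "Created")
queue.enqueue(pg, "AllOSDsAvailable")
state_before = pg.state      # nothing has been processed yet
processed = queue.process()
state_after = pg.state
```

The point of the sketch is only that inserting an event and acting on it are decoupled: the placement group stays in its current state until the queue is drained.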

Continue reading “Ceph Placement Groups peering”

GLOCK is my favorite Cloud stack

GLOCK stands for GNU, Linux, OpenStack, Ceph and KVM. GNU is the free Operating System that guarantees my freedom and independence, Linux is versatile enough to accommodate the heterogeneous hardware I’m using, OpenStack allows me to cooperatively run an IaaS with my friends and the non-profits I volunteer for, Ceph gives eternal life to my data and KVM will be maintained for as long as I live.

Ceph disk requirements will be lower : a new backend is coming

When evaluating Ceph to run a new storage service, the replication factor only matters once the hardware provisioned from the start is almost full, which may happen months after the first user starts to store data. In the meantime, a new storage backend (erasure encoded) that reduces hardware requirements by up to 50% is being developed in Ceph.

Saving disk space from the beginning does not matter: it is not used anyway. The question is when the erasure encoded backend will be ready to double the usable value of the storage already in place.
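The arithmetic behind the 50% figure can be sketched as follows. The parameters are assumptions chosen for illustration, not values from the post: three replicas for the replicated pool, and an erasure code with k = 4 data chunks and m = 2 coding chunks.

```python
def raw_per_usable_replicated(replicas):
    """Raw bytes stored for each usable byte when keeping full copies."""
    return float(replicas)


def raw_per_usable_erasure(k, m):
    """Raw bytes stored for each usable byte with k data and m coding chunks."""
    return (k + m) / k


def usable_capacity(raw_tb, overhead):
    """Usable capacity obtained from a given amount of raw disk."""
    return raw_tb / overhead


# Assumed, illustrative parameters.
replicated = raw_per_usable_replicated(3)     # 3.0
erasure = raw_per_usable_erasure(k=4, m=2)    # 1.5
saving = 1 - erasure / replicated             # 0.5, i.e. 50% less raw disk

usable_replicated = usable_capacity(300, replicated)  # 100.0 TB
usable_erasure = usable_capacity(300, erasure)        # 200.0 TB
```

With these assumed parameters, the same 300 TB of raw disk holds twice as much data, which is the sense in which the value of the storage already in place doubles.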


Merging Ceph placement group logs

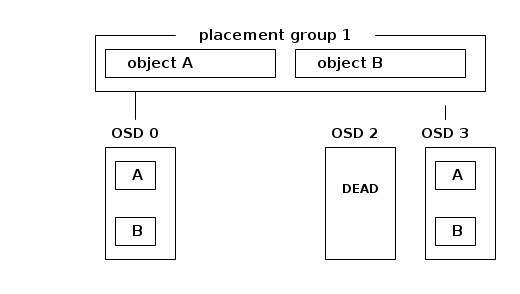

Ceph stores objects in pools which are divided into placement groups. When an object is created, modified or deleted, the placement group logs the operation. If an OSD supporting the placement group is temporarily unavailable, the logs are used for recovery when it comes back.

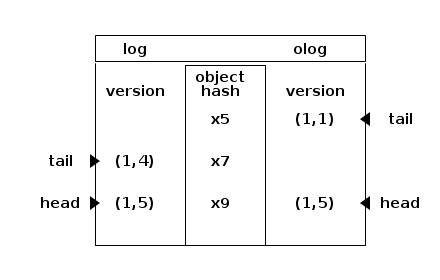

The column to the left shows the log entries, ordered oldest first. For instance, 1,4 is epoch 1 version 4. The tail points to the oldest entry of the log and the head points to the most recent. The column to the right shows the olog: its entries are authoritative and will be merged into the log, which may require discarding divergent entries. Every log entry relates to an object, represented by its hash in the middle column: x5, x7, x9.

The use cases implemented by PGLog::merge_log are demonstrated by individual test cases.
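To make the idea of divergent entries concrete, here is a deliberately simplified sketch in Python. It is not the `PGLog::merge_log` algorithm: it only handles the head of the log, it assumes entries are `(epoch, version, object_hash)` tuples ordered oldest first, and the function name and sample data are made up for the example.

```python
def merge_log_sketch(log, olog):
    """Merge the authoritative olog into log (simplified, head side only).

    Returns (merged, divergent), where divergent holds the local entries
    that the authoritative log does not contain and that are discarded.
    """
    olog_versions = {(epoch, version) for epoch, version, _ in olog}

    # Newest local entry that the authoritative log also contains.
    common = -1
    for i, (epoch, version, _) in enumerate(log):
        if (epoch, version) in olog_versions:
            common = i

    kept = log[:common + 1]
    divergent = log[common + 1:]   # local-only entries past the common point

    # Append the authoritative entries newer than the last kept entry.
    last = kept[-1][:2] if kept else None
    newer = [entry for entry in olog if last is None or entry[:2] > last]
    return kept + newer, divergent


# Made-up sample logs: both agree up to 1,2 and then diverge.
log = [(1, 1, "x5"), (1, 2, "x7"), (1, 3, "x9"), (1, 4, "x9")]
olog = [(1, 1, "x5"), (1, 2, "x7"), (2, 3, "x9")]

merged, divergent = merge_log_sketch(log, olog)
```

In this example the entries 1,3 and 1,4 are discarded as divergent and 2,3 from the olog becomes the new head. Cases such as logs that do not overlap, or an olog whose tail is older than the local tail, are not handled by this sketch.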


Using the largest OpenStack tenant to define an architecture that scales out

The service offering of public cloud providers is designed to match many potential customers. It would be impossible to design the underlying architecture (hardware and software) to accommodate the casual individual as well as the needs of CERN. No matter how big the cloud provider, there are users for whom it is both more cost effective and more efficient to design a private cloud.

The size of the largest user could be used to simplify the architecture and resolve bottlenecks when it scales. The hardware and software are designed to create a production unit that is N times the largest user. For instance, if the largest user requires 1PB of storage, 1,000 virtual machines and 10Gb/s of sustained internet transit, the production unit could be designed to accommodate a maximum of N = 10 users of this size. If the user base grows but the size of the largest user does not change, independent production units are built. All production units have the same size and can be multiplied.
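The sizing rule can be written down as a short calculation. The largest-user figures and N = 10 come from the example above; the aggregate demand figures and the function names are invented for illustration.

```python
import math

# Largest user from the example above: 1PB, 1,000 VMs, 10Gb/s of transit.
LARGEST_USER = {"storage_pb": 1, "vms": 1000, "transit_gbps": 10}
N = 10


def unit_capacity(largest_user, n):
    """A production unit is sized as n times the largest user, per resource."""
    return {resource: amount * n for resource, amount in largest_user.items()}


def units_needed(total_demand, capacity):
    """Number of identical units needed to cover the most constrained resource."""
    return max(math.ceil(total_demand[r] / capacity[r]) for r in capacity)


capacity = unit_capacity(LARGEST_USER, N)
# {"storage_pb": 10, "vms": 10000, "transit_gbps": 100}

# Hypothetical aggregate demand across the whole user base.
demand = {"storage_pb": 23, "vms": 18000, "transit_gbps": 150}
units = units_needed(demand, capacity)
```

Here storage is the binding resource (23PB against 10PB per unit), so three production units are needed. The calculation treats demand as an aggregate and ignores how individual users are packed into units, so it is a lower bound rather than a placement plan.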

Each production unit is independent from the others and can operate standalone. A user confined to a production unit does not require interactions, directly or indirectly, with other production units. While this is true most of the time, a live migration path must be opened temporarily between production units to balance their load. For instance, when the existing production units are too full and a new production unit becomes operational, some users are migrated to it.

Although block and instance live migration are supported within an OpenStack cluster, this architecture would require the ability to live migrate blocks and instances between unrelated OpenStack clusters. In the meantime, cells and aggregates can be used. The user expects this migration to happen transparently when the provider does it behind the scenes. But if the user wants to move from one OpenStack provider to another, the same mechanism could eventually be used. Once the user (the tenant, in OpenStack parlance) is migrated, the credentials can be removed.