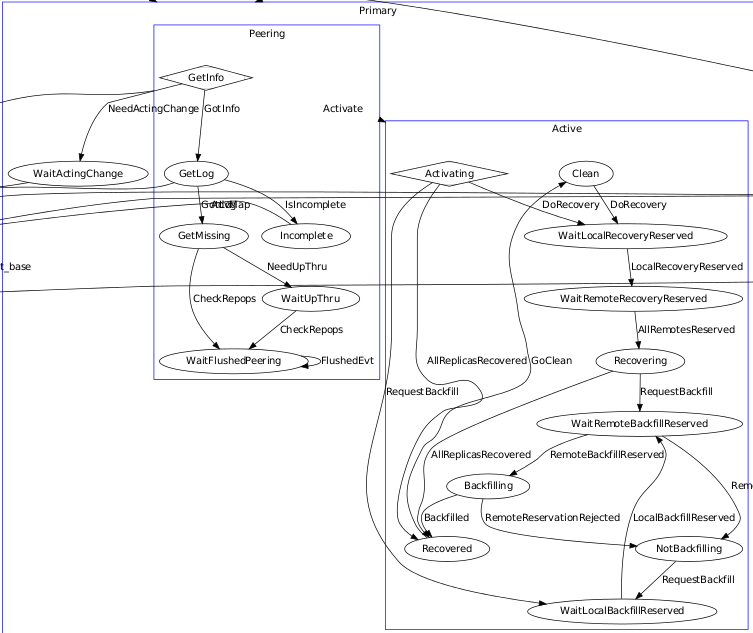

The Peering state machine (based on the Boost Statechart Library) is in charge of making sure the OSDs supporting the placement groups are available, as described in the high-level description of the peering process. It then moves to the Active state machine, which brings the placement group into a consistent state and handles normal operations.

Placement groups rely on OSDs to exchange information. For instance, when the primary OSD receives a placement group creation message, it forwards the information to the placement group, which translates it into events for the newly created state machine.

OSDs also provide a work queue dedicated to peering, into which events are inserted so that they can be processed asynchronously.
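
To make the message-to-event flow and the asynchronous work queue more concrete, here is a minimal single-threaded C++ sketch. The names PgId, PeeringEvent, PgMachine and PeeringQueue are hypothetical and are not Ceph's classes; a real OSD queue is drained by worker threads and needs locking, which is omitted here. Events are only enqueued when a message arrives and are delivered later, in FIFO order, to the state machine of the placement group they target.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified types for illustration; not Ceph's real classes.
using PgId = std::uint32_t;

struct PeeringEvent {
  PgId pgid;         // placement group the event is addressed to
  std::string name;  // e.g. "Initialize", "MNotifyRec"
};

// Stand-in for a per-PG state machine: it only records the events it handled.
struct PgMachine {
  std::vector<std::string> handled;
  void process(const std::string& evt) { handled.push_back(evt); }
};

class PeeringQueue {
 public:
  // Called while handling a message: enqueue only, do not process yet.
  void enqueue(PeeringEvent evt) { queue_.push_back(std::move(evt)); }

  // Called later (by a worker in a real OSD): drain queued events in FIFO
  // order and deliver each one to the state machine of its placement group.
  std::size_t drain(std::map<PgId, PgMachine>& pgs) {
    std::size_t n = 0;
    while (!queue_.empty()) {
      PeeringEvent evt = std::move(queue_.front());
      queue_.pop_front();
      pgs[evt.pgid].process(evt.name);
      ++n;
    }
    return n;
  }

  bool empty() const { return queue_.empty(); }

 private:
  std::deque<PeeringEvent> queue_;
};
```

Because delivery is deferred, the code handling an incoming message never runs a state machine transition itself; it only records what happened.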

Entering the Peering state machine

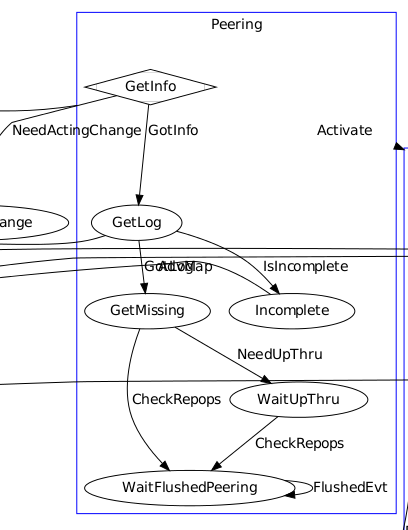

A placement group is constantly in a recovery state, reacting to lost OSDs or changes in the CRUSH map. Each OSD participating in the placement group runs the state machine, so an OSD runs one state machine per placement group it hosts. The state diagram can be generated from the sources with cat src/osd/PG.h src/osd/PG.cc | doc/scripts/gen_state_diagram.py > doc/dev/peering_graph.generated.dot, converted to PDF with dot -Tpdf peering_graph.generated.dot -o peering_graph.generated.pdf, and then browsed and zoomed with evince.

The primary OSD (i.e. the OSD that handles the writes for this placement group) enters the Primary substate.

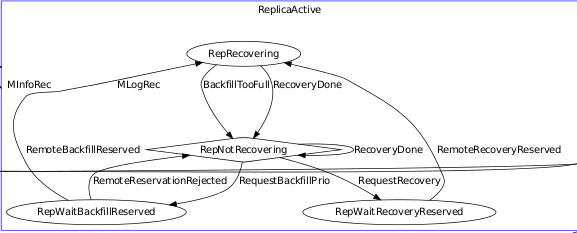

The replica OSDs enter the ReplicaActive state.

When a placement group is loaded or created, the primary OSD enters the RecoveryMachine in the Initial state. If the placement group is loaded from disk after the OSD starts, the calling function transitions with a Load event. If the placement group is created, it transitions to Reset with the Initialize event and immediately afterwards to Started with the ActMap event. The initial state when entering Started is Start.

When entering the Start state, it will decide to enter the Primary state if running on the primary OSD. The initial state when entering Primary is Peering.
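
The start-up path described above can be modeled as a small transition function. This hypothetical sketch encodes nested states as paths such as Started/Primary/Peering/GetInfo, following the initial substates down to GetInfo. It assumes that Load, like Initialize, moves from Initial to Reset (the text does not say where Load leads), and it assumes the non-primary branch is the Stray state, which is where Ceph's sources keep a non-primary OSD before it reaches ReplicaActive.

```cpp
#include <cassert>
#include <string>

// Entering Start immediately picks a branch. On the primary, the nested
// initial states are followed: Primary -> Peering -> GetInfo.
// Assumption: the non-primary branch is modeled as "Stray" and not detailed.
std::string resolve_start(bool is_primary) {
  return is_primary ? "Started/Primary/Peering/GetInfo" : "Started/Stray";
}

// Hypothetical transition function for the start-up events described above.
// Assumption: Load leads from Initial to Reset, like Initialize.
std::string next_state(const std::string& state, const std::string& event,
                       bool is_primary) {
  if (state == "Initial" && (event == "Initialize" || event == "Load"))
    return "Reset";
  if (state == "Reset" && event == "ActMap")
    return resolve_start(is_primary);  // Start is the initial substate of Started
  return state;  // events not modeled here leave the state unchanged
}
```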

GetInfo

The initial state when entering Peering is GetInfo. It gets the pg_info_t data structure by sending a query to the other OSDs.

When another OSD responds with a MSG_OSD_PG_NOTIFY message, the message is translated into an MNotifyRec event, which the Peering state machine reacts to and processes. Once all OSDs have replied, the GotInfo event is posted and the machine transitions to the GetLog state.
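
The GetInfo bookkeeping amounts to tracking which queried OSDs have not answered yet. In this hypothetical sketch, GetInfoSketch and its methods are illustrative names, OSDs are plain integer ids, and on_notify stands in for the reaction to MNotifyRec. It returns true exactly once, when the last outstanding reply arrives and GotInfo should be posted; duplicate or unexpected replies are ignored.

```cpp
#include <cassert>
#include <set>
#include <utility>

// Hypothetical model of GetInfo: the OSDs that were queried for their
// pg_info_t and have not answered yet.
class GetInfoSketch {
 public:
  explicit GetInfoSketch(std::set<int> queried) : pending_(std::move(queried)) {}

  // Handle an MNotifyRec from `from_osd`. Returns true when this reply was
  // the last one outstanding, i.e. when GotInfo should be posted.
  bool on_notify(int from_osd) {
    if (pending_.erase(from_osd) == 0) return false;  // duplicate or unexpected
    return pending_.empty();
  }

  bool got_info() const { return pending_.empty(); }

 private:
  std::set<int> pending_;
};
```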

GetLog

When entering the GetLog state, if the acting set cannot be determined, the machine either exits the Peering state and goes back to Started, or transitions to the Incomplete state with the IsIncomplete event. Incomplete then transitions to Reset after receiving the expected AdvMap event.
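
The branches taken when entering GetLog, together with the reaction of Incomplete to AdvMap, can be sketched as follows. The two boolean inputs are assumptions: the text does not say what decides between going back to Started and going Incomplete, so this hypothetical enter_get_log takes that choice as a parameter instead of computing it.

```cpp
#include <cassert>
#include <string>

// Possible outcomes when entering GetLog, as listed in the text above.
enum class GetLogOutcome { RequestBestLog, BackToStarted, Incomplete };

// Hypothetical inputs: whether an acting set could be determined and, if not,
// whether the placement group should be marked incomplete. What drives that
// choice in the real code is not modeled here.
GetLogOutcome enter_get_log(bool acting_set_determined, bool mark_incomplete) {
  if (acting_set_determined) return GetLogOutcome::RequestBestLog;
  return mark_incomplete ? GetLogOutcome::Incomplete
                         : GetLogOutcome::BackToStarted;
}

// Incomplete waits for the next AdvMap event and then transitions to Reset;
// any other event leaves it in Incomplete.
std::string incomplete_react(const std::string& event) {
  return event == "AdvMap" ? "Reset" : "Incomplete";
}
```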

Otherwise, the OSD that has the best logs is asked to send them. When they are received, the GotLog event processes them with pg->proc_master_log and the machine transitions to the GetMissing state.
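
Choosing which OSD to ask for its logs can be illustrated under a strong simplification. This sketch ranks candidates only by a hypothetical last_update stamp made of an epoch and a version, compared lexicographically (the struct name loosely echoes Ceph's eversion_t), whereas the real selection weighs more criteria. Eversion and pick_best_log_osd are illustrative names, and breaking ties in favor of the lowest OSD id is an assumption.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <optional>
#include <tuple>

// Simplified version stamp: (epoch, version), compared lexicographically.
struct Eversion {
  std::uint32_t epoch;
  std::uint64_t version;
  bool operator<(const Eversion& o) const {
    return std::tie(epoch, version) < std::tie(o.epoch, o.version);
  }
};

// Assumption: "best logs" is reduced to "most recent last_update".
// std::map iterates in ascending OSD id and only a strictly newer stamp
// replaces the current best, so ties keep the lowest OSD id.
std::optional<int> pick_best_log_osd(const std::map<int, Eversion>& last_update) {
  std::optional<int> best;
  for (const auto& [osd, lu] : last_update) {
    if (!best || last_update.at(*best) < lu) best = osd;
  }
  return best;  // empty when there are no candidates
}
```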

GetMissing

When entering the GetMissing state, the machine loops over the OSDs in the acting set and asks for their logs if necessary. Once they have all been received, it transitions to WaitFlushedPeering. However, if up_thru needs to be updated, it transitions to the WaitUpThru state and waits there until the update happens.
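
The exit condition of GetMissing can be sketched as a set of outstanding log requests plus a flag. GetMissingSketch is hypothetical: pending holds the acting-set OSDs whose logs were requested, and need_up_thru stands in for the up_thru check, whose details are not modeled. If nothing had to be requested, the next state is known immediately.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <utility>

// Hypothetical model of GetMissing. `pending` holds the acting-set OSDs whose
// logs were requested; `need_up_thru` stands in for the up_thru check.
class GetMissingSketch {
 public:
  GetMissingSketch(std::set<int> pending, bool need_up_thru)
      : pending_(std::move(pending)), need_up_thru_(need_up_thru) {}

  // Record a log received from `osd` and return the state to be in next.
  std::string on_log(int osd) {
    pending_.erase(osd);
    return next();
  }

  // Stay in GetMissing while logs are outstanding; afterwards go to
  // WaitUpThru if up_thru must be updated first, else to WaitFlushedPeering.
  std::string next() const {
    if (!pending_.empty()) return "GetMissing";
    return need_up_thru_ ? "WaitUpThru" : "WaitFlushedPeering";
  }

 private:
  std::set<int> pending_;
  bool need_up_thru_;
};
```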

WaitFlushedPeering

The WaitFlushedPeering state is re-entered each time a FlushedEvt event is received. It eventually posts the Activate event, which exits the Peering state into the Active state.
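
The re-entry pattern can be illustrated with a hypothetical sketch in which the entry action posts Activate only once the placement group is flushed, and each FlushedEvt records the flush and re-enters the state so that the check runs again. The flushed flag, the entries counter and the posted list are assumptions made for illustration, not fields of Ceph's state.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical model of WaitFlushedPeering. `flushed` records whether the
// placement group has been flushed; posted events are collected in `posted`.
struct WaitFlushedPeeringSketch {
  bool flushed = false;
  int entries = 0;
  std::vector<std::string> posted;

  // Entry action: post Activate only once the placement group is flushed.
  void enter() {
    ++entries;
    if (flushed) posted.push_back("Activate");
  }

  // FlushedEvt marks the placement group as flushed and re-enters the state,
  // which re-runs the entry check above.
  void on_flushed_evt() {
    flushed = true;
    enter();
  }
};
```

Re-entering the state instead of handling the event in place keeps the activation check in a single entry action.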