At the end of last year, a new puppet-ceph module was bootstrapped with the ambitious goal of reuniting the dozens of individual efforts. I’m very happy with what we’ve accomplished. We are making progress although our community is mixed, but more importantly, we do things differently.


Ceph erasure code jerasure plugin benchmarks (Highbank ARMv7)

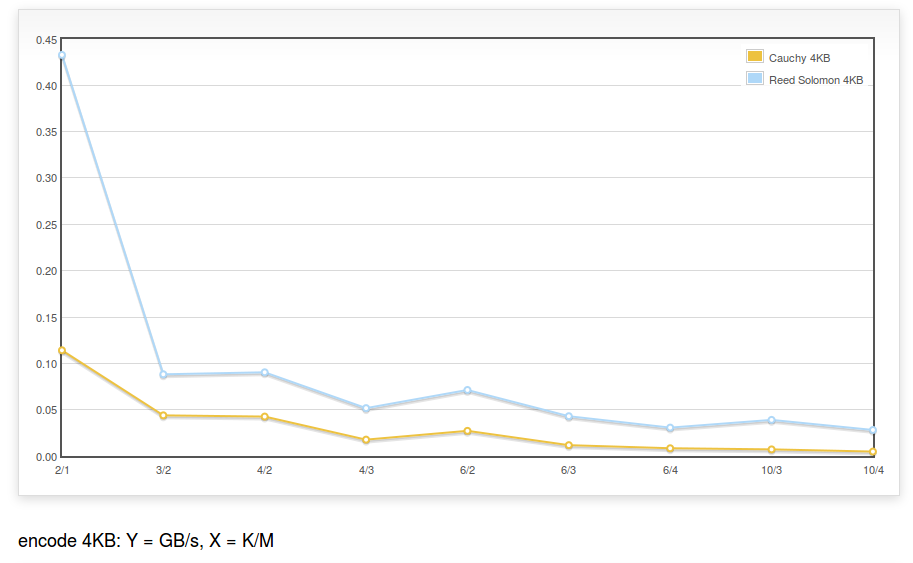

The benchmark described for Intel Xeon is run on a Highbank ARMv7 Processor rev 0 (v7l), made by Calxeda, using the same codebase:

The encoding speed is ~450MB/s for K=2,M=1 (i.e. a RAID5 equivalent) and ~25MB/s for K=10,M=4.

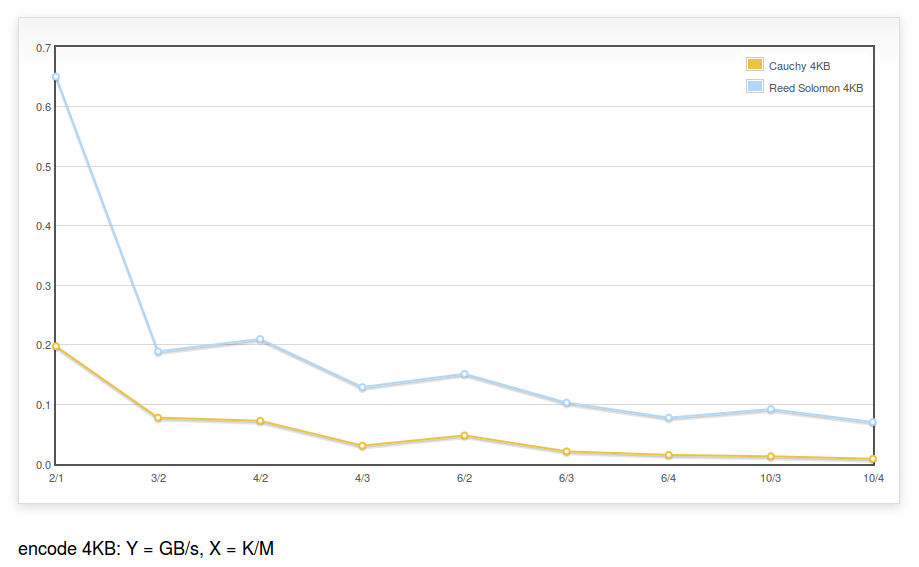

It is also run on a Highbank ARMv7 Processor rev 2 (v7l) (note the 2):

The encoding speed is ~650MB/s for K=2,M=1 (i.e. a RAID5 equivalent) and ~75MB/s for K=10,M=4.

Note: The code of the erasure code plugin does not contain any NEON optimizations.


workaround DNSError when running teuthology-suite

Note: this is only useful for people with access to the Ceph lab.

When running Ceph integration tests with teuthology, teuthology-suite may fail because of a DNS resolution problem:

    $ ./virtualenv/bin/teuthology-suite --base ~/software/ceph/ceph-qa-suite \
        --suite upgrade/firefly-x \
        --ceph wip-8475 --machine-type plana \
        --email loic@dachary.org --dry-run
    2014-06-27 INFO:urllib3.connectionpool:Starting new HTTP connection (1): ...
    requests.exceptions.ConnectionError: HTTPConnectionPool(host='gitbuilder.ceph.com', port=80): Max retries exceeded with url: /kernel-rpm-centos6-x86_64-basic/ref/testing/sha1 (Caused by: [Errno 3] name does not exist)

It may be caused by DNS propagation problems, and querying the ceph.com primary name server directly may work better. If running bind, adding the following to /etc/bind/named.conf.local will forward all ceph.com related DNS queries to the primary server (NS1.DREAMHOST.COM, i.e. 66.33.206.206), assuming /etc/resolv.conf is set to use the local DNS server first:

    zone "ceph.com." {
        type forward;
        forward only;
        forwarders { 66.33.206.206; };
    };

    zone "ipmi.sepia.ceph.com" {
        type forward;
        forward only;
        forwarders {
            10.214.128.4;
            10.214.128.5;
        };
    };

    zone "front.sepia.ceph.com" {
        type forward;
        forward only;
        forwarders {
            10.214.128.4;
            10.214.128.5;
        };
    };

The front.sepia.ceph.com zone will resolve machine names allocated by teuthology-lock and used as targets such as:

    targets:
      ubuntu@saya001.front.sepia.ceph.com: ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABA ... 8r6pYSxH5b
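A quick way to check whether the forwarders help is to try resolving the names that teuthology needs. The following is a minimal sketch and not part of teuthology: the `check_names` helper and its `resolve` parameter are hypothetical names introduced here, and only the standard `socket` module is used.

```python
import socket


def check_names(hostnames, resolve=socket.gethostbyname):
    """Map each hostname to its resolved address, or None if resolution fails.

    `resolve` is injectable (an assumption of this sketch) so the logic can
    be exercised without touching the network.
    """
    results = {}
    for name in hostnames:
        try:
            results[name] = resolve(name)
        except OSError:
            # socket.gaierror ("name does not exist") is a subclass of OSError
            results[name] = None
    return results
```

Calling `check_names(["gitbuilder.ceph.com", "saya001.front.sepia.ceph.com"])` after reloading bind should return an address for each name if the forward zones are picked up; a `None` value means the name still does not resolve.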

Locally repairable codes and implied parity

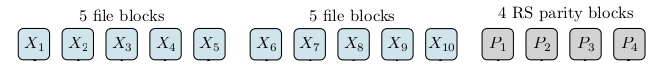

When a Ceph OSD is lost in an erasure coded pool, it can be recovered using the others.

For instance, if OSD X3 is lost, the blocks stored on OSDs X1, X2, X4 to X10 and P1 to P4 are retrieved by the primary OSD and the erasure code plugin uses them to rebuild the content of X3.

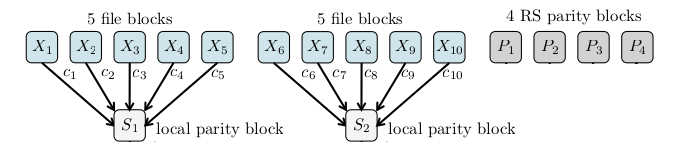

Locally repairable codes are designed to lower the bandwidth requirements when recovering from the loss of a single OSD. A local parity block is calculated for each group of five blocks: S1 for X1 to X5 and S2 for X6 to X10. When the X3 OSD is lost, instead of retrieving blocks from 13 OSDs, it is enough to retrieve X1, X2, X4, X5 and S1, that is 5 OSDs.
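The local repair can be illustrated with a small sketch. It assumes the local parity is a plain XOR of the five blocks in its group, which is an assumption made for illustration only (the actual encoding function may differ), and the 4-byte block contents are made up.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equally sized byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Ten hypothetical 4-byte data blocks X1..X10 (contents are arbitrary)
data = {f"X{i}": bytes([i] * 4) for i in range(1, 11)}

# Assumed local parity: S1 covers X1..X5, S2 covers X6..X10
s1 = xor_blocks([data[f"X{i}"] for i in range(1, 6)])
s2 = xor_blocks([data[f"X{i}"] for i in range(6, 11)])

# X3 is lost: XOR the four surviving blocks of its group with S1
survivors = [data["X1"], data["X2"], data["X4"], data["X5"], s1]
rebuilt_x3 = xor_blocks(survivors)

print(rebuilt_x3 == data["X3"], "using", len(survivors), "blocks instead of 13")
```

Because XOR is its own inverse, combining S1 with the four surviving blocks of the group cancels them out and leaves X3.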

In some cases, local parity blocks can help recover from the loss of more blocks than any individual encoding function can. In the example above, let's say five blocks are lost: X1, X2, X3, X4 and X8. The block X8 can be recovered from X6, X7, X9, X10 and S2. Now that only four blocks are missing, the four global parity blocks P1 to P4 are enough to recover them. The combined effect of the local parity blocks and the global parity blocks is as if there were an implied parity block.
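The two-stage reasoning can be sketched as a small planner that only counts blocks and does no actual decoding. It assumes the global code can rebuild any four missing blocks among X1 to X10 and P1 to P4; the `two_stage_recovery` function and the `GROUPS` layout are hypothetical names for this illustration.

```python
# Local groups from the example: each local parity covers five data blocks
GROUPS = {
    "S1": ["X1", "X2", "X3", "X4", "X5"],
    "S2": ["X6", "X7", "X8", "X9", "X10"],
}
GLOBAL_PARITY = 4  # P1..P4, assumed to tolerate any four lost blocks


def two_stage_recovery(lost):
    """Return (ok, steps): local repairs first, then the global parity."""
    lost = set(lost)
    steps = []
    for parity, members in GROUPS.items():
        missing = [block for block in members + [parity] if block in lost]
        if len(missing) == 1:
            # A group missing exactly one block can rebuild it locally
            steps.append(f"{missing[0]} from local group {parity}")
            lost.discard(missing[0])
    # Local parity blocks are not covered by the global code in this sketch;
    # they can be recomputed once the data blocks are back.
    remaining = sorted(block for block in lost if not block.startswith("S"))
    ok = len(remaining) <= GLOBAL_PARITY
    if ok and remaining:
        steps.append(f"{', '.join(remaining)} from global parity")
    return ok, steps


ok, steps = two_stage_recovery(["X1", "X2", "X3", "X4", "X8"])
print(ok, steps)
```

A `False` result only means this simple two-stage strategy is not enough; it does not prove that the data is unrecoverable by other means.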