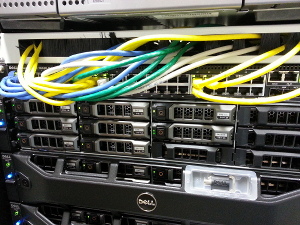

The UMR de Génétique Végétale is a state-funded French research facility located in a historical monument: a large farm surrounded by fields harvested for experiments. Johann Joets was assigned a most unusual mission: set up a state-of-the-art datacenter in a former pigsty. His colleague Olivier Langella does the system administration for PAPPSO and researched an extendable storage solution able to transparently sustain the loss of any hardware component. A simple Ceph setup was chosen and has been in use since 2012.

Continue reading “Ceph use case : proteomic analysis”

Ceph dialog : PGBackend

This is a dialog where Samuel Just, Ceph core developer, answers Loïc Dachary’s questions to explain the details of PGBackend (i.e. placement group backend). It is a key component of the implementation of erasure coded pools.


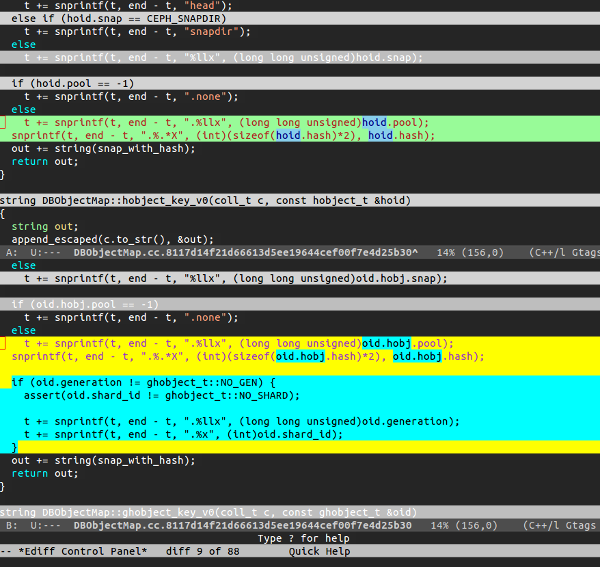

tip to review a large patch with magit and ediff

When reviewing a large changeset with magit, it can be difficult to separate meaningful changes from purely syntactic substitutions. Using ediff to navigate the patch highlights the words changed between two lines instead of just showing one line removed and another added.

In the above screenshot, the change from oid to oid.hobj is purely syntactic, whereas the new block after oid.generation != ghobject_t::NO_GEN deserves more attention.
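To illustrate why word-level highlighting helps, here is a minimal Python sketch that reports which tokens differ between two versions of a line. It relies on the standard difflib module and is not how ediff is implemented; the helper name changed_words and the two sample lines are made up for the illustration.

```python
import difflib
import re


def changed_words(old_line, new_line):
    """Return (removed, added) tokens between two versions of a line."""
    # Split into identifiers and single punctuation characters.
    def tokenize(text):
        return re.findall(r"\w+|[^\w\s]", text)

    old, new = tokenize(old_line), tokenize(new_line)
    removed, added = [], []
    matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            removed.extend(old[i1:i2])
        if tag in ("replace", "insert"):
            added.extend(new[j1:j2])
    return removed, added


# Hypothetical lines resembling the oid to oid.hobj substitution.
removed, added = changed_words("t->remove(coll, oid);",
                               "t->remove(coll, oid.hobj);")
print("removed:", removed, "added:", added)
```

A line-based diff would show the whole line as removed and re-added, while the token-level comparison isolates the two inserted tokens.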


Ceph development statistics : 2010 to present

Running git_stats against Ceph master today produced the following output.

The statistics from before 2010 were trimmed because the full report is too slow to navigate.

    sudo apt-get install ruby1.9.1-dev ruby-mkrf
    sudo gem install git_stats
    git clone git@github.com:ceph/ceph.git
    cd ceph
    git_stats generate --from=07005fa1e501973846d666ed073ce64b45144d39

Ceph dialog : monitors and paxos

This is a dialog (also published on ceph.com) where Joao Luis, Ceph core developer with an intimate knowledge of the monitor, answers questions from Loïc Dachary, who is discovering this area of the Ceph code base. Many answers are covered, from a different angle, in Joao’s article Ceph’s New Monitor Changes (March 2013).


Desktop based Ceph cluster for file sharing

On July 1st, 2013, Heinlein set up a Ceph cuttlefish cluster (now upgraded to version 0.61.8) using the desktops of seven employees willing to host a Ceph node and share part of their disk. Some nodes are connected with 1Gb/s links and others only have 100Mb/s. The cluster supports a 4TB Ceph file system

    ceph-office$ df -h .
    Filesystem                 Size  Used Avail Use% Mounted on
    x.x.x.x,y.y.y.y,z.z.z.z:/  4,0T  2,0T  2,1T  49% /mnt/ceph-office

which is used as a temporary space to exchange files. On a typical day at least one desktop is switched off and on again. The cluster has been self-healing since its installation, with the sole exception of a stuck placement group that was fixed with a manual pg repair.


Resizeable and resilient mail storage with Ceph

A common use case for Ceph is to store mail as objects using the S3 API. Although most mail servers do not yet support such a storage backend, deploying them on Ceph block devices is a beneficial first step. The disk can be resized live, while the mail server is running, and will remain available even when a machine goes down. In the following example it gains ~100GB every 30 seconds:

    $ df -h /data/bluemind
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/rbd2       1.9T  196M  1.9T   1% /data/bluemind
    $ sleep 30 ; df -h /data/bluemind
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/rbd2       2.0T  199M  2.0T   1% /data/bluemind
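The ~100GB figure can be checked from the two Size columns. The following Python sketch converts df -h sizes to bytes and computes the difference. It assumes the binary units printed by df -h (1T = 1024G), and the helper names are made up for the illustration. Since df -h rounds to one decimal, the result is only an approximation.

```python
# Binary multipliers, as used by df -h.
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(text):
    """Convert a df -h size such as '1.9T' or '4,0T' to bytes."""
    text = text.strip().replace(",", ".")
    suffix = text[-1].upper()
    if suffix in UNITS:
        return float(text[:-1]) * UNITS[suffix]
    return float(text)


def growth_gb(before, after):
    """Difference between two df -h size readings, in GB."""
    return (parse_size(after) - parse_size(before)) / UNITS["G"]


gained = growth_gb("1.9T", "2.0T")
print(f"about {gained:.0f} GB gained between the two readings")
```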

When the mail system is upgraded to an S3-capable mail storage backend, it will be able to use the Ceph S3 API right away: Ceph uses the same underlying servers for both purposes (block and object storage).


HOWTO run a Ceph QA suite

Ceph has extensive QA suites that are made of individual teuthology tasks (see btrfs.yaml for instance). The schedule_suite.sh helper script can be used as follows to run the entire rados suite:

    ./schedule_suite.sh rados wip-5510 testing \
        loic@dachary.org basic master plana

In this command, wip-5510 is the branch to be tested, as compiled by gitbuilder (which happens automatically after each commit); testing is the kernel to run on the test machines, which is relevant when using krbd; basic is the compilation flavor of the wip-5510 branch (it could also be notcmalloc or gcov); and master is the teuthology branch to use. Finally, plana specifies the type of machines to be used, which is only relevant when using the Inktank lab.
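Because the arguments are positional, it is easy to swap two of them. The Python sketch below names each argument and rebuilds the same command line. The SuiteRun class and its field names are made up for the illustration, and the email field is assumed to be the address that is notified when the jobs complete.

```python
import shlex
from dataclasses import dataclass


@dataclass
class SuiteRun:
    """Named view of the positional schedule_suite.sh arguments."""
    suite: str              # e.g. rados
    ceph_branch: str        # branch built by gitbuilder
    kernel: str             # kernel installed on the test machines
    email: str              # assumed: address notified on completion
    flavor: str             # basic, notcmalloc or gcov
    teuthology_branch: str  # teuthology branch to use
    machine_type: str       # e.g. plana

    def command(self):
        """Return the shell command, in the order shown in the post."""
        return shlex.join([
            "./schedule_suite.sh", self.suite, self.ceph_branch,
            self.kernel, self.email, self.flavor,
            self.teuthology_branch, self.machine_type,
        ])


run = SuiteRun("rados", "wip-5510", "testing", "loic@dachary.org",
               "basic", "master", "plana")
print(run.command())
```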

The jobs are queued on the queue_host defined in .teuthology.yaml

    queue_host: teuthology.dachary.org
    queue_port: 11300

The script schedules a number of jobs (~300 for rados) and a mail is sent when they complete.

    name loic-2013-08-14_22:00:20-rados-wip-5510-testing-basic-plana
    INFO:teuthology.suite:Collection basic in /home/loic/src/ceph-qa-suite/suites/rados/basic
    INFO:teuthology.suite:Running teuthology-schedule with facets collection:basic clusters:fixed-2.yaml fs:btrfs.yaml msgr-failures:few.yaml tasks:rados_api_tests.yaml
    Job scheduled with ID 106579
    INFO:teuthology.suite:Running teuthology-schedule with facets collection:basic clusters:fixed-2.yaml fs:btrfs.yaml msgr-failures:few.yaml tasks:rados_cls_all.yaml
    Job scheduled with ID 106580
    ....
    INFO:teuthology.suite:Running teuthology-schedule with facets collection:verify 1thrash:none.yaml clusters:fixed-2.yaml fs:btrfs.yaml msgr-failures:few.yaml tasks:rados_cls_all.yaml validater:valgrind.yaml
    Job scheduled with ID 106880
    Job scheduled with ID 106881
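With around 300 jobs, it can be handy to collect the job IDs from the scheduling output. A minimal Python sketch follows. It only assumes the "Job scheduled with ID" lines shown above, and the function name is made up for the illustration.

```python
import re

# Matches the lines printed once per scheduled job.
JOB_ID = re.compile(r"Job scheduled with ID (\d+)")


def scheduled_job_ids(output):
    """Return the job IDs found in schedule_suite.sh output, in order."""
    return [int(job_id) for job_id in JOB_ID.findall(output)]


sample = """\
INFO:teuthology.suite:Collection basic in /home/loic/src/ceph-qa-suite/suites/rados/basic
Job scheduled with ID 106579
Job scheduled with ID 106580
"""
ids = scheduled_job_ids(sample)
print(len(ids), "jobs scheduled, first", ids[0], "last", ids[-1])
```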

HOWTO valgrind Ceph with teuthology

Teuthology can run a designated daemon with valgrind and preserve the report for analysis. The notcmalloc flavor is preferred to silence valgrind errors unrelated to Ceph itself.

    - install:
        project: ceph
        branch: wip-5510
        flavor: notcmalloc

A daemon running with valgrind is much slower, and the resulting warnings in the logs should be whitelisted because they are not relevant in this context:

    log-whitelist:
    - slow request
    - clocks
    - wrongly marked me down
    - objects unfound and apparently lost

The first osd is marked to be run with valgrind:

    - ceph:
        valgrind:
          osd.0: --tool=memcheck

After running teuthology with

    ./virtualenv/bin/teuthology -v --archive /tmp/wip-5510-valgrind \
        --owner loic@dachary.org \
        ~/private/ceph/targets.yaml \
        ~/private/ceph/wip-5510-valgrind.yaml

errors may show

    DEBUG:teuthology.run_tasks:Exception was not quenched, exiting: Exception: saw valgrind issues
    INFO:teuthology.run:Summary data:
    {duration: 344.2433888912201, failure_reason: saw valgrind issues, flavor: notcmalloc,
    owner: loic@dachary.org, success: false}
    INFO:teuthology.run:FAIL

The valgrind XML report containing the details of the error can be retrieved from /tmp/wip-5510-valgrind/remote/ubuntu@target1/log/valgrind/osd.0.log.gz
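A quick way to get an overview of such a report is to count the errors by kind. The Python sketch below assumes the file is a complete, well-formed valgrind XML document in which each error element has a kind child. The function name is made up for the illustration, and a report truncated by a killed daemon would need more lenient parsing.

```python
import gzip
import xml.etree.ElementTree as ET
from collections import Counter


def valgrind_error_kinds(path):
    """Count the <kind> of every <error> in a gzipped valgrind XML report."""
    with gzip.open(path, "rb") as report:
        root = ET.parse(report).getroot()
    return Counter(error.findtext("kind", default="unknown")
                   for error in root.iter("error"))


# Usage, with the path from the archive directory shown above:
# kinds = valgrind_error_kinds(
#     "/tmp/wip-5510-valgrind/remote/ubuntu@target1/log/valgrind/osd.0.log.gz")
# for kind, count in kinds.most_common():
#     print(count, kind)
```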


HOWTO install Ceph teuthology on OpenStack

Teuthology is used to run Ceph integration tests. It is installed from sources and will use newly created OpenStack instances as targets:

    $ cat targets.yaml
    targets:
      ubuntu@target1.novalocal: ssh-rsa AAAAB3NzaC1yc2...
      ubuntu@target2.novalocal: ssh-rsa AAAAB3NzaC1yc2...

The targets must allow passwordless ssh connections as the ubuntu user, with full sudo privileges, from the machine running teuthology. An Ubuntu precise 12.04.2 target must be configured with:

    $ wget -q -O- 'https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc' | \
        sudo apt-key add -
    $ echo 'ubuntu hard nofile 16384' | sudo tee /etc/security/limits.d/ubuntu.conf
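The entries in targets.yaml pair a user@host with the public host key of the instance. As a sketch, the Python function below builds the file content from lines in the "host keytype key" format printed by ssh-keyscan. Gathering the keys with ssh-keyscan is an assumption (the post does not say how they were collected), and the function name and the shortened key are made up for the illustration.

```python
def targets_yaml(keyscan_output, user="ubuntu"):
    """Build targets.yaml content from 'host keytype key' lines."""
    lines = ["targets:"]
    for line in keyscan_output.splitlines():
        line = line.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith("#"):
            continue
        host, key_type, key = line.split(None, 2)
        lines.append(f"  {user}@{host}: {key_type} {key}")
    return "\n".join(lines) + "\n"


# Placeholder keys, not real host keys.
scan = """\
target1.novalocal ssh-rsa AAAAB3NzaC1yc2EXAMPLE1
target2.novalocal ssh-rsa AAAAB3NzaC1yc2EXAMPLE2
"""
print(targets_yaml(scan), end="")
```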

Teuthology can then be tried with a configuration file that does nothing but install Ceph and run the daemons.

    $ cat noop.yaml
    check-locks: false
    roles:
    - - mon.a
      - osd.0
    - - osd.1
      - client.0
    tasks:
    - install:
        project: ceph
        branch: stable
    - ceph:
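The roles entry is a list of lists: each inner list groups the daemons that run on one machine. The Python sketch below shows that structure and pairs each group with a target. It assumes the groups are matched to the targets one to one and in order, which the post does not state explicitly, and the function name is made up for the illustration.

```python
def assign_roles(targets, roles):
    """Pair each target with one group of roles, in order."""
    if len(roles) > len(targets):
        raise ValueError(
            f"{len(roles)} role groups but only {len(targets)} targets")
    return dict(zip(targets, roles))


# Same role groups as in noop.yaml.
roles = [["mon.a", "osd.0"], ["osd.1", "client.0"]]
targets = ["ubuntu@target1.novalocal", "ubuntu@target2.novalocal"]
for target, group in assign_roles(targets, roles).items():
    print(target, "runs", ", ".join(group))
```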

The output should look like this:

    $ ./virtualenv/bin/teuthology targets.yaml noop.yaml
    INFO:teuthology.run_tasks:Running task internal.save_config...
    INFO:teuthology.task.internal:Saving configuration
    INFO:teuthology.run_tasks:Running task internal.check_lock...
    INFO:teuthology.task.internal:Lock checking disabled.
    INFO:teuthology.run_tasks:Running task internal.connect...
    INFO:teuthology.task.internal:Opening connections...
    DEBUG:teuthology.task.internal:connecting to ubuntu@teuthology2.novalocal
    DEBUG:teuthology.task.internal:connecting to ubuntu@teuthology1.novalocal
    ...
    INFO:teuthology.run:Summary data:
    {duration: 363.5891010761261, flavor: basic, owner: ubuntu@teuthology, success: true}
    INFO:teuthology.run:pass
