The githubpy Python library provides a thin layer on top of the GitHub v3 API, which is convenient because the official GitHub documentation can be used directly. The undocumented behavior of GitHub is outside the scope of this library and needs to be addressed by the caller.

For instance, creating a repository is asynchronous, and checking for its existence right after the call may fail. Something similar to the following functions should be used to wait until it exists:

    import time

    import github

    def project_exists(self, name):
        retry = 10
        while retry > 0:
            try:
                # list the repositories of the authenticated user
                for repo in self.github.g.user('repos').get():
                    if repo['name'] == name:
                        return True
                return False
            except github.ApiError:
                # the listing itself may fail, wait and try again
                time.sleep(5)
            retry -= 1
        raise Exception('error getting the list of repos')

    def add_project(self):
        r = self.github.g.user('repos').post(
            name=GITHUB['repo'],
            auto_init=True)
        assert r['full_name'] == GITHUB['username'] + '/' + GITHUB['repo']
        # the repository is created asynchronously, poll until it shows up
        while not self.project_exists(GITHUB['repo']):
            pass
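The polling loop above can also be written as a small generic helper that bounds the number of attempts and sleeps between them instead of spinning. This is only a minimal sketch: `wait_until` and its parameters are hypothetical names, not part of githubpy or ceph-workbench.

```python
import time


def wait_until(predicate, attempts=10, delay=5.0, sleep=time.sleep):
    """Poll predicate() until it returns True or attempts run out.

    Returns True as soon as predicate() is truthy, False otherwise.
    `sleep` is injectable so the helper can be tested without waiting.
    """
    for attempt in range(attempts):
        if predicate():
            return True
        # Do not sleep after the last attempt: nothing is left to wait for.
        if attempt < attempts - 1:
            sleep(delay)
    return False
```

With such a helper, the last two lines of `add_project` could become something like `assert wait_until(lambda: self.project_exists(GITHUB['repo']))`, which gives up after a bounded time rather than looping forever.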

Another example is merging a pull request. The merge request sometimes fails (503, "cannot be merged" error) although the merge succeeds in the background. To cope with that, the state of the pull request should be checked immediately after the merge fails. It can be either merged or closed (although the GitHub web interface shows it as merged). The following function can be used to cope with that behavior:

    import logging
    import time

    import github

    def merge(self, pr, message):
        retry = 10
        while retry > 0:
            try:
                # check the state first: a merge reported as failed
                # may have succeeded in the background
                current = self.github.repos().pulls(pr).get()
                if current['state'] in ('merged', 'closed'):
                    return
                logging.info('state = ' + current['state'])
                self.github.repos().pulls(pr).merge().put(
                    commit_message=message)
            except github.ApiError as e:
                logging.error(str(e.response))
                logging.exception('merging ' + str(pr) + ' ' + message)
                time.sleep(5)
            retry -= 1
        assert retry > 0
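The same idea, trusting the observed state of the pull request rather than the response of the merge call, can be sketched independently of any GitHub client. In the sketch below `merge_and_verify`, `get_state`, `do_merge` and `TransientError` are hypothetical names introduced for illustration; `TransientError` stands in for whatever exception the client library raises.

```python
import time


class TransientError(Exception):
    """Stand-in for the API error raised by the client library (assumption)."""


def merge_and_verify(get_state, do_merge, attempts=10, delay=5.0,
                     sleep=time.sleep):
    """Try to merge, trusting the observed state over the merge response.

    get_state() returns the current pull request state as a string.
    do_merge() requests the merge and may raise TransientError even
    when the merge eventually succeeds on the server side.
    Returns the number of merge requests that were sent.
    """
    sent = 0
    for _ in range(attempts):
        try:
            # Check first: a previous "failed" request may have worked.
            if get_state() != 'open':
                return sent
            sent += 1
            do_merge()
        except TransientError:
            sleep(delay)
    # One last look, in case the final request went through.
    if get_state() != 'open':
        return sent
    raise RuntimeError('pull request still open after %d attempts' % attempts)
```

Passing the two operations as callables keeps the retry logic testable with fakes, which matters for a failure that only shows up intermittently against the real service.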

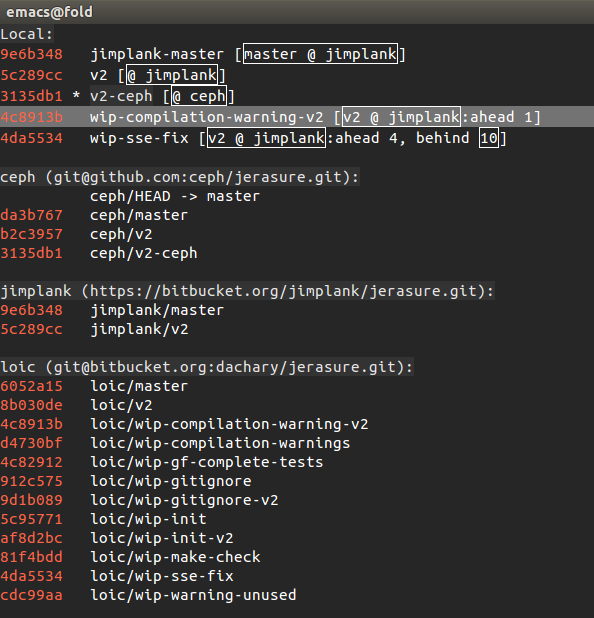

These two examples have been implemented as part of the ceph-workbench integration tests. The behavior described above can be reproduced by running the tests in a loop for a few hours.
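Running a test in a loop for a fixed amount of time can be sketched as follows. `run_repeatedly` is a hypothetical helper, and `run_once` would wrap whatever invokes the integration test; the actual command is not given here.

```python
import time


def run_repeatedly(run_once, duration, clock=time.monotonic):
    """Call run_once() until `duration` seconds have elapsed.

    run_once() is expected to raise on failure. Returns a tuple
    (runs, failures) where failures is a list of (run index, exception).
    """
    deadline = clock() + duration
    runs = 0
    failures = []
    while clock() < deadline:
        try:
            run_once()
        except Exception as error:  # keep going: failures are intermittent
            failures.append((runs, error))
        runs += 1
    return runs, failures
```

Collecting the failures instead of stopping at the first one gives a rough idea of how often the intermittent behavior occurs over the run.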