Although it is extremely unlikely to lose an object stored in Ceph, it is not impossible. When it happens to a Cinder volume based on RBD, knowing which volume has a missing object will help with disaster recovery.
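As an illustration, here is a minimal sketch of the lookup in Python. It assumes RBD format 2 images, whose data objects are named `rbd_data.<id>.<object number in hex>`, and assumes the `block_name_prefix` of each Cinder volume (named `volume-<uuid>`) has already been collected with `rbd info`. The `find_volume` helper and the sample prefixes are hypothetical.

```python
RBD_DEFAULT_OBJECT_SIZE = 4 * 1024 * 1024  # 4 MiB, i.e. order 22


def find_volume(object_name, prefixes, object_size=RBD_DEFAULT_OBJECT_SIZE):
    """Map a missing RBD data object to (image name, byte offset), or None.

    object_name: e.g. "rbd_data.102674b0dc51.0000000000000004" (format 2).
    prefixes: {image name: block_name_prefix as shown by `rbd info`}.
    """
    # The object number is the hexadecimal suffix after the last dot.
    prefix, _, number = object_name.rpartition(".")
    for image, block_name_prefix in prefixes.items():
        if block_name_prefix == prefix:
            # Objects are laid out sequentially, so the number gives the offset.
            return image, int(number, 16) * object_size
    return None
```

The offset assumes the default 4 MiB object size; images created with a different order need a different `object_size`. Knowing the offset tells which part of the volume (and possibly which file system) is affected.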

Continue reading “What cinder volume is missing an RBD object ?”

Tell teuthology to use a local ceph-qa-suite directory

By default teuthology will clone the ceph-qa-suite repository and use the tasks it contains. If tasks have been modified locally, teuthology can be instructed to use a local directory by inserting something like:

suite_path: /home/loic/software/ceph/ceph-qa-suite

in the teuthology job yaml file. The directory must also be added to the PYTHONPATH:

PYTHONPATH=/home/loic/software/ceph/ceph-qa-suite \
  ./virtualenv/bin/teuthology --owner loic@dachary.org \
  /tmp/work.yaml targets.yaml
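The same invocation can be scripted, for instance from Python. The sketch below is hypothetical (`teuthology_command` is not part of teuthology) and simply mirrors the command line above, except that it prepends the suite directory to any existing PYTHONPATH instead of overwriting it.

```python
import os


def teuthology_command(suite_path, owner, job_yaml, targets_yaml, env=None):
    """Return (env, argv) to run teuthology with a local ceph-qa-suite checkout."""
    env = dict(os.environ if env is None else env)
    existing = env.get("PYTHONPATH")
    # Keep whatever was already on the PYTHONPATH, after the local suite.
    env["PYTHONPATH"] = suite_path if not existing else suite_path + os.pathsep + existing
    argv = ["./virtualenv/bin/teuthology", "--owner", owner, job_yaml, targets_yaml]
    return env, argv
```

The result can then be run with `subprocess.run(argv, env=env, check=True)`. The `suite_path` in the job yaml file should point to the same directory.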

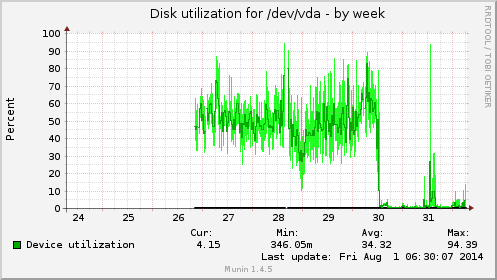

Temporarily disable Ceph scrubbing to resolve high IO load

In a Ceph cluster with low bandwidth, the root disk of an OpenStack instance became extremely slow for days.

When an OSD is scrubbing a placement group, it has a significant impact on performance, and this is expected for a short while. In this case, however, it slowed down to the point where the OSD was marked down because it did not reply in time:

2014-07-30 06:43:27.331776 7fcd69ccc700 1 mon.bm0015@0(leader).osd e287968 we have enough reports/reporters to mark osd.12 down

To get out of this situation, both scrub and deep scrub were deactivated with:

root@bm0015:~# ceph osd set noscrub
set noscrub
root@bm0015:~# ceph osd set nodeep-scrub
set nodeep-scrub
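Scrubbing is turned back on by clearing the same flags with `ceph osd unset`. A minimal Python sketch that builds both forms of the commands, printing them by default and only running them when `dry_run=False`. The helper names are hypothetical, and running the commands for real requires the ceph CLI and an admin keyring on the host.

```python
import subprocess

# Flags used above to stop scrubbing and deep scrubbing cluster-wide.
SCRUB_FLAGS = ("noscrub", "nodeep-scrub")


def scrub_flag_commands(disable):
    """Return the ceph commands that set (disable=True) or unset the flags."""
    action = "set" if disable else "unset"
    return [["ceph", "osd", action, flag] for flag in SCRUB_FLAGS]


def toggle_scrubbing(disable, dry_run=True):
    """Print the commands, or run them when dry_run is False."""
    for command in scrub_flag_commands(disable):
        if dry_run:
            print(" ".join(command))
        else:
            subprocess.run(command, check=True)
```

For instance `toggle_scrubbing(disable=False)` prints `ceph osd unset noscrub` followed by `ceph osd unset nodeep-scrub`.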

After a day, the IO load had remained stable, confirming that no other factor was causing it, and scrubbing was re-activated. The context causing the excessive IO load had been changed, and the problem did not repeat itself over the next 24 hours, even though the logs on the same machine confirmed that scrubbing had resumed:

2014-07-31 15:29:54.783491 7ffa77d68700 0 log [INF] : 7.19 deep-scrub ok
2014-07-31 15:29:57.935632 7ffa77d68700 0 log [INF] : 3.5f deep-scrub ok
2014-07-31 15:37:23.553460 7ffa77d68700 0 log [INF] : 7.1c deep-scrub ok
2014-07-31 15:37:39.344618 7ffa77d68700 0 log [INF] : 3.22 deep-scrub ok
2014-08-01 03:25:05.247201 7ffa77d68700 0 log [INF] : 3.46 deep-scrub ok
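To confirm that scrubbing resumed, the successful scrub entries can be extracted from an OSD log. A sketch, assuming the log line format shown above (other Ceph versions may format these lines differently); `completed_scrubs` is a hypothetical helper.

```python
import re
from datetime import datetime

# Matches OSD log entries such as
# "2014-07-31 15:29:54.783491 7ffa77d68700 0 log [INF] : 7.19 deep-scrub ok"
SCRUB_OK = re.compile(
    r"(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d+) \S+ \d+ log \[INF\] : "
    r"(\S+) (deep-scrub|scrub) ok"
)


def completed_scrubs(log_text):
    """Return (timestamp, pg id, scrub kind) for each successful scrub in log_text."""
    return [
        (datetime.strptime(ts, "%Y-%m-%d %H:%M:%S.%f"), pgid, kind)
        for ts, pgid, kind in SCRUB_OK.findall(log_text)
    ]
```

The most recent entry gives the last time a placement group was successfully scrubbed on that OSD.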

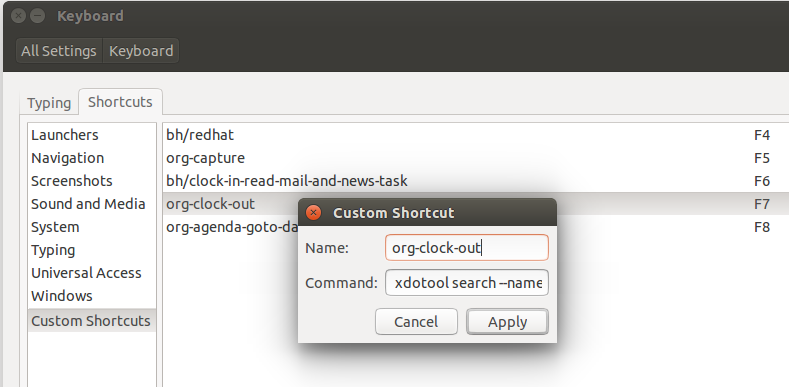

Global shortcuts for emacs org-mode on Ubuntu

Let's say F7 is bound, in emacs, to the org-clock-out function of Org Mode, as a shortcut to quickly stop the clock accumulating the time spent on the current task.

(global-set-key (kbd "<f7>") 'org-clock-out)

F7 can be sent to the emacs window from the command line with:

xdotool search --name 'emacs@fold' key F7

If emacs needs to be displayed to the user (in case it was iconified or on another desktop), the windowactivate command can be added:

xdotool search --name 'emacs@fold' windowactivate key F7

On Ubuntu 14.04 this command can be bound to F7, regardless of which window has focus, via the Shortcuts tab of the Keyboard section of System Settings, as shown below:

Ceph disaster recovery scenario

A datacenter containing three hosts of a non-profit Ceph and OpenStack cluster suddenly lost connectivity, and it could not be restored within 24 hours. The corresponding OSDs were marked out manually. The Ceph pool dedicated to this datacenter became unavailable, as expected. However, a pool that was supposed to have at most one copy per datacenter turned out to have a faulty CRUSH ruleset. As a result, some placement groups in this pool were stuck.

$ ceph -s
...
health HEALTH_WARN 1 pgs degraded; 7 pgs down; 7 pgs peering; 7 pgs recovering; 7 pgs stuck inactive; 15 pgs stuck unclean; recovery 184/1141208 degraded (0.016%)
...
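When following such a situation over time, the health summary can be turned into numbers. A sketch assuming the `health HEALTH_WARN ...` line format shown above (newer Ceph releases format `ceph -s` differently); `parse_health` is a hypothetical helper.

```python
import re

# "7 pgs stuck inactive" -> count and PG state description.
PG_ITEM = re.compile(r"^(\d+) pgs (.+)$")
# "recovery 184/1141208 degraded (0.016%)" -> degraded and total objects.
RECOVERY_ITEM = re.compile(r"^recovery (\d+)/(\d+) degraded")


def parse_health(line):
    """Return (status, {pg state: count}, (degraded, total) or None)."""
    text = line.strip()
    if text.startswith("health "):
        text = text[len("health "):]
    status, _, details = text.partition(" ")
    pg_counts = {}
    degraded = None
    for item in details.split(";"):
        item = item.strip()
        pg_match = PG_ITEM.match(item)
        recovery_match = RECOVERY_ITEM.match(item)
        if pg_match:
            pg_counts[pg_match.group(2)] = int(pg_match.group(1))
        elif recovery_match:
            degraded = (int(recovery_match.group(1)), int(recovery_match.group(2)))
    return status, pg_counts, degraded
```

Applied to the output above, it reports 7 placement groups stuck inactive and 184 of 1141208 objects degraded.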

puppet-ceph update

At the end of last year, a new puppet-ceph module was bootstrapped with the ambitious goal of reuniting the dozens of individual efforts. I’m very happy with what we’ve accomplished. We are making progress even though our community is mixed and, more importantly, we do things differently.

Continue reading “puppet-ceph update”

Ceph erasure code jerasure plugin benchmarks (Highbank ARMv7)

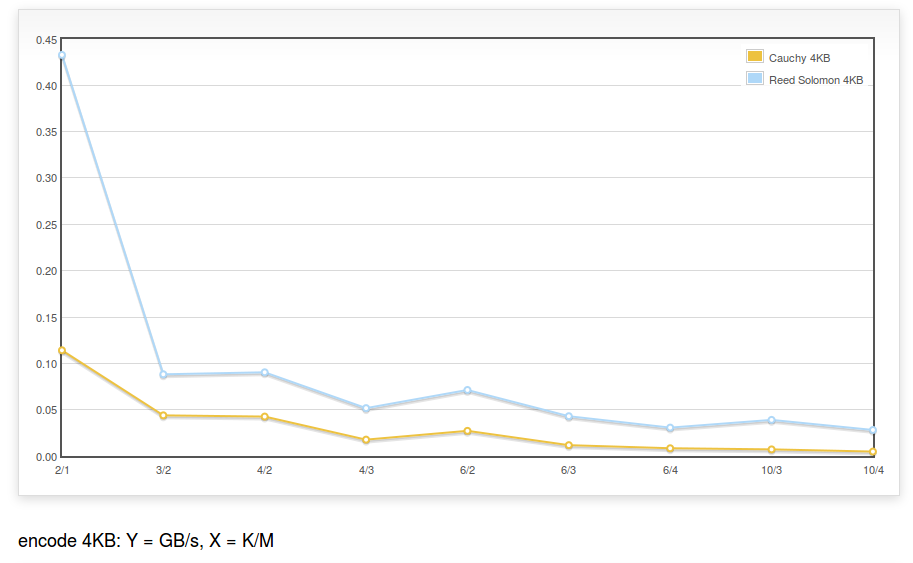

The benchmark described for Intel Xeon is run on a Highbank ARMv7 Processor rev 0 (v7l) (the processor maker was Calxeda), using the same codebase:

The encoding speed is ~450MB/s for K=2,M=1 (i.e. a RAID5 equivalent) and ~25MB/s for K=10,M=4.

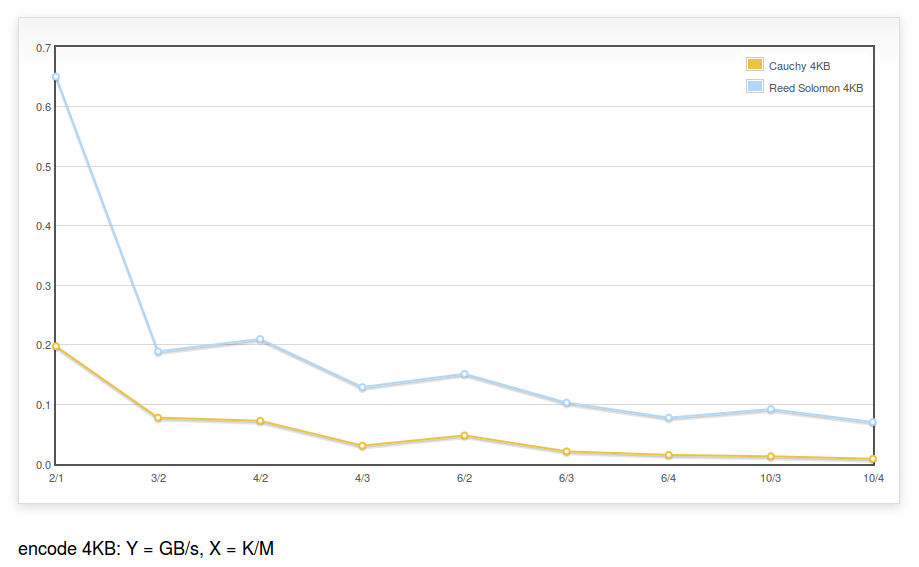

It is also run on a Highbank ARMv7 Processor rev 2 (v7l) (note the 2):

The encoding speed is ~650MB/s for K=2,M=1 (i.e. a RAID5 equivalent) and ~75MB/s for K=10,M=4.

Note: The code of the erasure code plugin does not contain any NEON optimizations.

Continue reading “Ceph erasure code jerasure plugin benchmarks (Highbank ARMv7)”

workaround DNSError when running teuthology-suite

Note: this is only useful for people with access to the Ceph lab.

When running Ceph integration tests with teuthology, the run may fail because of a DNS resolution problem, with:

$ ./virtualenv/bin/teuthology-suite --base ~/software/ceph/ceph-qa-suite \
  --suite upgrade/firefly-x \
  --ceph wip-8475 --machine-type plana \
  --email loic@dachary.org --dry-run
2014-06-27 INFO:urllib3.connectionpool:Starting new HTTP connection (1): ...
requests.exceptions.ConnectionError: HTTPConnectionPool(host='gitbuilder.ceph.com', port=80): Max retries exceeded with url: /kernel-rpm-centos6-x86_64-basic/ref/testing/sha1 (Caused by: [Errno 3] name does not exist)

It may be caused by DNS propagation problems, in which case querying the ceph.com name servers directly may work better. If running bind, adding the following to /etc/bind/named.conf.local will forward ceph.com DNS queries to its primary server (NS1.DREAMHOST.COM, i.e. 66.33.206.206) and queries for the ipmi.sepia.ceph.com and front.sepia.ceph.com zones to the sepia lab servers (10.214.128.4 and 10.214.128.5), assuming /etc/resolv.conf is set to use the local DNS server first:

zone "ceph.com." {
    type forward;
    forward only;
    forwarders { 66.33.206.206; };
};

zone "ipmi.sepia.ceph.com" {
    type forward;
    forward only;
    forwarders {
        10.214.128.4;
        10.214.128.5;
    };
};

zone "front.sepia.ceph.com" {
    type forward;
    forward only;
    forwarders {
        10.214.128.4;
        10.214.128.5;
    };
};
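Since the three zone stanzas differ only by zone name and forwarders, they could also be generated. A hypothetical Python helper that renders the same forward-only zones; its output would still have to be pasted into /etc/bind/named.conf.local.

```python
def forward_zone(name, forwarders):
    """Render a forward-only BIND zone stanza for the given forwarders."""
    lines = [f'zone "{name}" {{', "    type forward;", "    forward only;", "    forwarders {"]
    lines += [f"        {address};" for address in forwarders]
    lines += ["    };", "};"]
    return "\n".join(lines)


# Zones and forwarders taken from the configuration above.
LAB_SERVERS = ["10.214.128.4", "10.214.128.5"]
ZONES = {
    "ceph.com.": ["66.33.206.206"],
    "ipmi.sepia.ceph.com": LAB_SERVERS,
    "front.sepia.ceph.com": LAB_SERVERS,
}

if __name__ == "__main__":
    print("\n\n".join(forward_zone(name, servers) for name, servers in ZONES.items()))
```

After editing the file, `named-checkconf` can be used to validate it and `rndc reload` to make bind pick up the change.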

The front.sepia.ceph.com zone will resolve machine names allocated by teuthology-lock and used as targets such as:

targets:
  ubuntu@saya001.front.sepia.ceph.com: ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABA ... 8r6pYSxH5b

Locally repairable codes and implied parity

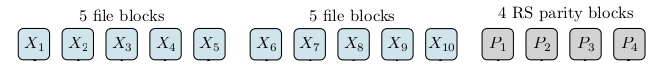

When a Ceph OSD is lost in an erasure coded pool, its content can be recovered using the other OSDs.

For instance, if OSD X3 is lost, the chunks on OSDs X1, X2, X4 to X10 and P1 to P4 are retrieved by the primary OSD, and the erasure code plugin uses them to rebuild the content of X3.

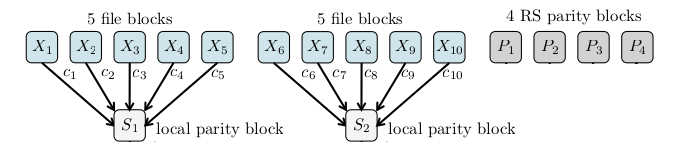

Locally repairable codes are designed to lower the bandwidth requirements when recovering from the loss of a single OSD. A local parity block is calculated for each group of five blocks: S1 and S2. When the X3 OSD is lost, instead of retrieving blocks from 13 OSDs, it is enough to retrieve X1, X2, X4, X5 and S1, that is 5 OSDs.
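The description above does not say how S1 and S2 are computed. Assuming, for illustration only, that a local parity block is the byte-wise XOR of its five data blocks, the local repair of X3 looks like this (block contents are made up and `xor_blocks` is a hypothetical helper):

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks (assumed local parity function)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Made-up 4-byte contents for X1..X5, the first local group.
group = [bytes([i, i + 1, i + 2, i + 3]) for i in range(0, 50, 10)]
s1 = xor_blocks(group)  # local parity block S1

# X3 (index 2) is lost: XOR the four surviving blocks with S1 to rebuild it.
survivors = group[:2] + group[3:]
rebuilt_x3 = xor_blocks(survivors + [s1])
```

Only five blocks (X1, X2, X4, X5 and S1) are read, instead of the thirteen needed by the global code alone.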

In some cases, local parity blocks can help recover from the loss of more blocks than any individual encoding function can. In the example above, let's say five blocks are lost: X1, X2, X3, X4 and X8. The block X8 can be recovered from X6, X7, X9, X10 and S2. Now that only four blocks are missing, the global parity blocks P1 to P4 are enough to recover them. The combined effect of the local and global parity blocks is as if there were an implied extra parity block.
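The reasoning can be checked mechanically. The sketch below models the layout above and repeatedly applies local repairs (a group with a single missing block) and global repairs (at most four missing among X1 to X10 and P1 to P4), assuming the K=10, M=4 code can rebuild any four erasures, as Reed-Solomon can. It is a conservative, hypothetical model: it never combines local and global equations, so it may report some loss patterns as unrecoverable even though they could be solved.

```python
# Layout from the example: two local groups of five data blocks, each with
# a local parity block, plus four global parity blocks P1..P4.
LOCAL_GROUPS = [
    {"X1", "X2", "X3", "X4", "X5", "S1"},
    {"X6", "X7", "X8", "X9", "X10", "S2"},
]
DATA = {f"X{i}" for i in range(1, 11)}
GLOBAL_PARITY = {f"P{i}" for i in range(1, 5)}


def recoverable(lost):
    """True if all lost blocks can be rebuilt by alternating local and global repairs."""
    missing = set(lost)
    while missing:
        progress = False
        for group in LOCAL_GROUPS:
            gone = group & missing
            if len(gone) == 1:  # a single loss in a group is repaired locally
                missing -= gone
                progress = True
        global_missing = missing & (DATA | GLOBAL_PARITY)
        if global_missing and len(global_missing) <= len(GLOBAL_PARITY):
            missing -= global_missing  # the K=10, M=4 code rebuilds the rest
            progress = True
        if not progress:
            return False
    return True
```

With this model, losing X1, X2, X3, X4 and X8 is recoverable, whereas losing X1 to X7 is not.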

Ceph erasure code jerasure plugin benchmarks

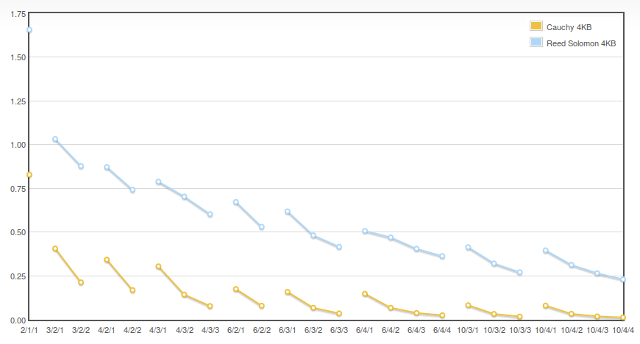

On an Intel(R) Xeon(R) CPU E5-2630 0 @ 2.30GHz processor (and all SIMD-capable Intel processors), the Reed Solomon Vandermonde technique of the jerasure plugin, which is the default in Ceph Firefly, performs better.

The chart is for decoding erasure coded objects. The Y axis is in GB/s and the X axis labels are K/M/erasures. For instance 10/3/2 is K=10, M=3 and 2 erasures, meaning each object is sliced into K=10 equal chunks, M=3 parity chunks have been computed, and the jerasure plugin is used to recover from the loss of two chunks (i.e. 2 erasures).

Continue reading “Ceph erasure code jerasure plugin benchmarks”