A datacenter containing three hosts of a non-profit Ceph and OpenStack cluster suddenly lost connectivity, and it could not be restored within 24 hours. The corresponding OSDs were marked out manually. The Ceph pool dedicated to this datacenter became unavailable, as expected. However, a pool that was supposed to have at most one copy per datacenter turned out to have a faulty CRUSH ruleset. As a result, some placement groups in this pool were stuck.

    $ ceph -s
    ...
    health HEALTH_WARN 1 pgs degraded; 7 pgs down; 7 pgs peering; 7 pgs recovering; 7 pgs stuck inactive; 15 pgs stuck unclean; recovery 184/1141208 degraded (0.016%)
    ...

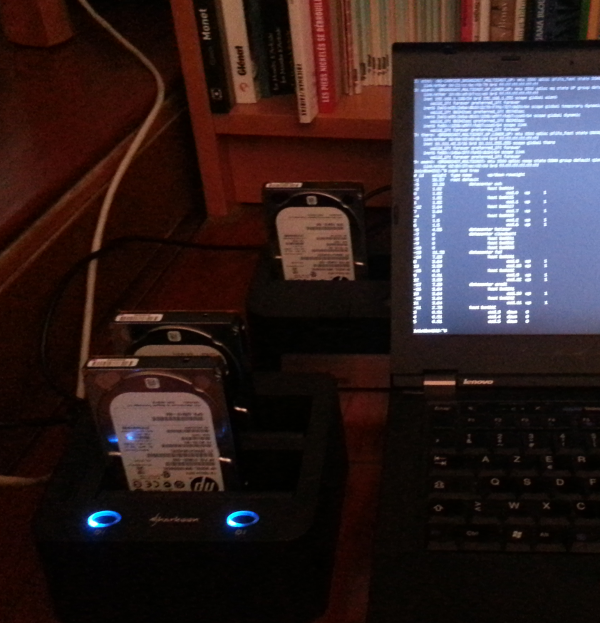

The disks are extracted from the rackable machines and plugged into a laptop via USB shoes. To prevent data loss, their content is copied to a single machine via

    mount /dev/sdc1 /mnt/
    rsync -aX /mnt/ /var/lib/ceph/osd/ceph-$(cat /mnt/whoami)/

where -X copies the extended attributes (xattrs). The journal is copied from the dedicated partition with

    rm /var/lib/ceph/osd/ceph-$(cat /mnt/whoami)/journal
    dd if=/mnt/journal of=/var/lib/ceph/osd/ceph-$(cat /mnt/whoami)/journal

where the rm removes the journal symbolic link that rsync copied to the destination, and the dd copies the actual content of the journal.
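With several disks to process, it can help to print the three commands for each disk and review them before running anything as root. The helper below is only a sketch: the function name and its two arguments are hypothetical, and it assumes the layout described above (a whoami file holding the OSD id and a journal symbolic link at the root of the data partition).

```shell
#!/bin/sh
# Hypothetical helper (not part of the original procedure): print the copy
# commands for one mounted OSD disk so they can be reviewed before being run.
#   $1: mount point of the OSD data partition, e.g. /mnt
#   $2: root of the destination OSD directories, e.g. /var/lib/ceph/osd
print_osd_copy_commands() {
    mnt=${1%/}
    dest_root=${2%/}
    # whoami holds the numeric id of the OSD stored on this disk
    id=$(cat "$mnt/whoami") || return 1
    dest="$dest_root/ceph-$id"
    # copy the data directory, preserving extended attributes (-X)
    echo "rsync -aX $mnt/ $dest/"
    # rsync copies the journal symlink as-is: replace it with the journal content
    echo "rm $dest/journal"
    echo "dd if=$mnt/journal of=$dest/journal"
}
```

For example, print_osd_copy_commands /mnt /var/lib/ceph/osd prints the same three commands as above, with the OSD id already substituted.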

The /etc/ceph/ceph.conf file is copied from the live cluster to the temporary host bm4202, and the latest Emperor release is installed. The OSDs are started one by one to observe their progress:

    $ start ceph-osd id=3
    $ tail -f /var/log/ceph/ceph-osd.3.log
    2014-07-04 16:55:12.217359 7fa264bb1800 0 ceph version 0.72.2 (a913ded2ff138aefb8cb84d347d72164099cfd60), process ceph-osd, pid 450
    ...
    $ ceph osd tree
    ...
    -11 2.73 host bm4202
    3 0.91 osd.3 up 0
    4 0.91 osd.4 up 0
    5 0.91 osd.5 up 0

After a while, the necessary information is recovered from the OSDs marked out and there are no more placement groups in the stuck state.

    $ ceph -s
    ...
    pgmap v12582933: 856 pgs: 856 active+clean
    ...
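Rather than rereading the status by hand, the check can be scripted. The function below is a rough sketch with a hypothetical name, and it assumes the one-line pgmap format quoted above ("N pgs: M active+clean"); other Ceph releases print the pgmap differently, and combined states such as active+clean+scrubbing are not counted as clean here.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when the status text read on stdin reports
# every placement group as active+clean.
# Assumes the one-line format "<total> pgs: <n> active+clean ...".
all_pgs_active_clean() {
    awk '
        /pgs:/ {
            for (i = 1; i < NF; i++) {
                # the number just before "pgs:" is the total PG count
                if ($(i + 1) == "pgs:") total = $i
                # the number just before "active+clean" is the clean PG count
                if ($(i + 1) ~ /^active\+clean[,;]?$/) clean = $i
            }
        }
        END { exit !(total > 0 && total == clean) }
    '
}
```

It could be used as ceph -s | all_pgs_active_clean && echo "recovery complete".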

Can you elaborate on what was wrong with their CRUSH map?

The failure domain of the CRUSH ruleset used for the pool was the host. As a result, some PGs had both copies of a given object within the same datacenter. The pool should have been associated with a CRUSH ruleset that has a datacenter failure domain. In other words, a CRUSH ruleset where no two copies of the same object are located in the same datacenter.
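To make the difference concrete, here is a sketch of what such a rule could look like in a decompiled CRUSH map, using the rule syntax of that era. The rule name, the ruleset number 3 and the root bucket named default are assumptions for illustration, not values from the cluster discussed here. The small shell function only prints the rule text; the single line that matters is the chooseleaf step, whose type is datacenter instead of host.

```shell
#!/bin/sh
# Hypothetical helper: print a replicated CRUSH rule that places each copy
# in a distinct bucket of the given type.
#   $1: rule name, $2: ruleset id, $3: failure domain (bucket type)
# The root bucket "default" is an assumption; use the root of your own map.
print_crush_rule() {
    cat <<EOF
rule $1 {
	ruleset $2
	type replicated
	min_size 1
	max_size 10
	step take default
	step chooseleaf firstn 0 type $3
	step emit
}
EOF
}

# Illustrative values only
print_crush_rule one_per_datacenter 3 datacenter
```

The printed rule would be added to the text map obtained with ceph osd getcrushmap and crushtool -d, recompiled with crushtool -c, injected with ceph osd setcrushmap, and then assigned to the pool with ceph osd pool set (crush_ruleset on the release used here).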

I have a Ceph cluster with 6 nodes but I lost all of my monitors. I tried to bring the monitors back, without success. No ceph command responds, so none of my OSDs start, but the devices show as mounted successfully.

Is there a way to recreate the monitors and get the rbd data back from all the OSDs?

Thanks