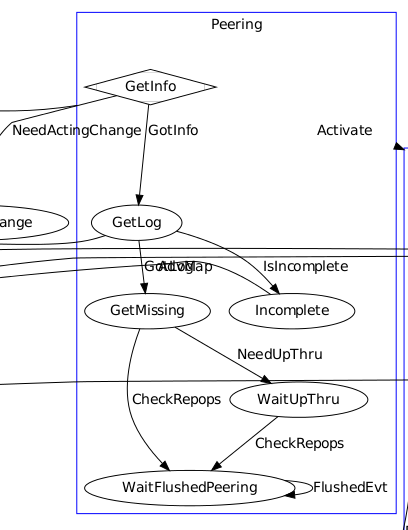

The Peering state machine (based on the Boost Statechart Library) is in charge of making sure the OSDs supporting the placement groups are available, as described in the high-level description of the peering process. It then moves to the Active state machine, which brings the placement group into a consistent state and handles normal operations.

The placement groups rely on OSDs to exchange information. For instance, when the primary OSD receives a placement group creation message, it forwards the information to the placement group, which translates it into events for the newly created state machine.

The OSDs also provide a work queue dedicated to peering where events are inserted to be processed asynchronously.
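The division of labor above (messages translated into events, events queued, a state machine driven asynchronously) can be illustrated with a toy model. This is a minimal Python sketch, not Ceph's code, which is C++ built on the Boost Statechart Library. The `PlacementGroup` class, the message names (`pg_create`, `replicas_available`) and the event names (`Create`, `AllReplicasUp`) are hypothetical.

```python
import queue
from enum import Enum, auto
from typing import Dict


class State(Enum):
    INITIAL = auto()
    PEERING = auto()
    ACTIVE = auto()


class PlacementGroup:
    """Toy state machine: events are queued on arrival and processed later."""

    # (current state, event) -> next state; the event names are hypothetical
    TRANSITIONS = {
        (State.INITIAL, "Create"): State.PEERING,
        (State.PEERING, "AllReplicasUp"): State.ACTIVE,
    }

    def __init__(self, pgid: str, peering_wq: queue.Queue) -> None:
        self.pgid = pgid
        self.state = State.INITIAL
        self.peering_wq = peering_wq

    def handle_message(self, message: str) -> None:
        # Translate an OSD message into an event and queue it for asynchronous processing.
        # Raises KeyError for messages this sketch does not know about.
        event = {"pg_create": "Create", "replicas_available": "AllReplicasUp"}[message]
        self.peering_wq.put((self.pgid, event))

    def process(self, event: str) -> None:
        # Events that do not apply to the current state are ignored in this sketch.
        self.state = self.TRANSITIONS.get((self.state, event), self.state)


def drain(peering_wq: queue.Queue, pgs: Dict[str, PlacementGroup]) -> None:
    """Process every queued event, as a peering worker would."""
    while not peering_wq.empty():
        pgid, event = peering_wq.get()
        pgs[pgid].process(event)
```

Calling `handle_message("pg_create")` leaves the placement group in `INITIAL` until a worker calls `drain`, which is the point of the work queue: receiving a message and acting on it are decoupled.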


GLOCK is my favorite Cloud stack

GLOCK stands for GNU, Linux, OpenStack, Ceph and KVM. GNU is the free operating system that guarantees my freedom and independence, Linux is versatile enough to accommodate the heterogeneous hardware I’m using, OpenStack allows me to cooperatively run an IaaS with my friends and the non-profits I volunteer for, Ceph gives eternal life to my data and KVM will be maintained for as long as I live.

Ceph disk requirements will be lower: a new backend is coming

When evaluating Ceph to run a new storage service, the replication factor only matters once the hardware provisioned from the start is almost full. That may happen months after the first user starts to store data. In the meantime, a new storage backend (erasure coded) that reduces hardware requirements by up to 50% is being developed in Ceph.

Saving disk space from the beginning does not matter: the space is not used anyway. The question is when the erasure coded backend will be ready to double the usable capacity of the storage already in place.
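The "up to 50%" and "double" figures follow from simple capacity arithmetic. A Python sketch, assuming three-way replication on one side and an erasure code that splits each object into k data chunks plus m coding chunks on the other. The profile k=4, m=2 and the 300TB of raw disk are illustrative assumptions; k=4, m=2 is chosen because, like three replicas, it tolerates the loss of two chunks.

```python
def usable_with_replication(raw_tb: float, size: int) -> float:
    """Each object is stored `size` times."""
    return raw_tb / size


def usable_with_erasure_code(raw_tb: float, k: int, m: int) -> float:
    """Each object is split into k data chunks plus m coding chunks."""
    return raw_tb * k / (k + m)


raw = 300.0  # TB of disks already in place (illustrative)
replicated = usable_with_replication(raw, size=3)
erasure_coded = usable_with_erasure_code(raw, k=4, m=2)
print(f"replication: {replicated:.0f} TB, erasure code: {erasure_coded:.0f} TB")
# prints "replication: 100 TB, erasure code: 200 TB"
```

Replication needs 3 bytes of disk per usable byte and this profile needs (4 + 2) / 4 = 1.5, hence half the hardware for the same data, or twice the usable capacity on the same hardware.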


Merging Ceph placement group logs

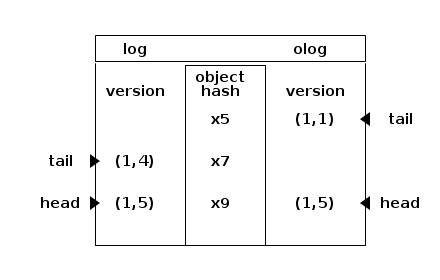

Ceph stores objects in pools which are divided into placement groups. When an object is created, modified or deleted, the placement group logs the operation. If an OSD supporting the placement group is temporarily unavailable, the logs are used for recovery when it comes back.

The column to the left shows the log entries, ordered oldest first. For instance, 1,4 is epoch 1 version 4. The tail, displayed to the left, points to the oldest entry of the log and the head points to the most recent one. The column to the right shows the olog: its entries are authoritative and are merged into the log, which may require discarding divergent entries. Each log entry relates to an object, represented by its hash in the middle column: x5, x7, x9.

The use cases implemented by PGLog::merge_log are demonstrated by individual test cases.
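PGLog::merge_log covers many cases, which is why each has its own test case. The Python sketch below models only the simplest one and is not a port of it. It assumes that entries are (epoch, version, object hash) tuples ordered oldest first, that eversions compare lexicographically, and that every local entry at or after the olog tail which the olog does not contain is divergent. `Entry` and `merge_log` are hypothetical names.

```python
from typing import List, NamedTuple, Tuple


class Entry(NamedTuple):
    epoch: int
    version: int
    obj: str  # object hash, e.g. "x5"

    def eversion(self) -> Tuple[int, int]:
        return (self.epoch, self.version)


def merge_log(log: List[Entry], olog: List[Entry]) -> Tuple[List[Entry], List[Entry]]:
    """Return (merged log, divergent local entries); both logs are ordered oldest first."""
    if not olog:
        return list(log), []
    otail = olog[0].eversion()
    authoritative = {e.eversion() for e in olog}
    # Local history older than the olog tail is not contradicted by the olog: keep it.
    older = [e for e in log if e.eversion() < otail]
    # Local entries in the range covered by the olog but unknown to it are divergent.
    divergent = [e for e in log if e.eversion() >= otail and e.eversion() not in authoritative]
    return older + list(olog), divergent
```

With `log = [(1,1,x5), (1,2,x7), (1,3,x9)]` and `olog = [(1,2,x7), (2,3,x5)]`, the merged log is `(1,1,x5), (1,2,x7), (2,3,x5)` and `(1,3,x9)` is divergent: the object x9 is the one that has to be brought back in line with the authoritative copy. This sketch only reports it.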


Using the largest OpenStack tenant to define an architecture that scales out

The service offering of public cloud providers is designed to suit as many potential customers as possible. It would be impossible to design the underlying architecture (hardware and software) to accommodate the casual individual as well as the needs of CERN. No matter how big the cloud provider, there are users for whom it is both more cost-effective and more efficient to design a private cloud.

The size of the largest user could be used to simplify the architecture and resolve bottlenecks as it scales. The hardware and software are designed to create a production unit that is N times the size of the largest user. For instance, if the largest user requires 1PB of storage, 1,000 virtual machines and 10Gb/s of sustained internet transit, the production unit could be designed to accommodate a maximum of N = 10 users of this size. If the user base grows but the size of the largest user does not change, additional independent production units are built. All production units have the same size and can be multiplied.
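The sizing rule can be written down as arithmetic. A Python sketch using the figures above (N = 10 and a largest user of 1PB, 1,000 virtual machines and 10Gb/s); the aggregate demand of 25PB, 18,000 virtual machines and 120Gb/s is a made-up example, and `Demand`, `unit_capacity` and `units_needed` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Demand:
    """Resources used by one user (tenant) or offered by one production unit."""
    storage_pb: float
    vms: int
    transit_gbps: float


def unit_capacity(largest_user: Demand, n: int) -> Demand:
    """A production unit is sized to hold N users as big as the largest one."""
    return Demand(largest_user.storage_pb * n, largest_user.vms * n, largest_user.transit_gbps * n)


def units_needed(total: Demand, unit: Demand) -> int:
    """Lower bound on identical production units: the scarcest resource decides."""
    return max(
        math.ceil(total.storage_pb / unit.storage_pb),
        math.ceil(total.vms / unit.vms),
        math.ceil(total.transit_gbps / unit.transit_gbps),
    )


largest = Demand(storage_pb=1, vms=1000, transit_gbps=10)
unit = unit_capacity(largest, n=10)  # 10PB, 10,000 VMs, 100Gb/s
total = Demand(storage_pb=25, vms=18000, transit_gbps=120)  # hypothetical user base
print(units_needed(total, unit))  # 3: storage is the bottleneck
```

Dividing total demand by unit capacity only gives a lower bound: it assumes users can be packed perfectly. Because each user is confined to a single production unit, real placement may need more units.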

Each production unit is independent of the others and can operate standalone. A user confined to a production unit does not require interactions, directly or indirectly, with other production units. While this is true most of the time, a live migration path must be opened temporarily between production units to balance their load. For instance, when the existing production units are too full and a new production unit becomes operational, some users are migrated to the new production unit.

Although block and instance live migration are supported within an OpenStack cluster, this architecture would require the ability to live migrate blocks and instances between unrelated OpenStack clusters. In the meantime, cells and aggregates can be used. The user expects this migration to happen transparently when the provider does it behind the scenes. But if she/he wants to move from one OpenStack provider to another, the same mechanism could eventually be used. Once the user (that is, the tenant in OpenStack parlance) is migrated, the credentials can be removed from the original cluster.

Ceph placement groups backfilling

Ceph stores objects in pools which are divided into placement groups.

+---------------------------- pool a ----+
|+----- placement group 1 -------------+ |
||+-------+  +-------+                 | |
|||object |  |object |                 | |
||+-------+  +-------+                 | |
|+-------------------------------------+ |
|+----- placement group 2 -------------+ |
||+-------+  +-------+                 | |
|||object |  |object |   ...           | |
||+-------+  +-------+                 | |
|+-------------------------------------+ |
|               ....                     |
|                                        |
+----------------------------------------+

+---------------------------- pool b ----+
|+----- placement group 1 -------------+ |
||+-------+  +-------+                 | |
|||object |  |object |                 | |
||+-------+  +-------+                 | |
|+-------------------------------------+ |
|+----- placement group 2 -------------+ |
||+-------+  +-------+                 | |
|||object |  |object |   ...           | |
||+-------+  +-------+                 | |
|+-------------------------------------+ |
|               ....                     |
|                                        |
+----------------------------------------+

...

The placement group relies on OSDs to store the objects. OSDs are daemons running on machines where storage is available. For instance, objects from placement group 1 of pool a are stored in files managed by an OSD on a designated disk. A placement group with three replicas has three OSDs at its disposal: one is the primary (OSD 0) and the other two store copies (OSD 1 and OSD 2).

+-------- placement group 1 -----------+
|+----------------+ +----------------+ |
|| object A       | | object B       | |
|+----------------+ +----------------+ |
+---+-------------+-----------+--------+
    |             |           |
    |             |           |
  OSD 0         OSD 1       OSD 2
 +------+      +------+    +------+
 |+---+ |      |+---+ |    |+---+ |
 || A | |      || A | |    || A | |
 |+---+ |      |+---+ |    |+---+ |
 |+---+ |      |+---+ |    |+---+ |
 || B | |      || B | |    || B | |
 |+---+ |      |+---+ |    |+---+ |
 +------+      +------+    +------+

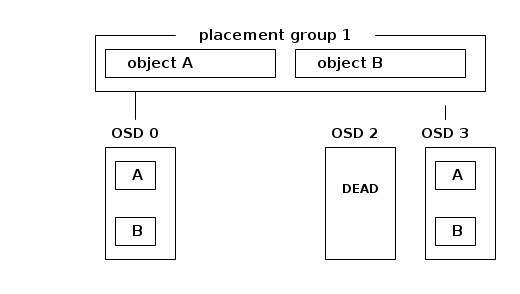

Whenever an OSD dies, the placement group information and the associated objects stored on this OSD are lost and must be reconstructed on another OSD.

+-------- placement group 1 -----------+
|+----------------+ +----------------+ |
|| object A       | | object B       | |
|+----------------+ +----------------+ |
+---+-------------+-----------------+--+
    |             |                 |
    |             |                 |
  OSD 0         OSD 1     OSD 2   OSD 3
 +------+      +------+ +------+ +------+
 |+---+ |      |+---+ | |      | |+---+ |
 || A | |      || A | | |      | || A | |
 |+---+ |      |+---+ | | DEAD | |+---+ |
 |+---+ |      |+---+ | |      | |+---+ >----- last_backfill
 || B | |      || B | | |      | || B | |
 |+---+ |      |+---+ | |      | |+---+ |
 +------+      +------+ +------+ +------+

The objects from the primary (OSD 0) are copied to OSD 3: this is called backfilling. It involves the primary (OSD 0) and the backfill peer (OSD 3) scanning over their content and copying the objects that are different or missing from the primary to the backfill peer. Because this may take a long time, a last_backfill attribute is tracked for each local placement group copy (i.e. the placement group information that resides on OSD 3), indicating how far the local copy has been backfilled. When the copy is complete, last_backfill is hobject_t::max().

      OSD 3
+----------------+
|+--- object --+ |
|| name : B    | |
|| key  : 2    | |
|+-------------+ |
|+--- object --+ >----- last_backfill
|| name : A    | |
|| key  : 5    | |
|+-------------+ |
|                |
|      ....      |
+----------------+

Object names are hashed into an integer that can be used to order them. For instance, object B above has been hashed to key 2 and object A to key 5. The last_backfill attribute of the placement group marks the boundary between the objects that have already been copied from other OSDs and those still waiting to be copied. The objects lower than last_backfill have been copied (object B above) and the objects greater than last_backfill are going to be copied (object A above).
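The role of last_backfill can be shown with a toy model. A minimal Python sketch, assuming objects are identified by their hash key, that last_backfill holds the key of the last object copied (so that key itself counts as backfilled), and that `math.inf` stands in for hobject_t::max(). `BackfillTarget` and `backfill` are hypothetical names, and the sketch ignores objects that exist only on the backfill peer.

```python
import math
from typing import Dict, Iterator

# Stands in for hobject_t::max(): every object has been backfilled.
MAX = math.inf


class BackfillTarget:
    """Hypothetical model of the placement group copy on the backfill peer (OSD 3)."""

    def __init__(self) -> None:
        self.objects: Dict[int, str] = {}  # hash key -> object content
        self.last_backfill: float = -math.inf  # nothing copied yet

    def has_been_backfilled(self, key: int) -> bool:
        # Assumption: last_backfill is the key of the last object copied, so it is included.
        return key <= self.last_backfill


def backfill(primary: Dict[int, str], target: BackfillTarget, batch: int = 1) -> Iterator[float]:
    """Copy missing or different objects in hash order, yielding last_backfill after each batch."""
    keys = sorted(primary)
    for start in range(0, len(keys), batch):
        chunk = keys[start:start + batch]
        for key in chunk:
            if target.objects.get(key) != primary[key]:
                target.objects[key] = primary[key]
        target.last_backfill = chunk[-1]
        yield target.last_backfill
    target.last_backfill = MAX
    yield MAX
```

With `primary = {2: "B", 5: "A"}`, the first step yields 2: object B (key 2) is backfilled while object A (key 5) is not yet, which is the situation in the diagram above. Because progress is recorded after each batch, an interrupted backfill can resume from last_backfill instead of starting over.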

Backfilling is expensive, and placement groups do not rely on it exclusively to recover from failure. Placement groups log their changes, for instance the deletion or the modification of an object. When an OSD is unavailable for a short period of time, it may be cheaper to replay the logs.
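The trade-off between replaying the log and backfilling comes down to whether the log still holds every change the returning OSD missed. A simplified Python sketch, assuming eversions are (epoch, version) pairs as in the log merging post above, and that the primary's log keeps every entry from `log_tail` to `log_head` while older entries have been trimmed. `choose_recovery` is a hypothetical helper; the real decision is taken during peering and involves more state.

```python
from typing import Tuple

EVersion = Tuple[int, int]  # (epoch, version): (1, 4) is epoch 1 version 4


def choose_recovery(peer_last_update: EVersion, log_tail: EVersion, log_head: EVersion) -> str:
    """Pick how a returning OSD catches up with the primary's placement group log."""
    if peer_last_update == log_head:
        return "up to date"
    if peer_last_update >= log_tail:
        # Every change the peer missed is still in the log: replaying it is enough.
        return "log replay"
    # Some changes the peer missed were trimmed from the log: copy the objects instead.
    return "backfill"
```

A short outage leaves the peer's last update inside the log window, so `choose_recovery((1, 3), (1, 2), (2, 6))` returns `"log replay"`. A longer one pushes it before the tail and forces a backfill.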

Bio++: efficient, extensible libraries and tools for computational molecular evolution

Efficient algorithms and programs for the analysis of the ever-growing amount of biological sequence data are strongly needed in the genomics era. The pace at which new data and methodologies are generated calls for the use of pre-existing, optimized – yet extensible – code, typically distributed as libraries or packages. This motivated the Bio++ project, aiming at developing a set of C++ libraries for sequence analysis, phylogenetics, population genetics and molecular evolution. The main attractiveness of Bio++ is the extensibility and reusability of its components through its object-oriented design, without compromising on the computer-efficiency of the underlying methods. We present here the second major release of the libraries, which provides an extended set of classes and methods. These extensions notably provide built-in access to sequence databases and new data structures for handling and manipulating sequences from the omics era, such as multiple genome alignments and sequencing reads libraries. More complex models of sequence evolution, such as mixture models and generic n-tuples alphabets, are also included.

The article was published May 21st, 2013. Read the full article: Bio++: efficient, extensible libraries and tools for computational molecular evolution

Virtualizing legacy hardware in OpenStack

A five-year-old machine is being decommissioned. It hosts fourteen vservers on Debian GNU/Linux lenny, running a 2.6.26-2-vserver-686-bigmem Linux kernel. The April non-profit relies on these services (mediawiki, pad, mumble, etc.) for the benefit of its 5,000 members and many working groups. Instead of migrating each vserver individually to an OpenStack instance, it was decided to copy the whole vserver host over to a single OpenStack instance.

The old hardware has 8GB of RAM, a 150GB disk and two Xeon processors totaling 8 cores. The munin statistics show that no additional memory is needed, the disk is half full and an average of one core is used at all times. An OpenStack instance with 8GB of RAM, a 150GB disk and two cores is prepared. The instance is booted from a 150GB volume placed on the compute node hosting the instance, to get maximum disk I/O speed.

After the volume is created, it is mounted on the OpenStack node and the disk of the old machine is rsync’ed to it. The instance is then booted after modifying a few files such as fstab. The OpenStack node is in the same rack and on the same switch as the old hardware. The IP is removed from the interface of the old hardware and bound to the OpenStack instance. Because nova-network runs with multi-host activated, the IP is bound to the interface of the OpenStack node, which can take over immediately. The public interface of the node is set as an ARP proxy to advertise the bridge to which the instance is connected. The security groups of the instance are disabled (by opening all protocols and ports) because a firewall is running in the instance.
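On Linux, making an interface answer ARP requests on behalf of other hosts is controlled by the `net.ipv4.conf.<interface>.proxy_arp` sysctl. A minimal Python sketch of setting it, equivalent to `sysctl -w net.ipv4.conf.<interface>.proxy_arp=1`. The helper name is hypothetical, the interface name is whatever the node's public interface is called (`eth0` below is an assumption), and `proc_root` only exists so the function can be exercised outside /proc.

```python
from pathlib import Path


def enable_proxy_arp(iface: str, proc_root: str = "/proc/sys") -> Path:
    """Write 1 to net/ipv4/conf/<iface>/proxy_arp (requires root on a real host)."""
    path = Path(proc_root) / "net" / "ipv4" / "conf" / iface / "proxy_arp"
    path.write_text("1\n")
    return path


# On the OpenStack node, as root (interface name assumed):
# enable_proxy_arp("eth0")
```

The setting is lost at reboot unless it is also added to /etc/sysctl.conf.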


OpenStack Upstream University training

Upstream University training for OpenStack contributors includes a live session where students contribute to a Lego town. They have to comply with the coding standards imposed by the existing buildings. More than fifteen participants created an impressive city within a few hours during the session held in May 2013. The images speak for themselves. The next sessions will be in Paris in June and Portland in July.


Installing ceph with ceph-deploy

A ceph-deploy package is created for Ubuntu raring and installed with:

dpkg -i ceph-deploy_0.0.1-1_all.deb

An SSH key is generated without a passphrase and copied to the root .ssh/authorized_keys file of each host on which ceph-deploy will act:

# ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
ca:1f:c3:ce:8d:7e:27:54:71:3b:d7:31:32:14:ba:68 root@bm0014.the.re
# for i in 12 14 15
do
  ssh bm00$i.the.re cat \>\> .ssh/authorized_keys < .ssh/id_rsa.pub
done

Each host is installed with Ubuntu raring and has a spare, unused disk at /dev/sdb. The ceph packages are installed with:

ceph-deploy install bm0012.the.re bm0014.the.re bm0015.the.re

The short name of each host (its FQDN without the domain) is added to /etc/hosts on each host, because ceph-deploy assumes that it exists:

for host in bm0012.the.re bm0014.the.re bm0015.the.re
do
  getent hosts bm0012.the.re bm0014.the.re bm0015.the.re | \
    sed -e 's/\.the\.re//' | ssh $host cat \>\> /etc/hosts
done

The ceph cluster configuration is created with:

# ceph-deploy new bm0012.the.re bm0014.the.re bm0015.the.re

and the corresponding monitors (mon) are deployed with:

ceph-deploy mon create bm0012.the.re bm0014.the.re bm0015.the.re

Even after the command returns, it takes a few seconds for the keys to be generated on each host; the ceph-mon process shows when this is complete. Before creating the OSDs, the keys are obtained from a monitor with:
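Instead of guessing "a few seconds", the wait can be scripted. A minimal Python sketch that polls until a set of files exists, to be run on a monitor host before `gatherkeys`. The helper is hypothetical, and the two keyring paths in `DEFAULT_KEYS` are assumptions about where this Ceph release writes the admin and OSD bootstrap keys; check them on your hosts before relying on it.

```python
import os
import time
from typing import Sequence

# Assumed key locations: verify them for your Ceph release.
DEFAULT_KEYS = (
    "/etc/ceph/ceph.client.admin.keyring",
    "/var/lib/ceph/bootstrap-osd/ceph.keyring",
)


def wait_for_files(paths: Sequence[str] = DEFAULT_KEYS, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll until every path exists; return False if the timeout expires first."""
    deadline = time.monotonic() + timeout
    while True:
        if all(os.path.exists(p) for p in paths):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Returning False instead of raising lets a wrapper script decide whether to retry or give up.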

ceph-deploy gatherkeys bm0012.the.re

The osds are then created with:

ceph-deploy osd create bm0012.the.re:/dev/sdb bm0014.the.re:/dev/sdb bm0015.the.re:/dev/sdb

After a few seconds the cluster stabilizes, as shown with:

# ceph -s
health HEALTH_OK
monmap e1: 3 mons at {bm0012=188.165:6789/0,bm0014=188.165:6789/0,bm0015=188.165:6789/0}, election epoch 24, quorum 0,1,2 bm0012,bm0014,bm0015
osdmap e14: 3 osds: 3 up, 3 in
pgmap v106: 192 pgs: 192 active+clean; 0 bytes data, 118 MB used, 5583 GB / 5583 GB avail
mdsmap e1: 0/0/1 up
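"Stabilizes" here means HEALTH_OK, every OSD up and in, and every placement group active+clean. A minimal Python sketch that checks this on the plain-text `ceph -s` output, assuming exactly the layout shown above. The output format changes between Ceph releases, so the regular expressions are assumptions to adjust, and `parse_ceph_status` and `is_stable` are hypothetical helpers.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterStatus:
    health: str
    osds_total: int
    osds_up: int
    osds_in: int
    pgs_total: int
    pgs_active_clean: int


def parse_ceph_status(text: str) -> ClusterStatus:
    """Extract a few fields from plain-text `ceph -s` output in the layout shown above."""
    health = re.search(r"health\s+(\S+)", text)
    osds = re.search(r"(\d+) osds: (\d+) up, (\d+) in", text)
    pgs = re.search(r"(\d+) pgs:", text)
    if not (health and osds and pgs):
        raise ValueError("unrecognized ceph -s output")
    clean = re.search(r"(\d+) active\+clean", text)
    return ClusterStatus(
        health=health.group(1),
        osds_total=int(osds.group(1)),
        osds_up=int(osds.group(2)),
        osds_in=int(osds.group(3)),
        pgs_total=int(pgs.group(1)),
        pgs_active_clean=int(clean.group(1)) if clean else 0,
    )


def is_stable(status: ClusterStatus) -> bool:
    """HEALTH_OK, every OSD up and in, and every placement group active+clean."""
    return (
        status.health == "HEALTH_OK"
        and status.osds_up == status.osds_in == status.osds_total
        and status.pgs_active_clean == status.pgs_total
    )
```

For the output above, `parse_ceph_status` finds 3 of 3 OSDs up and in and 192 of 192 placement groups active+clean, so `is_stable` is true.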

A 10GB RBD image is created, mapped, formatted, mounted and then destroyed with:

# rbd create --size 10240 test1
# rbd map test1 --pool rbd
# mkfs.ext4 /dev/rbd/rbd/test1
# mount /dev/rbd/rbd/test1 /mnt
# df -h /mnt
Filesystem      Size  Used Avail Use% Mounted on
/dev/rbd1       9.8G   23M  9.2G   1% /mnt
# umount /mnt
# rbd unmap /dev/rbd/rbd/test1
# rbd rm test1
Removing image: 100% complete...done.