For months I’ve asked people who work with Puppet modules on a daily basis for a HOWTO that I could follow to set up a new cluster with the Grizzly OpenStack release. Such a HOWTO is not needed by people who develop the modules or deploy OpenStack for a living. It is, however, very helpful for the casual system administrator who wants to get it running in a few hours, all by herself or himself.

packstack seems to be exactly that: a walkthrough of a well-tested procedure that anyone with a basic understanding of what OpenStack is can rely on. It requires an RPM-based distribution, however, and switching to one may be a significant effort for someone used to DEB-based operating systems.

For Ubuntu users, the kickstack project was started in summer 2013 and targets hands-on sessions, with the declared goal of making deployment easy for people new to both OpenStack and Puppet. Later on, it inspired Dan Bode to use a new approach based on dependency injection to implement openstack-installer for Cisco.

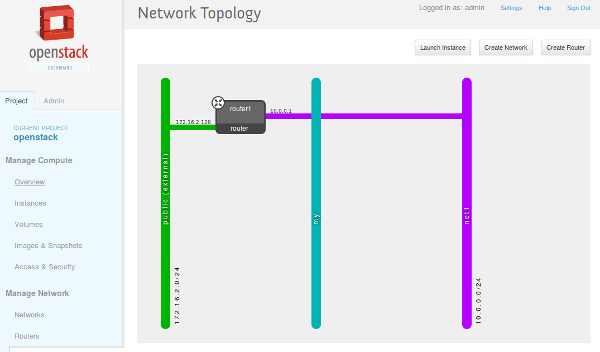

The proposed HOWTO uses openstack-installer to deploy OpenStack against an existing Ceph cluster and provides:

- keystone
- nova (kvm)
- quantum (openvswitch + gre)
- cinder (Ceph backend)
- horizon
- glance (Ceph backend)
