Razor can be used to deploy OpenStack. However, because it was only released in mid-2012, debugging problems is often required. A razor virtual machine is created in an OpenStack cluster configured to allow network booting an OpenStack instance and running nested virtual machines. When a new virtual machine instance is created in the same tenant as the razor machine, it gets a PXE boot from razor.

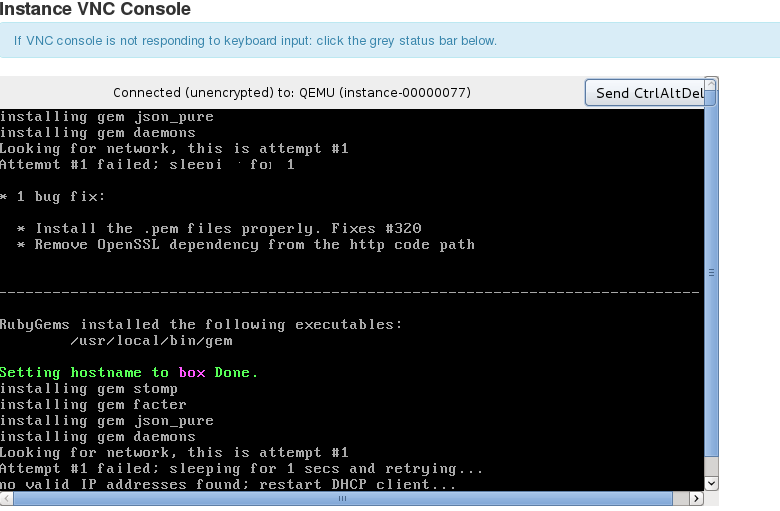

Testing and debugging razor can then be done within OpenStack and does not require dedicated hardware, as shown in the following screenshot of the VNC console of a virtual machine being deployed from razor ( it shows the razor microkernel booting ):

Installing razor

The virtual machine created to be the razor host will also be the puppet master for OpenStack, as instructed in the HOWTO. It will run mongodb and nodejs in addition to the puppet master. The recommended flavor for the virtual machine has 1GB of RAM and a 10GB disk.

Preparing the OpenStack cluster

A razor virtual machine is created in an OpenStack cluster configured to allow network booting an OpenStack instance and running nested virtual machines. When a new virtual machine instance is created in the same tenant as the razor machine, it gets a PXE boot from razor.

The manifest provided by the razor HOWTO sets the role of the OpenStack controller (i.e. the one providing the API, the horizon dashboard, etc.) depending on its MAC address. A virtual machine is created with nova boot and its MAC address is extracted with:

    # ip link show dev eth0
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000
        link/ether fa:16:3e:0d:1e:6b brd ff:ff:ff:ff:ff:ff

The MAC address (fa:16:3e:0d:1e:6b here) is then used to modify the relevant section of /etc/puppet/manifests/site.pp as follows:

    # a tag to identify my
    rz_tag { "mac_eth0_of_the_controller":
      tag_label   => "mac_eth0_of_the_controller",
      tag_matcher => [ {
        'key'     => 'mk_hw_nic1_serial',
        'compare' => 'equal',
        'value'   => "fa:16:3e:0d:1e:6b",
        'inverse' => "false",
      } ],
    }

    # a tag to identify my
    rz_tag { "not_mac_eth0_of_the_controller":
      tag_label   => "not_mac_eth0_of_the_controller",
      tag_matcher => [ {
        'key'     => 'mk_hw_nic1_serial',
        'compare' => 'equal',
        'value'   => "fa:16:3e:0d:1e:6b",
        'inverse' => "true",
      } ],
    }

With these two tags, the machine with the matching MAC address becomes the controller and all other machines are installed as compute nodes. The controller runs the rabbitmq daemon, which is sensitive to resource shortage: if the available resources (disk or RAM) drop under a threshold, the queue freezes until more resources are made available. For this reason the controller VM must be configured with at least 10GB of disk and 2GB of RAM.
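The MAC extraction and the site.pp update described above can be scripted. The following is a minimal sketch, not part of the razor HOWTO: the function names `mac_of_link` and `set_controller_mac` are made up for illustration, and the second one assumes the MAC only appears in `'value' => "..."` lines of the manifest, as in the excerpt above.

```shell
# Print the MAC address found on the "link/ether" line of
# `ip link show dev eth0` output read from stdin.
mac_of_link() {
    awk '$1 == "link/ether" { print $2; exit }'
}

# Hypothetical helper: rewrite the MAC in every  'value' => "..."  line
# of a manifest read from stdin; the new MAC is the first argument.
set_controller_mac() {
    sed -E "s/('value'[[:space:]]*=>[[:space:]]*\")[0-9a-fA-F:]{17}(\")/\1$1\2/"
}
```

On the controller, `ip link show dev eth0 | mac_of_link` prints the address. On the razor host, `set_controller_mac fa:16:3e:0d:1e:6b < /etc/puppet/manifests/site.pp > /tmp/site.pp.new` writes an updated copy that can be reviewed before it replaces the original.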

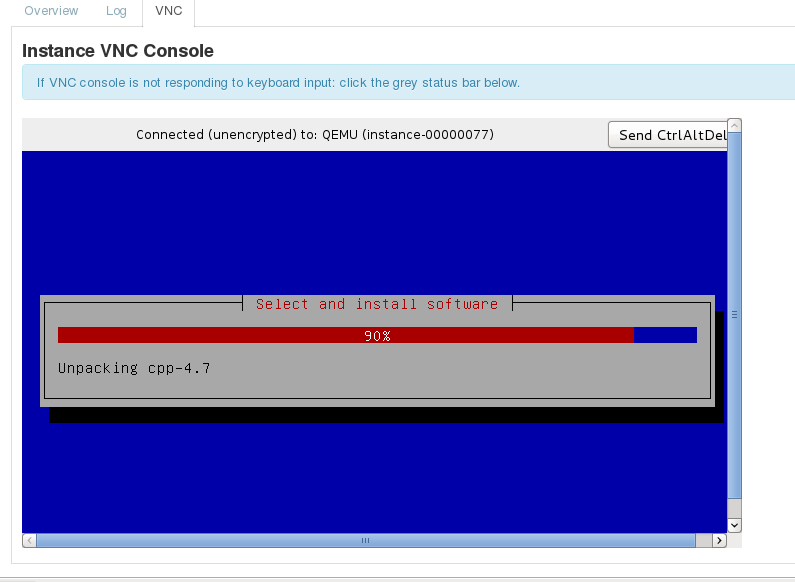

Installing the controller

The controller will automatically be installed by razor and the progress can be followed using the VNC console as shown in the following screenshot.

When the installation stops, it is possible to log in using the root password set in /etc/puppet/manifests/site.pp:

    rz_model { 'controller_model':
      ensure      => present,
      description => 'Controller Wheezy Model',
      image       => 'debian-wheezy-7.0-amd64.iso',
      metadata    => {'domainname'      => 'novalocal',
                      'hostname_prefix' => 'controller',
                      'root_password'   => 'XXXXXX'},
      template    => 'debian_wheezy',
    }

Retry the controller installation

While debugging razor, it is necessary to be able to restart the controller installation from scratch and wipe out any traces of the previous attempts.

The controller virtual machine is known to razor as an active model, which is listed with:

    # razor active_model get
    Active Models:
    Label              State        Node UUID               Broker         Bind #  UUID
    controller_policy  broker_fail  4mmJd7rUxeYaFCOc5pihaR  puppet_broker  5       4tfhtXGEKseT78BcnhxpF9

When rebooting the controller, the State of the active model determines what razor will do. Removing the active model with

    # razor active_model remove 4tfhtXGEKseT78BcnhxpF9

will force razor to forget all it knows about the virtual machine and start over from scratch.
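When the installation is retried many times, looking up the UUID by hand becomes tedious. The sketch below assumes the output of razor active_model get is whitespace-separated with the policy label in the first column and the active model UUID in the last one, as in the listing above. `active_model_uuids` is a made-up name.

```shell
# Print the active model UUID (last column) of every row of
# `razor active_model get` output (read from stdin) whose first
# column equals the policy label given as first argument.
active_model_uuids() {
    awk -v label="$1" '$1 == label { print $NF }'
}

# Possible use on the razor host (not run here):
#   razor active_model get | active_model_uuids controller_policy |
#       while read -r uuid; do razor active_model remove "$uuid"; done
```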

The puppet master should also be reset. The controller registers itself with a certificate that is listed with

    # puppetca list --all
    + "a3c9eb40fe02012ff1307a163e128b2f" (14:89:F6:C6:74:C2:A4:CD:0C:2D:43:6D:B8:D7:29:29)
    + "wheezy.novalocal" (38:F3:28:54:CA:DB:9E:6D:27:A9:05:C7:FD:18:86:A3) (alt names: "DNS:puppet", "DNS:puppet.novalocal", "DNS:wheezy.novalocal")

and can be removed with

    # puppetca clean a3c9eb40fe02012ff1307a163e128b2f
    notice: Revoked certificate with serial 11
    notice: Removing file Puppet::SSL::Certificate a3c9eb40fe02012ff1307a163e128b2f at '/var/lib/puppet/ssl/ca/signed/a3c9eb40fe02012ff1307a163e128b2f.pem'
    notice: Removing file Puppet::SSL::Certificate a3c9eb40fe02012ff1307a163e128b2f at '/var/lib/puppet/ssl/certs/a3c9eb40fe02012ff1307a163e128b2f.pem'
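The certificate names can be pulled out of the listing instead of being copied by hand. This is a sketch that assumes signed certificates are printed on lines starting with + followed by the quoted name, as in the output above. `signed_cert_names` is a made-up name.

```shell
# Print the name of every signed ("+") certificate found in
# `puppetca list --all` output read from stdin.
signed_cert_names() {
    awk -F'"' '/^\+/ { print $2 }'
}

# Possible use on the puppet master (not run here). The master's own
# certificate, wheezy.novalocal in the listing above, must be kept:
#   puppetca list --all | signed_cert_names | grep -v -x 'wheezy.novalocal'
```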

If the puppet master stored information related to the node in the database, it can be removed with the following SQL script:

    SET @host_id = 4;
    DELETE FROM fact_values WHERE host_id=@host_id;
    DELETE FROM param_values WHERE resource_id IN ( SELECT id FROM resources WHERE host_id=@host_id );
    DELETE FROM resource_tags WHERE resource_id IN ( SELECT id FROM resources WHERE host_id=@host_id );
    DELETE FROM resources WHERE host_id=@host_id;
    DELETE FROM hosts WHERE id=@host_id;

The value in the SET @host_id = 4; line is the one displayed by

    mysql -e "select id from hosts where name = 'a3c9eb40fe02012ff1307a163e128b2f'" puppet

where the name field is the one displayed by puppetca list --all.
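Since the host id changes with every new attempt, the SQL script can be generated instead of edited. A minimal sketch, assuming the same table names as the script above. `node_cleanup_sql` is a made-up name and it only prints the statements, it does not run them.

```shell
# Print the cleanup statements for the numeric host id given as first
# argument. Refuses anything that is not a plain number.
node_cleanup_sql() {
    case "$1" in
        ''|*[!0-9]*) echo "usage: node_cleanup_sql HOST_ID" >&2; return 1 ;;
    esac
    cat <<EOF
SET @host_id = $1;
DELETE FROM fact_values WHERE host_id=@host_id;
DELETE FROM param_values WHERE resource_id IN ( SELECT id FROM resources WHERE host_id=@host_id );
DELETE FROM resource_tags WHERE resource_id IN ( SELECT id FROM resources WHERE host_id=@host_id );
DELETE FROM resources WHERE host_id=@host_id;
DELETE FROM hosts WHERE id=@host_id;
EOF
}

# Possible use on the puppet master (not run here); -N and -B make
# mysql print the bare id without column names or table borders:
#   id=$(mysql -N -B -e "select id from hosts where name = 'a3c9eb40fe02012ff1307a163e128b2f'" puppet)
#   node_cleanup_sql "$id" | mysql puppet
```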

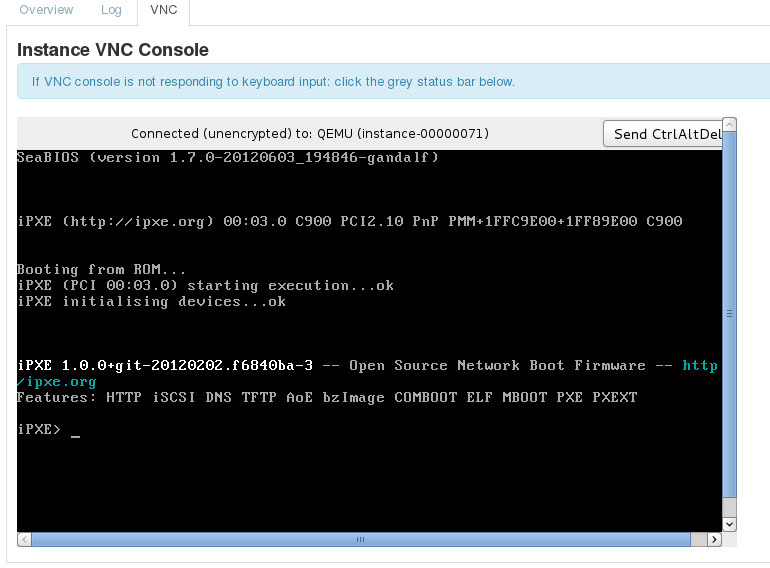

Debugging booting from razor

When the controller virtual machine attempts to boot from the network, it will show a VNC console that looks like this:

If it does not, it means kvm does not attempt to boot from the network and network boot an OpenStack instance should be checked.

If it does boot from razor but fails before starting to install the operating system, it is worth checking the razor logs in /opt/razor/log/. To make sure the razor DHCP server picks up the DHCP request of the controller virtual machine, check /var/log/daemon.log. If a file is not found, look into /opt/razor/image/mk, which contains the files used by the razor microkernel, or /opt/razor/image/os, which contains the files extracted from the boot image.
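Checking for the DHCP request can be narrowed down to the MAC address of the controller. The sketch below assumes that the DHCP server logs lines containing both the string dhcp (in any case) and the MAC address of the client. The exact log format depends on the DHCP server in use and is not specified here. `dhcp_requests_from` is a made-up name.

```shell
# Print the DHCP related log lines (read from stdin) that mention the
# MAC address given as first argument, ignoring case.
dhcp_requests_from() {
    grep -i 'dhcp' | grep -i -F -- "$1"
}

# Possible use on the razor host (not run here):
#   dhcp_requests_from fa:16:3e:0d:1e:6b < /var/log/daemon.log
```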

Debugging preseed

After booting from the OS kernel found in /opt/razor/image/os, the installation is driven by the files found in /opt/razor/lib/project_razor/model/debian/wheezy:

    total used in directory 32 available 4952892
    -rw-r--r-- 1 root root  392 Oct  1 00:39 boot_install.erb
    -rw-r--r-- 1 root root  267 Oct  1 00:39 boot_local.erb
    -rw-r--r-- 1 root root  729 Oct  1 00:39 kernel_args.erb
    -rw-r--r-- 1 root root 2547 Oct 22 10:34 os_boot.erb
    -rw-r--r-- 1 root root  319 Oct  1 00:39 os_complete.erb
    -rw-r--r-- 1 root root 2633 Oct 22 09:47 preseed.erb

The kernel_args.erb file contains the URL #{api_svc_uri}/policy/callback/#{policy_uuid}/preseed/file, which razor answers with the content generated from preseed.erb.

The files in /opt/razor/lib/project_razor/model/debian/wheezy can be modified and will be used at the next reboot. However, they will be reset when puppet agent -vt is run on the razor host. A git diff can be used to save the patch including the changes and apply it again when puppet agent -vt completes.
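Saving and applying the patch again can be wrapped in two small functions. This is a sketch under the assumption that /opt/razor is the top of the git checkout. `RAZOR_DIR`, `MODEL_SUBDIR`, `save_model_patch` and `apply_model_patch` are names made up for the example.

```shell
# Assumption: /opt/razor is the top of the git working tree.
RAZOR_DIR=${RAZOR_DIR:-/opt/razor}
MODEL_SUBDIR=lib/project_razor/model/debian/wheezy

# Write the local changes made to the model files to the patch file
# given as first argument.
save_model_patch() {
    (cd "$RAZOR_DIR" && git diff -- "$MODEL_SUBDIR") > "$1"
}

# Apply the patch file given as first argument to the working tree.
# git apply reads the patch from stdin when no file is given.
apply_model_patch() {
    (cd "$RAZOR_DIR" && git apply) < "$1"
}

# Possible use on the razor host (not run here):
#   save_model_patch /root/wheezy-model.patch
#   puppet agent -vt
#   apply_model_patch /root/wheezy-model.patch
```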

The installation progress for the controller can be displayed by asking for the logs of the razor active model as follows:

    # razor active_model get 6mdtdf4uWJMpDJRTbFbslD logs
    State                     Action                 Result
    init                      mk_call                n/a
    init                      boot_call              Starting Debian model install
    init                      preseed_file           Replied with preseed file
    init=>preinstall          preseed_start          Acknowledged preseed read
    preinstall=>postinstall   preseed_end            Acknowledged preseed end
    postinstall               postinstall_inject     n/a
    postinstall               boot_call              Replied with os boot script
    postinstall               added_puppet_hosts_ok  n/a
    postinstall               set_hostname_ok        n/a
    postinstall               apt_update_ok          n/a
    postinstall               apt_upgrade_ok         n/a
    postinstall               apt_install_ok         n/a
    postinstall               os_boot                n/a
    postinstall=>os_complete  os_final               Replied with os complete script
    os_complete=>broker_fail  broker_agent_handoff   n/a

The line

    postinstall               apt_update_ok          n/a

is created by the following lines

    apt-get -y update
    [ "$?" -eq 0 ] && curl <%= callback_url("postinstall", "apt_update_ok") %> ||
    curl <%= callback_url("postinstall", "apt_update_fail") %>

in the /opt/razor/lib/project_razor/model/debian/wheezy/os_boot.erb file. However, it is not always straightforward to match a log line with the corresponding action from razor.
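One way to make the matching easier is to list every callback found in a template together with its line number. The sketch below assumes the callbacks are always written as callback_url("state", "action") on a single line, as in the excerpt above. `list_callbacks` is a made-up name.

```shell
# Print "line-number state action" for every callback_url("...", "...")
# call found in the ERB template read from stdin.
list_callbacks() {
    grep -n -o 'callback_url("[^"]*", *"[^"]*")' |
        sed -E 's/^([0-9]+):callback_url\("([^"]*)", *"([^"]*)"\)$/\1 \2 \3/'
}

# Possible use on the razor host (not run here):
#   list_callbacks < /opt/razor/lib/project_razor/model/debian/wheezy/os_boot.erb
```

The second and third columns of the output match the State and Action columns of the active model logs, and the first column gives the line of the template to look at.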

Debugging the puppet manifest

After successfully installing the operating system, razor will try to install and run puppet as found in /opt/razor/lib/project_razor/broker/puppet.rb:

    @run_script_str << session.exec!("bash /tmp/puppet_init.sh |& tee /tmp/puppet_init.out")

and the puppet_init.sh script is compiled from agent_install.erb

    install_script = File.join(File.dirname(__FILE__), "puppet/agent_install.erb")
    contents = ERB.new(File.read(install_script)).result(binding)

which is found at /opt/razor/lib/project_razor/broker/puppet/agent_install.erb. If the razor active model logs end with:

    os_complete=>broker_fail  broker_agent_handoff   n/a

the output of the failed script can be read on the controller instance at /tmp/puppet_init.out.

The problem can be fixed manually from the shell on the controller and the /opt/razor/lib/project_razor/broker/puppet/agent_install.erb script can be modified once the solution is found.
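Whether the broker handoff failed can be checked from the logs without reading them in full. A minimal sketch, assuming the state is the first whitespace-separated column of each log line as in the listing above. `handoff_failed` is a made-up name.

```shell
# Succeed when the last non-empty line of the active model logs
# (read from stdin) has a state ending with broker_fail.
handoff_failed() {
    awk 'NF { state = $1 } END { if (state ~ /broker_fail$/) exit 0; exit 1 }'
}

# Possible use on the razor host (not run here):
#   razor active_model get 6mdtdf4uWJMpDJRTbFbslD logs | handoff_failed &&
#       echo "read /tmp/puppet_init.out on the controller"
```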