Ceph stores objects in pools which are divided into placement groups. When an object is created, modified or deleted, the placement group logs the operation. If an OSD supporting the placement group is temporarily unavailable, the logs are used for recovery when it comes back.

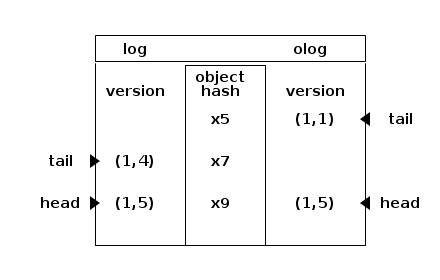

The left column shows the log entries, ordered oldest first. For instance, 1,4 is epoch 1, version 4. The tail, displayed on the left, points to the oldest entry of the log and the head points to the most recent. The right column shows the olog: its entries are authoritative and will be merged into the log, which may require discarding divergent entries. Every log entry relates to an object, which is represented by its hash in the middle column: x5, x7, x9.
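The ordering of entries and the idea of discarding divergent ones can be sketched with a toy model. This is not the PGLog::merge_log code: `Eversion`, `Entry` and `drop_divergent` are hypothetical names, and the sketch assumes only one simplified case, where local entries newer than the authoritative head are treated as divergent.

```cpp
#include <cassert>
#include <tuple>
#include <vector>

// Hypothetical stand-in for a log entry version: (epoch, version).
struct Eversion {
  unsigned epoch;
  unsigned version;
};

// Versions compare by epoch first, then by version, so 1,4 < 1,5 < 2,1.
inline bool operator<(const Eversion& a, const Eversion& b) {
  return std::tie(a.epoch, a.version) < std::tie(b.epoch, b.version);
}

// Hypothetical log entry: a version plus the hash of the object it touches.
struct Entry {
  Eversion v;
  unsigned object_hash;
};

// Assumption for this sketch: any local entry newer than the authoritative
// head is divergent. `log` is ordered oldest first, so the head is log.back().
// The divergent entries are removed from `log` and returned, newest first.
std::vector<Entry> drop_divergent(std::vector<Entry>& log,
                                  Eversion authoritative_head) {
  std::vector<Entry> divergent;
  while (!log.empty() && authoritative_head < log.back().v) {
    divergent.push_back(log.back());
    log.pop_back();
  }
  return divergent;
}
```

With a local log holding 1,1 / 1,4 / 1,5 and an authoritative head of 1,4, the call removes the 1,5 entry and leaves 1,4 as the new local head. The real function covers more cases than this one.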

The use cases implemented by PGLog::merge_log are demonstrated by individual test cases.

Continue reading “Merging Ceph placement group logs”

Ceph placement groups backfilling

Ceph stores objects in pools which are divided into placement groups.

    +---------------------------- pool a ----+
    |+----- placement group 1 -------------+ |
    ||+-------+  +-------+                 | |
    |||object |  |object |                 | |
    ||+-------+  +-------+                 | |
    |+-------------------------------------+ |
    |+----- placement group 2 -------------+ |
    ||+-------+  +-------+                 | |
    |||object |  |object |   ...           | |
    ||+-------+  +-------+                 | |
    |+-------------------------------------+ |
    |               ....                     |
    |                                        |
    +----------------------------------------+
    +---------------------------- pool b ----+
    |+----- placement group 1 -------------+ |
    ||+-------+  +-------+                 | |
    |||object |  |object |                 | |
    ||+-------+  +-------+                 | |
    |+-------------------------------------+ |
    |+----- placement group 2 -------------+ |
    ||+-------+  +-------+                 | |
    |||object |  |object |   ...           | |
    ||+-------+  +-------+                 | |
    |+-------------------------------------+ |
    |               ....                     |
    |                                        |
    +----------------------------------------+
    ...

A placement group relies on OSDs to store its objects. For instance, objects from placement group 1 of pool a will be stored in files managed by an OSD on a designated disk. OSDs are daemons running on machines where storage is available. For instance, a placement group with three replicas will have three OSDs at its disposal: one OSD is the primary (OSD 0) and the other two store copies (OSD 1 and OSD 2).

    +--------- placement group 1 ----------+
    |+----------------+ +----------------+ |
    ||    object A    | |    object B    | |
    |+----------------+ +----------------+ |
    +---+-------------+-----------+--------+
        |             |           |
        |             |           |
      OSD 0         OSD 1       OSD 2
     +------+      +------+    +------+
     |+---+ |      |+---+ |    |+---+ |
     || A | |      || A | |    || A | |
     |+---+ |      |+---+ |    |+---+ |
     |+---+ |      |+---+ |    |+---+ |
     || B | |      || B | |    || B | |
     |+---+ |      |+---+ |    |+---+ |
     +------+      +------+    +------+

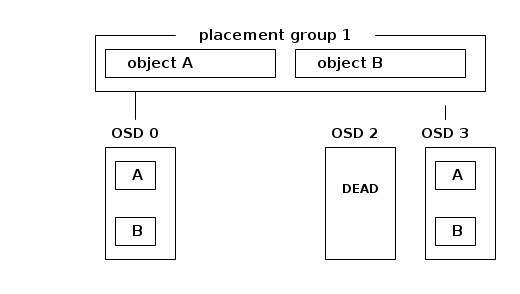

Whenever an OSD dies, the placement group information and the associated objects stored in this OSD are gone and need to be reconstructed using another OSD.

    +--------- placement group 1 ----------+
    |+----------------+ +----------------+ |
    ||    object A    | |    object B    | |
    |+----------------+ +----------------+ |
    +---+-------------+-----------------+--+
        |             |                 |
        |             |                 |
      OSD 0         OSD 1     OSD 2   OSD 3
     +------+      +------+ +------+ +------+
     |+---+ |      |+---+ | |      | |+---+ |
     || A | |      || A | | |      | || A | |
     |+---+ |      |+---+ | | DEAD | |+---+ |
     |+---+ |      |+---+ | |      | |+---+ >----- last_backfill
     || B | |      || B | | |      | || B | |
     |+---+ |      |+---+ | |      | |+---+ |
     +------+      +------+ +------+ +------+

The objects from the primary (OSD 0) are copied to OSD 3: this is called backfilling. It involves the primary (OSD 0) and the backfill peer (OSD 3) scanning over their content and copying the objects which are different or missing from the primary to the backfill peer. Because this may take a long time, the last_backfill attribute is tracked for each local placement group copy (i.e. the placement group information that resides on OSD 3), indicating how far the local copy has been backfilled. When the copy is complete, last_backfill is hobject_t::max().

          OSD 3
    +----------------+
    |+--- object --+ |
    || name : B    | |
    || key : 2     | |
    |+-------------+ |
    |+--- object --+ >----- last_backfill
    || name : A    | |
    || key : 5     | |
    |+-------------+ |
    |                |
    |      ....      |
    +----------------+

Object names are hashed into an integer that can be used to order them. For instance, the object B above has been hashed to key 2 and the object A above has been hashed to key 5. The last_backfill attribute of the placement group draws the limit separating the objects that have already been copied from other OSDs and those in the process of being copied. The objects that are lower than last_backfill have been copied (that would be object B above) and the objects that are greater than last_backfill are going to be copied.
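The role of last_backfill as a boundary in the key order can be illustrated with a small model. It is only a sketch: `Key`, `KEY_MAX`, `already_backfilled` and `pending` are hypothetical names, a plain integer stands in for the hashed object, and treating an object whose key equals last_backfill as already copied is an assumption of this example.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in for the hashed object key.
using Key = std::uint32_t;

// Plays the role of hobject_t::max(): nothing is left to copy.
const Key KEY_MAX = std::numeric_limits<Key>::max();

// Assumption: an object at or below last_backfill has already been copied.
bool already_backfilled(Key k, Key last_backfill) {
  return k <= last_backfill;
}

// Names of the objects that remain to be copied, in key order.
// `objects` maps the hashed key to the object name.
std::vector<std::string> pending(const std::map<Key, std::string>& objects,
                                 Key last_backfill) {
  std::vector<std::string> out;
  for (auto it = objects.upper_bound(last_backfill); it != objects.end(); ++it) {
    out.push_back(it->second);
  }
  return out;
}
```

With object B at key 2, object A at key 5 and last_backfill set to 2, `pending` returns only A. With last_backfill set to `KEY_MAX` it returns nothing, which matches a complete copy.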

Backfilling is expensive and placement groups do not exclusively rely on it to recover from failure. Placement groups log their changes, for instance deleting or modifying an object. When an OSD is unavailable for a short period of time, it may be cheaper to replay the logs.

Installing ceph with ceph-deploy

A ceph-deploy package is created for Ubuntu raring and installed with

dpkg -i ceph-deploy_0.0.1-1_all.deb

An SSH key is generated without a passphrase and copied to the root .ssh/authorized_keys file of each host on which ceph-deploy will act:

    # ssh-keygen
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    ca:1f:c3:ce:8d:7e:27:54:71:3b:d7:31:32:14:ba:68 root@bm0014.the.re
    The key's randomart image is:
    +--[ RSA 2048]----+
    | .o. |
    | oo.o |
    | . oo.+|
    | . o o o|
    | SE o o |
    | . o. . |
    | o +. |
    | + =o . |
    | .*..o |
    +-----------------+
    # for i in 12 14 15
    do
      ssh bm00$i.the.re cat \>\> .ssh/authorized_keys < .ssh/id_rsa.pub
    done

Each host is installed with Ubuntu raring and has a spare, unused, disk at /dev/sdb. The ceph packages are installed with:

ceph-deploy install bm0012.the.re bm0014.the.re bm0015.the.re

The short version of each FQDN is added to /etc/hosts on each host, because ceph-deploy will assume that it exists:

    for host in bm0012.the.re bm0014.the.re bm0015.the.re
    do
      getent hosts bm0012.the.re bm0014.the.re bm0015.the.re | \
        sed -e 's/\.the\.re//' | ssh $host cat \>\> /etc/hosts
    done

The ceph cluster configuration is created with:

    # ceph-deploy new bm0012.the.re bm0014.the.re bm0015.the.re

and the corresponding mons are deployed with:

ceph-deploy mon create bm0012.the.re bm0014.the.re bm0015.the.re

Even after the command returns, it takes a few seconds for the keys to be generated on each host: the ceph-mon process shows when it is complete. Before creating the osds, the keys are obtained from a mon with:

ceph-deploy gatherkeys bm0012.the.re

The osds are then created with:

ceph-deploy osd create bm0012.the.re:/dev/sdb bm0014.the.re:/dev/sdb bm0015.the.re:/dev/sdb

After a few seconds the cluster stabilizes, as shown with

    # ceph -s
       health HEALTH_OK
       monmap e1: 3 mons at {bm0012=188.165:6789/0,bm0014=188.165:6789/0,bm0015=188.165:6789/0}, election epoch 24, quorum 0,1,2 bm0012,bm0014,bm0015
       osdmap e14: 3 osds: 3 up, 3 in
        pgmap v106: 192 pgs: 192 active+clean; 0 bytes data, 118 MB used, 5583 GB / 5583 GB avail
       mdsmap e1: 0/0/1 up

A 10GB RBD is created, mounted and destroyed with:

    # rbd create --size 10240 test1
    # rbd map test1 --pool rbd
    # mkfs.ext4 /dev/rbd/rbd/test1
    # mount /dev/rbd/rbd/test1 /mnt
    # df -h /mnt
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/rbd1       9.8G   23M  9.2G   1% /mnt
    # umount /mnt
    # rbd unmap /dev/rbd/rbd/test1
    # rbd rm test1
    Removing image: 100% complete...done.

ceph internals : buffer lists

The ceph buffers are used to process data in memory. For instance, when a FileStore handles an OP_WRITE transaction it writes a list of buffers to disk.

                                                +---------+
                                                | +-----+ |
        list             ptr                    | |     | |
    +----------+       +-----+                  | |     | |
    | append_  >------->     >-------------------->     | |
    |  buffer  |       +-----+                  | |     | |
    +----------+                        ptr     | |     | |
    |   _len   |      list            +-----+   | |     | |
    +----------+    +------+     ,--->+     >----->     | |
    | _buffers >---->      >-----     +-----+   | +-----+ |
    +----------+    +----^-+     \      ptr     |   raw   |
    |  last_p  |        /         `-->+-----+   | +-----+ |
    +--------+-+       /              +     >----->     | |
             |       ,-          ,--->+-----+   | |     | |
             |      /        ,---               | |     | |
             |     /     ,---                   | |     | |
           +-v--+-^--+--^--+-------+            | |     | |
           | bl | ls |  p  | p_off >------------->|     | |
           +----+----+-----+-------+            | +-----+ |
           |               |  off  >----------->|   raw   |
           +---------------+-------+            |         |
                   iterator                     +---------+

The actual data is stored in buffer::raw opaque objects. They are accessed through a buffer::ptr. A buffer::list is a sequential list of buffer::ptr which can be used as if it was a contiguous data area although it can be spread over many buffer::raw containers, as represented by the rectangle enclosing the two buffer::raw objects in the above drawing. The buffer::list::iterator can be used to walk each character of the buffer::list as follows:

    bufferlist bl;
    bl.append("ABC", 3);
    {
      bufferlist::iterator i(&bl);
      ++i;
      EXPECT_EQ('B', *i);
    }
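The relationship between the three layers can also be shown with a toy model that does not depend on the ceph headers. This is a sketch under stated assumptions, not the ceph implementation: `Raw`, `Ptr` and `List` are hypothetical stand-ins for buffer::raw, buffer::ptr and buffer::list, and here every `append` allocates a new raw container, which the real class does not necessarily do.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <vector>

// Toy stand-in for buffer::raw: the container that owns the bytes.
using Raw = std::string;

// Toy stand-in for buffer::ptr: a window (offset, length) into a shared Raw.
struct Ptr {
  std::shared_ptr<Raw> raw;
  std::size_t off;
  std::size_t len;
  char at(std::size_t i) const { return (*raw)[off + i]; }
};

// Toy stand-in for buffer::list: a sequence of Ptr that reads as one
// contiguous area even though the bytes live in separate Raw containers.
class List {
 public:
  // Assumption of this sketch: each append creates its own Raw.
  void append(const std::string& data) {
    buffers_.push_back(Ptr{std::make_shared<Raw>(data), 0, data.size()});
    len_ += data.size();
  }

  std::size_t length() const { return len_; }

  // Returns the byte at logical offset `pos`, walking across the segments.
  char operator[](std::size_t pos) const {
    assert(pos < len_);
    for (const Ptr& p : buffers_) {
      if (pos < p.len) return p.at(pos);
      pos -= p.len;
    }
    return '\0';  // not reached when pos < len_
  }

 private:
  std::vector<Ptr> buffers_;
  std::size_t len_ = 0;
};
```

Appending "AB" and then "C" yields a list of length 3 in which offset 1 reads 'B' and offset 2 reads 'C', although the two pieces are stored in different containers.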

Chaining extended attributes in ceph

Ceph uses extended file attributes to store file metadata, as a list of key / value pairs. Some file system implementations do not allow storing more than 2048 characters in the value associated with a key. To overcome this limitation, Ceph implements chained extended attributes.

A value that is 5120 characters long will be stored in three separate attributes:

- user.key : first 2048 characters

- user.key@1 : next 2048 characters

- user.key@2 : last 1024 characters

The proposed unit tests may be used as documentation describing in detail how it is implemented from the caller's point of view.
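The naming scheme listed above can be sketched as a function that splits a value into chunks. `chain_xattr` is a hypothetical helper written for this illustration, not the function ceph uses, and it only models the split, not the calls that write the attributes to the file system.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Splits `value` into chunks of at most `chunk` characters and names them
// name, name@1, name@2, ... An empty value still yields one (empty) attribute.
std::vector<std::pair<std::string, std::string>>
chain_xattr(const std::string& name, const std::string& value,
            std::size_t chunk = 2048) {
  std::vector<std::pair<std::string, std::string>> out;
  std::size_t index = 0;
  std::size_t pos = 0;
  do {
    // The first chunk keeps the plain name, the following ones get a suffix.
    std::string key = index == 0 ? name : name + "@" + std::to_string(index);
    out.emplace_back(key, value.substr(pos, chunk));
    pos += chunk;
    ++index;
  } while (pos < value.size());
  return out;
}
```

Calling `chain_xattr("user.key", value)` with a 5120 character value returns three pairs named user.key, user.key@1 and user.key@2, holding 2048, 2048 and 1024 characters.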


unit testing ceph : the Throttle.cc example

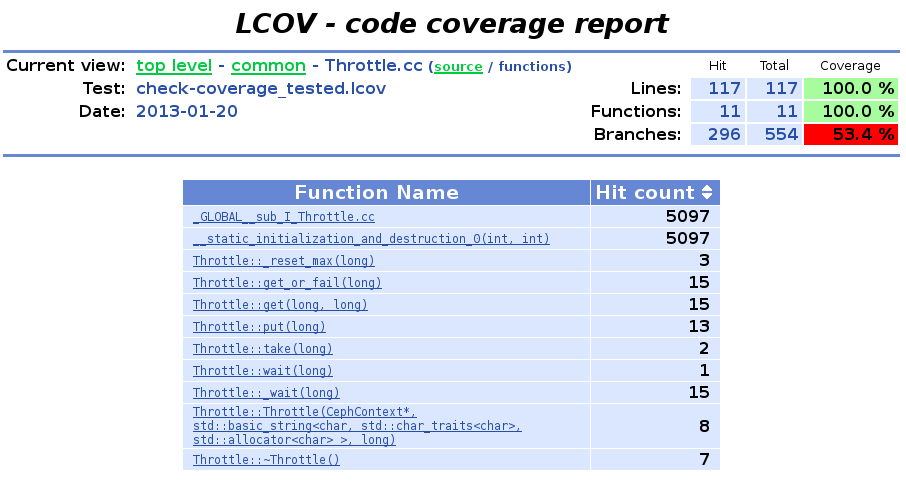

The throttle implementation for ceph can be unit tested, using threads for the cases where it needs to block. The tests, written with the gtest framework, produce coverage information for lcov, which shows that 100% of the lines of code are covered.


ceph code coverage (part 2/2)

WARNING: teuthology has changed significantly, the instructions won’t work anymore.

When running ceph integration tests with teuthology, code coverage reports show which lines of code were involved. Adding coverage: true to the integration task and using code compiled with coverage instrumentation (flavor: gcov) collects coverage data. lcov is then used

./virtualenv/bin/teuthology-coverage -v --html-output /tmp/html ...

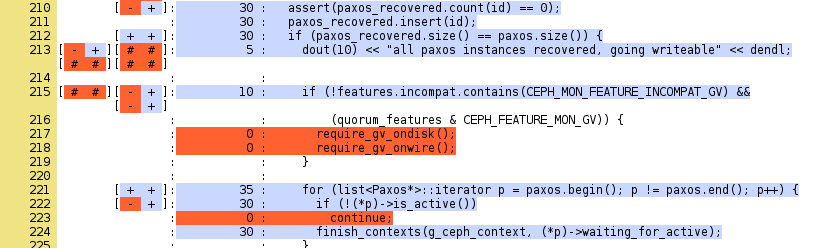

to create an HTML report. It shows that lines 217 and 218 of mon/Monitor.cc are not being used by the scenario.

ceph code coverage (part 1/2)

The ceph sources are compiled with code coverage enabled

    root@ceph:/srv/ceph# ./configure --with-debug CFLAGS='-g' CXXFLAGS='-g' \
        --enable-coverage \
        --disable-silent-rules

and the tests are run

cd src ; make check-coverage

to create the HTML report, which shows where tests could improve code coverage.