WARNING: teuthology has changed significantly, the instructions won’t work anymore.

When running ceph integration tests with teuthology, code coverage reports shows which lines of code were involved. Adding coverage: true to the integration task and using code compiled for code coverage instrumentation with flavor: gcov collects coverage data. lcov is then used

./virtualenv/bin/teuthology-coverage -v --html-output /tmp/html ...

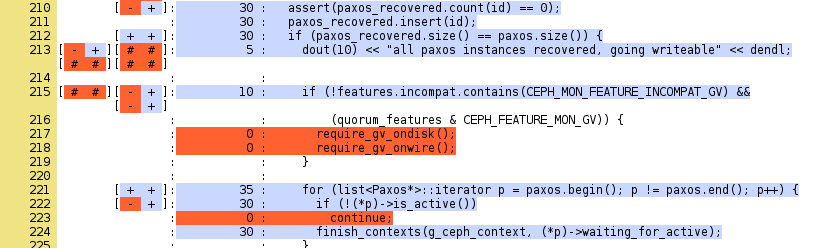

to create an HTML report. It shows that lines 217 and 218 of mon/Monitor.cc are not being used by the scenario.

creating instances and devices

Four instances running Ubuntu Quantal are created in an OpenStack tenant. The instance named ceph drives the integration tests; teuthology1, teuthology2 and teuthology3 are the targets, i.e. they do the actual work and run the integration scripts.

    nova boot --image 'Ubuntu quantal 12.10' \
        --flavor e.1-cpu.10GB-disk.1GB-ram \
        --key_name loic --availability_zone=bm0003 \
        --poll teuthology1

Each of teuthology[123] is given an additional 10 GB volume:

    # euca-create-volume --zone bm0003 --size 10
    # nova volume-list
    ... | 67 | available | None | 10 | ...
    +----+----------------+--------------+------+--...
    # nova volume-attach teuthology1 67 /dev/vdb

When running on a target instance, teuthology explores a predefined list of devices (which has been extended to include /dev/vdb), tests their availability and uses them for the tests when possible.
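The device selection can be pictured with the Python sketch below. It is not teuthology's code: the candidate list is hypothetical (only /dev/vdb comes from the text), and "available" is assumed to mean "exists, is a block device and is not mounted".

```python
import os
import stat

# Hypothetical candidate list: only /dev/vdb comes from the text above.
CANDIDATE_DEVICES = ("/dev/sdb", "/dev/sdc", "/dev/vdb")


def mounted_devices(mounts_file="/proc/mounts"):
    """Return the set of mounted devices (first field of each /proc/mounts line)."""
    try:
        with open(mounts_file) as f:
            return {line.split()[0] for line in f if line.strip()}
    except OSError:
        return set()


def is_available(path, mounted):
    """Assumed availability test: an existing block device that is not mounted."""
    try:
        mode = os.stat(path).st_mode
    except OSError:
        return False
    return stat.S_ISBLK(mode) and path not in mounted


def select_devices(candidates=CANDIDATE_DEVICES, check=None):
    """Return the candidates accepted by check(), keeping the list order."""
    if check is None:
        mounted = mounted_devices()

        def check(path):
            return is_available(path, mounted)

    return [dev for dev in candidates if check(dev)]
```

On a target with the extra volume attached, select_devices() would be expected to include /dev/vdb, provided it is not mounted.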

configuring the instance running teuthology

The instance named ceph will run teuthology.

    sudo apt-get install git-core
    git clone git://github.com/ceph/teuthology.git

The dependencies are installed and the command line scripts created with

    sudo apt-get install python-dev python-virtualenv python-pip libevent-dev
    cd teuthology ; ./bootstrap

The requirements are light because this instance does not run ceph itself. It drives the other instances (teuthology[123]) and instructs them to run the ceph integration scripts.

Teuthology expects a password-less ssh connection to the targets. Although this could be done with ssh agent forwarding, the ssh client used by teuthology does not support it. Instead, a new password-less key is generated with ssh-keygen, and the content of ~/.ssh/id_rsa.pub is appended to the ~/.ssh/authorized_keys file of the ubuntu account on each target instance.
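The password-less connection can be checked from the ceph instance before running teuthology. A minimal sketch, assuming the OpenSSH client: BatchMode=yes makes ssh fail instead of prompting for a password. The helper names are hypothetical; the target names are the ones used in this post.

```python
import subprocess

TARGETS = ["ubuntu@teuthology1", "ubuntu@teuthology2", "ubuntu@teuthology3"]


def ssh_check_command(target):
    """Build an ssh command that fails rather than prompting for a password."""
    return ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=10",
            target, "echo", "good"]


def can_connect(target, run=subprocess.run):
    """Return True if `ssh target echo good` prints 'good' without a password."""
    try:
        result = run(ssh_check_command(target), capture_output=True,
                     text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0 and result.stdout.strip() == "good"
```

Calling can_connect(target) for each entry of TARGETS should return True for all of them before teuthology is started.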

configuring teuthology targets

The targets install packages as instructed by the ceph instance (see the description of the - install: task below).

Ceph is sensitive to clock differences between instances, so ntp should be installed on each target to keep them in sync:

apt-get install ntp

describing the integration tests

The integration tests are described in YAML files interpreted by teuthology. The following YAML file is used; it is a trivial example to make sure the environment is ready to host more complex test suites.

    check-locks: false
    roles:
    - - mon.a
      - mon.c
      - osd.0
    - - mon.b
      - osd.1
    tasks:
    - install:
    - ceph:
        branch: master
        coverage: true
        flavor: gcov
        fs: xfs
    targets:
      ubuntu@teuthology1: ssh-rsa AAAAB3NzaC1yc2...
      ubuntu@teuthology2: ssh-rsa AAAAB3NzaC1yc2...
      ubuntu@teuthology3: ssh-rsa AAAAB3NzaC1yc2...

The check-locks: false line disables the target locking logic and assumes the environment is used by a single user, i.e. each developer deploys teuthology and the required targets in an OpenStack tenant of their own. The roles: section deploys a few OSDs and monitors on the first two targets listed below. The first subsection of roles:

    roles:
    - - mon.a
      - mon.c
      - osd.0

will be deployed on the first subsection of targets:

    targets:
      ubuntu@teuthology1: ssh-rsa AAAAB3NzaC1yc2...

and so on. The - install: task must come first; it installs the required packages on each target. The actual test is the ceph task in the tasks section. It is configured to run binaries compiled for code coverage (flavor: gcov), and teuthology is instructed to collect the coverage information (coverage: true).
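The positional pairing of roles: and targets: can be illustrated in Python. This is a sketch, not teuthology's implementation: the configuration is written as the Python data a YAML loader would return for the file above, and targets without a role group are simply left unused here.

```python
# The roles: and targets: sections of ceph-coverage.yaml as Python data.
# Host keys are shortened exactly as in the post.
config = {
    "roles": [
        ["mon.a", "mon.c", "osd.0"],
        ["mon.b", "osd.1"],
    ],
    "targets": {
        "ubuntu@teuthology1": "ssh-rsa AAAAB3NzaC1yc2...",
        "ubuntu@teuthology2": "ssh-rsa AAAAB3NzaC1yc2...",
        "ubuntu@teuthology3": "ssh-rsa AAAAB3NzaC1yc2...",
    },
}


def assign_roles(config):
    """Pair the n-th group of roles with the n-th target, in file order."""
    roles = config["roles"]
    targets = list(config["targets"])  # dicts keep insertion order (Python 3.7+)
    if len(roles) > len(targets):
        raise ValueError(f"{len(roles)} role groups but only {len(targets)} targets")
    return dict(zip(targets, roles))


for target, target_roles in assign_roles(config).items():
    print(target, "-", target_roles)
```

It prints ubuntu@teuthology1 - ['mon.a', 'mon.c', 'osd.0'] and ubuntu@teuthology2 - ['mon.b', 'osd.1'], the same pairing teuthology logs when it opens connections.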

By default, coverage.py archives the coverage results in a MySQL database; this is disabled here.

The targets are described by user and host name (ubuntu@teuthology1). The connection can be tested with ssh ubuntu@teuthology1 echo good, which must work without a password. Each target must be followed by its ssh host key, as found in the /etc/ssh/ssh_host_rsa_key.pub file on the target machine. The comment part (i.e. the root@teuthology1 at the end of the line in /etc/ssh/ssh_host_rsa_key.pub) must be removed, otherwise teuthology is confused and complains that it cannot parse the line.
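Stripping the comment can be automated. A minimal sketch, assuming the .pub file holds a single "keytype key comment" line; target_entry is a hypothetical helper, and the key below reuses the shortened placeholder from the YAML file rather than a real key.

```python
def target_entry(user_host, host_key_line):
    """Build a 'user@host: keytype key' line for the targets: section.

    host_key_line is the content of /etc/ssh/ssh_host_rsa_key.pub on the
    target. Only the key type and the key are kept; the comment is dropped.
    """
    fields = host_key_line.split()
    if len(fields) < 2:
        raise ValueError(f"not a public key line: {host_key_line!r}")
    key_type, key = fields[0], fields[1]
    return f"{user_host}: {key_type} {key}"


print(target_entry("ubuntu@teuthology1",
                   "ssh-rsa AAAAB3NzaC1yc2... root@teuthology1\n"))
```

This prints ubuntu@teuthology1: ssh-rsa AAAAB3NzaC1yc2..., ready to be pasted under targets:.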

running teuthology and collecting coverage

From the ceph instance, in the teuthology source directory, and assuming ceph-coverage.yaml contains the description discussed above:

    # ./virtualenv/bin/teuthology --archive /tmp/a1 ceph-coverage.yaml
    INFO:teuthology.run_tasks:Running task internal.save_config...
    INFO:teuthology.task.internal:Saving configuration
    INFO:teuthology.run_tasks:Running task internal.check_lock...
    INFO:teuthology.task.internal:Lock checking disabled.
    INFO:teuthology.run_tasks:Running task internal.connect...
    INFO:teuthology.task.internal:Opening connections...
    INFO:teuthology.task.internal:roles: ubuntu@teuthology1 - ['mon.a', 'mon.c', 'osd.0']
    ...
    INFO:teuthology.orchestra.run.out:kernel.core_pattern = core
    INFO:teuthology.task.internal:Transferring archived files...
    INFO:teuthology.task.internal:Removing archive directory...
    INFO:teuthology.task.internal:Tidying up after the test...

Coverage analysis expects the tarball containing the binaries used to run the tests to be on the local file system:

    mkdir /tmp/build
    wget -O /tmp/build/tmp.tgz http://gitbuilder.ceph.com/ceph-tarball-precise-x86_64-gcov/sha1/$(cat /tmp/a1/ceph-sha1)/ceph.x86_64.tgz

The location of the tarball must be set in the global teuthology configuration file:

echo ceph_build_output_dir: /tmp/build >> ~/.teuthology.yaml

Note that the tarball must be named after the directory containing the a1 directory (here /tmp, hence tmp.tgz). The general idea is that this directory collects the results of multiple teuthology runs that use the same set of binaries.
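The naming rule and the download URL can be derived from the archive directory. A sketch under the assumptions of this post: the URL pattern is copied from the wget command above and may no longer be served, and read_sha1 and tarball_location are hypothetical helpers.

```python
import os

# URL pattern copied from the wget command above (gitbuilder of the time).
GITBUILDER_URL = ("http://gitbuilder.ceph.com/ceph-tarball-precise-x86_64-gcov/"
                  "sha1/{sha1}/ceph.x86_64.tgz")


def read_sha1(archive_dir):
    """Return the sha1 recorded by teuthology in archive_dir/ceph-sha1."""
    with open(os.path.join(archive_dir, "ceph-sha1")) as f:
        return f.read().strip()


def tarball_location(archive_dir, sha1, build_output_dir):
    """Return (url, local_path) of the binaries tarball for a run.

    The tarball is named after the directory containing archive_dir
    (/tmp/a1 -> tmp.tgz) and stored in build_output_dir, i.e. the
    ceph_build_output_dir value of ~/.teuthology.yaml.
    """
    parent = os.path.basename(os.path.dirname(os.path.abspath(archive_dir)))
    local_path = os.path.join(build_output_dir, parent + ".tgz")
    return GITBUILDER_URL.format(sha1=sha1), local_path
```

For the run above, tarball_location("/tmp/a1", read_sha1("/tmp/a1"), "/tmp/build") yields /tmp/build/tmp.tgz as the local path, matching the wget command.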

The coverage HTML report is created with:

    # ./virtualenv/bin/teuthology-coverage -v --html-output /tmp/html \
        --lcov-output /tmp/lcov \
        --cov-tools-dir $(pwd)/coverage \
        /tmp
    INFO:teuthology.coverage:initializing coverage data...
    Retrieving source and .gcno files...
    Initializing lcov files...
    Deleting all .da files in /tmp/lcov/ceph/src and subdirectories
    Done.
    Capturing coverage data from /tmp/lcov/ceph/src
    Found gcov version: 4.6.3
    Scanning /tmp/lcov/ceph/src for .gcno files ...
    Found 692 graph files in /tmp/lcov/ceph/src
    Processing src/libcommon_la-AuthUnknownAuthorizeHandler.gcno
    Processing src/librbd_la-internal.gcno
    ...
    Overall coverage rate:
      lines......: 19.6% (26313 of 133953 lines)
      functions..: 16.6% (3305 of 19954 functions)
      branches...: 10.4% (18592 of 178722 branches)

It browses the /tmp directory and processes each directory it finds that contains both a summary.yaml file and a ceph-sha1 file.
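The directory scan can be sketched as follows. This is an illustration, not teuthology-coverage's code: it assumes only the immediate subdirectories of the given root are examined, and find_run_dirs and make_demo_tree are hypothetical helpers.

```python
import os
import tempfile


def find_run_dirs(root):
    """Return the subdirectories of root that hold both summary.yaml and ceph-sha1."""
    runs = []
    for name in sorted(os.listdir(root)):
        path = os.path.join(root, name)
        if (os.path.isdir(path)
                and os.path.isfile(os.path.join(path, "summary.yaml"))
                and os.path.isfile(os.path.join(path, "ceph-sha1"))):
            runs.append(path)
    return runs


def make_demo_tree():
    """Create a throwaway directory with one complete and one incomplete run."""
    root = tempfile.mkdtemp()
    for name, files in (("a1", ("summary.yaml", "ceph-sha1")),
                        ("a2", ("summary.yaml",))):
        os.mkdir(os.path.join(root, name))
        for filename in files:
            open(os.path.join(root, name, filename), "w").close()
    return root


if __name__ == "__main__":
    print(find_run_dirs(make_demo_tree()))  # only the a1 directory qualifies
```

Running find_run_dirs("/tmp") after the teuthology run above would be expected to list /tmp/a1.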

troubleshooting

If teuthology fails on a target, the leftovers may have to be removed manually with:

    umount /tmp/cephtest/data/osd.0.data
    rm -fr /tmp/cephtest

For lcov to work, the toolchain used to compile the ceph sources must match on the machine running teuthology and on the machine used to collect the coverage results. Otherwise lcov may fail.
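One way to spot a mismatch early is to compare gcov versions, since lcov reports the one it uses (Found gcov version: 4.6.3 above). A hedged sketch: it assumes GNU gcov, whose first --version line ends with the version number, and treats an exact version match as a proxy for a matching toolchain, which is a simplification. The helper names are hypothetical.

```python
import re
import subprocess


def parse_gcov_version(version_output):
    """Extract the version from the first line of `gcov --version` output.

    Assumes GNU gcov, e.g. 'gcov (Ubuntu/Linaro 4.6.3-1ubuntu5) 4.6.3'.
    """
    first_line = version_output.splitlines()[0] if version_output else ""
    match = re.search(r"(\d+(?:\.\d+)+)\s*$", first_line)
    if match is None:
        raise ValueError(f"cannot find a version in {first_line!r}")
    return match.group(1)


def local_gcov_version():
    """Run the local gcov and return its version string."""
    out = subprocess.run(["gcov", "--version"], capture_output=True,
                         text=True, check=True).stdout
    return parse_gcov_version(out)
```

The value returned by local_gcov_version() on each machine can then be compared before running teuthology-coverage.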

GitHub may fail to deliver the source tarball matching a commit. cov-init.sh relies on this source tarball to match the coverage data with the corresponding source code:

    wget -q -O- "https://github.com/ceph/ceph/tarball/$SHA1" | \
        tar xzf - --strip-components=1 -C $OUTPUT_DIR/ceph

If it fails with

    gzip: stdin: unexpected end of file
    tar: Child returned status 1
    tar: Error is not recoverable: exiting now
    ERROR:teuthology.coverage:error generating coverage

the best workaround is to try again.
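Trying again can be automated. The sketch below is hypothetical (retry and fetch_source are not part of teuthology): it reruns the same wget | tar pipeline as cov-init.sh under bash with pipefail, so that a failing wget counts as a failure too, and retries a few times with a pause in between.

```python
import os
import subprocess
import time


def retry(operation, attempts=3, delay=5.0):
    """Call operation() until it returns True; give up after `attempts` tries."""
    for attempt in range(1, attempts + 1):
        if operation():
            return True
        if attempt < attempts:
            time.sleep(delay)  # give GitHub a moment before asking again
    return False


def fetch_source(sha1, output_dir):
    """Run the cov-init.sh download pipeline once; return True on success."""
    os.makedirs(os.path.join(output_dir, "ceph"), exist_ok=True)
    pipeline = ('wget -q -O- "https://github.com/ceph/ceph/tarball/$SHA1" | '
                'tar xzf - --strip-components=1 -C "$OUTPUT_DIR/ceph"')
    env = dict(os.environ, SHA1=sha1, OUTPUT_DIR=output_dir)
    result = subprocess.run(["bash", "-o", "pipefail", "-c", pipeline], env=env)
    return result.returncode == 0
```

For example, retry(lambda: fetch_source(sha1, "/tmp/lcov"), attempts=5) makes up to five attempts; the output directory here is only an illustration.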