HOWTO migrate an AMI from Essex to a bootable volume on Havana

A snapshot of an Essex OpenStack instance contains an AMI ext3 file system. It is rsync’ed to a partitioned volume in the Havana cluster. After grub is installed from a chroot, a new instance can be booted from the volume.

HOWTO install Ceph teuthology on OpenStack

Teuthology is used to run Ceph integration tests. It is installed from sources and will use newly created OpenStack instances as targets:

    $ cat targets.yaml
    targets:
      ubuntu@target1.novalocal: ssh-rsa AAAAB3NzaC1yc2...
      ubuntu@target2.novalocal: ssh-rsa AAAAB3NzaC1yc2...

The targets must allow password-free ssh connections as the ubuntu user, with full sudo privileges, from the machine running teuthology. An Ubuntu precise 12.04.2 target must be configured with:

    $ wget -q -O- 'https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc' | \
        sudo apt-key add -
    $ echo 'ubuntu hard nofile 16384' | sudo tee /etc/security/limits.d/ubuntu.conf > /dev/null

Teuthology can then be tried with a configuration file that does nothing but install Ceph and run the daemons:

    $ cat noop.yaml
    check-locks: false
    roles:
    - - mon.a
      - osd.0
    - - osd.1
      - client.0
    tasks:
    - install:
        project: ceph
        branch: stable
    - ceph:

The output should look like this:

    $ ./virtualenv/bin/teuthology targets.yaml noop.yaml
    INFO:teuthology.run_tasks:Running task internal.save_config...
    INFO:teuthology.task.internal:Saving configuration
    INFO:teuthology.run_tasks:Running task internal.check_lock...
    INFO:teuthology.task.internal:Lock checking disabled.
    INFO:teuthology.run_tasks:Running task internal.connect...
    INFO:teuthology.task.internal:Opening connections...
    DEBUG:teuthology.task.internal:connecting to ubuntu@teuthology2.novalocal
    DEBUG:teuthology.task.internal:connecting to ubuntu@teuthology1.novalocal
    ...
    INFO:teuthology.run:Summary data:
    {duration: 363.5891010761261, flavor: basic, owner: ubuntu@teuthology, success: true}
    INFO:teuthology.run:pass
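When the run is scripted and its output is captured with tee, the pipeline's exit status is the one of tee, not of teuthology, so the final log line is a convenient way to decide whether the run passed. A minimal sketch, assuming the output is saved to a file; the helper name `teuthology_passed` and the file name `teuthology.log` are hypothetical:

```shell
# Hypothetical helper: succeed only if the captured teuthology log
# contains the exact line "INFO:teuthology.run:pass".
# -q: quiet, -x: match the whole line, -F: fixed string (no regex).
teuthology_passed() {
    grep -qxF 'INFO:teuthology.run:pass' "$1"
}

# Usage sketch (teuthology logs to stderr, hence 2>&1):
#   ./virtualenv/bin/teuthology targets.yaml noop.yaml 2>&1 | tee teuthology.log
#   teuthology_passed teuthology.log && echo "noop run passed"
```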


Virtualizing legacy hardware in OpenStack

A five-year-old machine, hosting fourteen vservers on Debian GNU/Linux lenny with a 2.6.26-2-vserver-686-bigmem Linux kernel, is being decommissioned. The April non-profit relies on these services (mediawiki, pad, mumble, etc.) for the benefit of its 5,000 members and many working groups. Instead of migrating each vserver individually to an OpenStack instance, it was decided to copy the whole vserver host over to a single OpenStack instance.

The old hardware has 8GB of RAM, a 150GB disk and a dual Xeon with 8 cores in total. The munin statistics show that no additional memory is needed, that the disk is half full and that one core is used on average at all times. An OpenStack instance with 8GB of RAM, a 150GB disk and two cores is prepared. It will boot from a 150GB volume located on the same physical host, to get the maximum disk I/O speed.

After the volume is created, it is mounted on the OpenStack node and the disk of the old machine is rsync’ed to it. The instance is then booted from the volume after a few files, such as fstab, are modified. The OpenStack node is in the same rack and on the same switch as the old hardware. The IP address is removed from the interface of the old hardware and bound to the OpenStack instance. Because nova-network runs with multi-host activated, the IP is bound to the interface of the OpenStack node hosting the instance, which can take over immediately. The public interface of the node is set as an ARP proxy to advertise the bridge to which the instance is connected. The security groups of the instance are disabled (by opening all protocols and ports) because a firewall is running inside the instance.


Disaster recovery on host failure in OpenStack

The host bm0002.the.re, part of an Essex-based OpenStack cluster using LVM-based volumes and multi-host nova-network, becomes unavailable because of a partial disk failure. The host had daily backups: / was copied with rsync and each LV was copied and compressed. Although the disk is failing badly, the host is not down and some reads can still be done. The nova services are shut down, the host is disabled using nova-manage, and an attempt is made to recover data from the partially damaged disks and LVs wherever this gives better results than reverting to yesterday’s backup.


ceph code coverage (part 2/2)

WARNING: teuthology has changed significantly; these instructions no longer work.

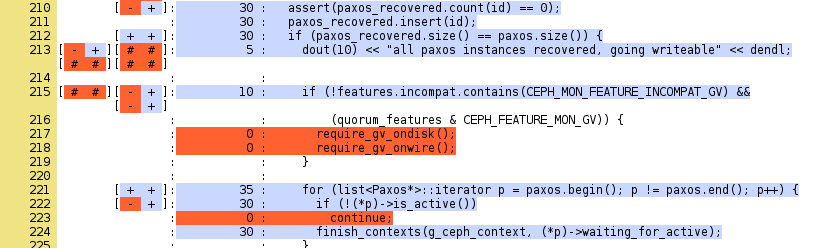

When running ceph integration tests with teuthology, code coverage reports show which lines of code were exercised. Adding coverage: true to the integration task, and using code compiled with code coverage instrumentation (flavor: gcov), collects coverage data. lcov is then used, through teuthology-coverage, to create an HTML report:

    ./virtualenv/bin/teuthology-coverage -v --html-output /tmp/html ...

The report shows that lines 217 and 218 of mon/Monitor.cc are not exercised by the scenario.

anatomy of an OpenStack based integration test for a backuppc puppet module

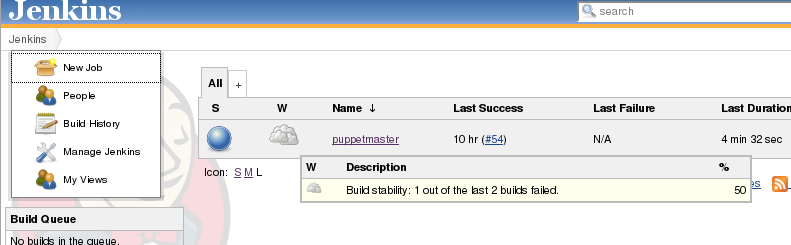

An integration test is run by jenkins within an OpenStack tenant. It checks that the backuppc puppet module is installed:

    ssh root@$instance test -f /etc/backuppc/hosts || return 3

runs a full backup and waits for it to complete:

    ssh root@$instance su -c '"/usr/share/backuppc/bin/BackupPC_serverMesg \
    backup nagios.novalocal nagios.novalocal backuppc 1"' \
        backuppc || return 4
    ssh root@$instance tail -f /var/lib/backuppc/pc/nagios.novalocal/LOG.* | \
        sed --unbuffered -e "s/^/$instance: /" -e '/full backup 0 complete/q'

and polls the nagios livestatus socket until the check_backuppc plugin reports that the backup status is OK:

    while ! ( echo "GET services"
              echo "Filter: host_alias = $instance.novalocal"
              echo "Filter: check_command = check_nrpe_1arg"'!'"check_backuppc" ) |
            ssh root@nagios unixcat /var/lib/nagios3/rw/live |
            grep "BACKUPPC OK - (0/" ; do
        sleep 1
    done
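The polling loop above never gives up, so a check that never turns OK would hang the jenkins job. A minimal sketch of a bounded variant; `wait_for`, `backuppc_monitored` and the return code 5 are hypothetical and not part of the original test:

```shell
# Hypothetical helper: run "$@" until it succeeds, at most $1 times,
# sleeping one second between attempts.
# Returns 0 as soon as the command succeeds, 1 once every attempt failed.
wait_for() {
    attempts=$1
    shift
    while [ "$attempts" -gt 0 ]; do
        if "$@"; then
            return 0
        fi
        attempts=$((attempts - 1))
        # no need to sleep after the last failed attempt
        if [ "$attempts" -gt 0 ]; then
            sleep 1
        fi
    done
    return 1
}

# Usage sketch: wrap the livestatus query in a function and give up
# after 300 attempts (at least five minutes).
#
# backuppc_monitored() {
#     ( echo "GET services"
#       echo "Filter: host_alias = $instance.novalocal"
#       echo "Filter: check_command = check_nrpe_1arg"'!'"check_backuppc" ) |
#     ssh root@nagios unixcat /var/lib/nagios3/rw/live |
#     grep -q "BACKUPPC OK - (0/"
# }
# wait_for 300 backuppc_monitored || return 5
```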


nagios puppet module for the April infrastructure

This document explains the nagios configuration for the infrastructure of the April non-profit organisation.

The module is used to configure the nagios server overseeing all the services. The nagios plugins that cannot be run from the server (such as check_oom_killer) are installed locally on each machine and called by nagios through nrpe. All services are bound to private IPs within the 192.168.0.0/16 network, which is reachable from the nagios server (OpenVPN connects the bare metal machines together), and the firewalls allow TCP connections on the nrpe port (5666).


Migrating OpenVZ virtual machines to OpenStack

An OpenVZ cluster hosts GNU/Linux-based virtual machines. The disk of a virtual machine is extracted with rsync and uploaded to the glance OpenStack image service with glance add … disk_format=ami…. The image is then associated with a kernel image compatible with both OpenStack and the existing file system with glance update … kernel_id=0dfff976-1f55-4184-954c-a111f4a28eef ramdisk_id=aa87c84c-d3be-41d0-a272-0b4a85801a34 ….


realistic puppet tests with jenkins and OpenStack (part 2/2)

The April infrastructure uses puppet manifests stored in a git repository. On each commit, a jenkins job runs realistic tests in a dedicated OpenStack tenant.

If the test is successful, jenkins pushes the commit to the production branch. The production machines can then pull from it:

    root@puppet:/srv/admins# git pull
    Updating 5efbe80..cf59d69
    Fast-forward
     .gitmodules                      |   6 +++
     jenkins/openstack-test.sh        |  53 +++++++++++++++++++++++++++
     jenkins/run-test-in-openstack.sh | 215 +++++++++++++++++++++++++++
     puppetmaster/manifests/site.pp   |  43 ++++++++++++++++++++--
     puppetmaster/modules/apt         |   1 +
     6 files changed, 315 insertions(+), 165 deletions(-)
     create mode 100755 jenkins/openstack-test.sh
     create mode 100644 jenkins/run-test-in-openstack.sh
     create mode 160000 puppetmaster/modules/apt
    root@puppet:/srv/admins# git branch -v
      master     5efbe80 [behind 19] ajout du support nagios, configuration .... refs #1053
    * production cf59d69 Set the nagios password for debugging ...
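The promotion step can be sketched as follows. This is not the author's jenkins job: it assumes the job runs in a clone of the repository, that the whole test is a single command (such as the jenkins/openstack-test.sh listed in the output above, whose arguments are not shown here) and that the remote is named origin; `promote_if_green` is a hypothetical name.

```shell
# Hypothetical helper: run the test command given after the remote name
# and, only if it succeeds, push the tested commit (HEAD) to the
# production branch of that remote.
promote_if_green() {
    remote=$1
    shift
    if "$@"; then
        git push "$remote" HEAD:refs/heads/production
    else
        echo "test failed, production branch left unchanged" >&2
        return 1
    fi
}

# Usage sketch inside the jenkins job:
#   promote_if_green origin ./jenkins/openstack-test.sh
```

Pushing HEAD rather than a branch name guarantees that exactly the commit that was tested is the one the production machines pull.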


routing ipv6 from Hetzner to an OpenStack instance

The 2a01:4f8:162:12e3::2 IPv6 address, taken from the IPv6 subnet provided by Hetzner, is assigned to http://packaging-farm.dachary.org/. The OpenStack host running the packaging-farm.dachary.org instance is configured as an NDP proxy with:

    sysctl -w net.ipv6.conf.all.proxy_ndp=1
    ip -6 neigh add proxy 2a01:4f8:162:12e3::2 dev eth0

and an OpenStack Essex bug is worked around by manually disabling hairpin_mode:

    echo 0 > /sys/class/net/br2003/brif/vnet1/hairpin_mode
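The command above only touches the vnet1 port of the bridge. A minimal sketch that applies the same workaround to every port attached to a bridge; the helper name `disable_hairpin` and its optional sysfs root argument (useful to try it against a fake directory tree) are hypothetical, and writing to the real /sys requires root:

```shell
# Hypothetical helper: set hairpin_mode to 0 on every port of bridge $1.
# $2 optionally overrides the sysfs mount point (defaults to /sys).
disable_hairpin() {
    bridge=$1
    sysfs=${2:-/sys}
    for knob in "$sysfs/class/net/$bridge/brif/"*/hairpin_mode; do
        # when the bridge has no ports the glob stays unexpanded: skip it
        [ -e "$knob" ] || continue
        echo 0 > "$knob"
    done
}

# Usage, as root, for the bridge used above:
#   disable_hairpin br2003
```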

The page can then be retrieved with:

    $ curl --verbose -6 http://packaging-farm.dachary.org/
    * About to connect() to packaging-farm.dachary.org port 80 (#0)
    *   Trying 2a01:4f8:162:12e3::2...
    * connected
    * Connected to packaging-farm.dachary.org (2a01:4f8:162:12e3::2) port 80 (#0)
    > Host: packaging-farm.dachary.org
    > Accept: */*
    >
    ...
    <address>Apache/2.2.19 (Debian) Server at packaging-farm.dachary.org Port 80</address>
    </body></html>
    * Connection #0 to host packaging-farm.dachary.org left intact
    * Closing connection #0
