The automated make check bot for Ceph runs on Ceph pull requests. It is still experimental and is not yet triggered by every pull request.

It does the following:

- Create a Docker container (using ceph-test-helper.sh)

- Check out the merge of the pull request into the destination branch (tested on master, next, giant and firefly)

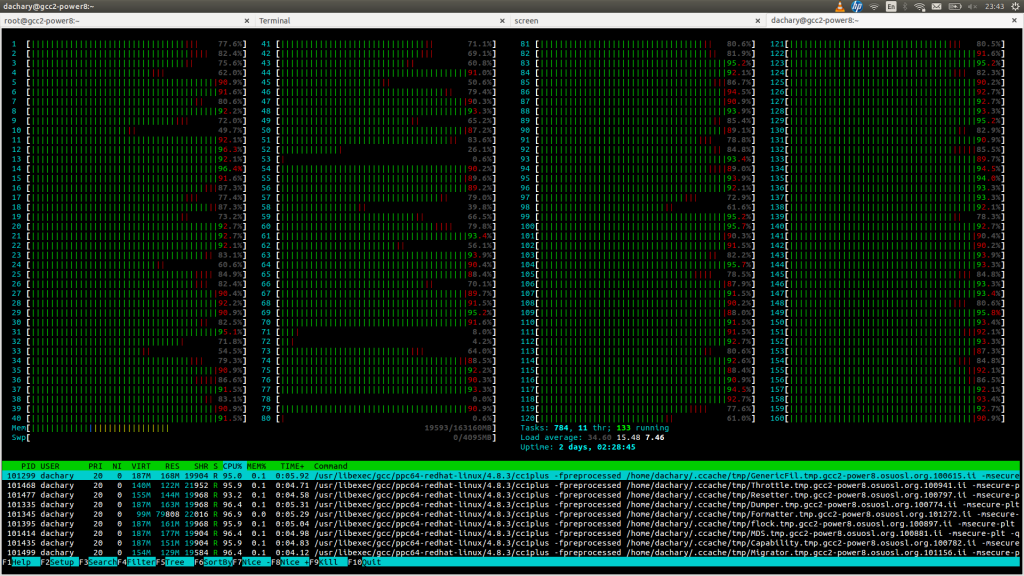

- Execute run-make-check.sh

- Add a comment to the pull request with a link to the full output of run-make-check.sh.
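The steps above can be sketched in Python as follows. This is a minimal sketch, not the bot's actual code. It only builds the commands and the comment request instead of running them. It assumes the merge is fetched from GitHub's `refs/pull/<N>/merge` ref. It leaves out the container step because the interface of ceph-test-helper.sh is not described here. The names `make_check_plan` and `comment_request`, the repository URL and the log URL are all hypothetical.

```python
from typing import Dict, List, Tuple

# Assumption: the upstream repository the bot clones; not stated in the post.
REPO_URL = "https://github.com/ceph/ceph.git"


def make_check_plan(pr_number: int, base_branch: str) -> List[Tuple[str, List[str]]]:
    """Hypothetical helper: return (working directory, argv) pairs for one run.

    The Docker container step (ceph-test-helper.sh) is omitted because its
    command-line interface is not described in the post.
    """
    if pr_number <= 0:
        raise ValueError("pull request numbers are positive")
    # GitHub publishes the merge of a pull request into its base branch
    # under this ref.
    merge_ref = f"refs/pull/{pr_number}/merge"
    return [
        (".", ["git", "clone", "--branch", base_branch, REPO_URL, "ceph"]),
        ("ceph", ["git", "fetch", "origin", merge_ref]),
        ("ceph", ["git", "checkout", "FETCH_HEAD"]),
        ("ceph", ["./run-make-check.sh"]),
    ]


def comment_request(owner: str, repo: str, pr_number: int,
                    log_url: str, success: bool) -> Tuple[str, Dict[str, str]]:
    """Hypothetical helper: build the GitHub API call that comments on the PR.

    Pull request comments are posted through the issue comments endpoint.
    log_url is an assumed location of the full run-make-check.sh output.
    """
    url = f"https://api.github.com/repos/{owner}/{repo}/issues/{pr_number}/comments"
    status = "succeeded" if success else "failed"
    return url, {"body": f"make check {status}: {log_url}"}


if __name__ == "__main__":
    # Print the plan for an example pull request number.
    for cwd, argv in make_check_plan(1234, "firefly"):
        print(cwd, " ".join(argv))
```

Inside the container, each pair could be handed to `subprocess.run(argv, cwd=cwd, check=True)`. Keeping the plan as plain data makes it easy to inspect before anything runs.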

A use case for developers is:

- write a patch and send a pull request

- switch to another branch and work on another patch while the bot is running

- if the bot reports a failure, switch back to the original branch and push a fix: the bot notices the new push and runs again
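The post does not say how the bot notices a repush. One plausible approach is to remember the head commit SHA of each pull request and schedule a new run whenever it changes. The Python sketch below follows that assumption. `prs_to_test` and the (number, SHA) pairs it receives are hypothetical, for instance collected from the GitHub pull request listing.

```python
from typing import Dict, Iterable, List, Tuple


def prs_to_test(open_prs: Iterable[Tuple[int, str]], seen: Dict[int, str]) -> List[int]:
    """Hypothetical helper: return pull requests whose head commit is new.

    open_prs yields (pull request number, head commit SHA) pairs.
    seen maps a pull request number to the last head SHA scheduled for a
    make check run; it is updated in place so a repush (new SHA) is picked
    up on the next poll while an unchanged pull request is skipped.
    """
    pending = []
    for number, head_sha in open_prs:
        if seen.get(number) != head_sha:
            pending.append(number)
            seen[number] = head_sha
    return pending


if __name__ == "__main__":
    seen: Dict[int, str] = {}
    print(prs_to_test([(1, "aaa"), (2, "bbb")], seen))  # both are new
    print(prs_to_test([(1, "aaa"), (2, "ccc")], seen))  # only 2 was repushed
```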

It also helps reviewers, who can wait until the bot succeeds before looking closely at the patch.
