Check the comments for updates

The the.re OpenStack cluster is made of machines hosted in various places. When a machine is rented from Hetzner, it must be installed with Debian GNU/Linux wheezy and partitioned with a large LVM volume group for storage. In this example the new machine is named bm0005.the.re. It is then configured with the compute role as instructed in the puppet HOWTO. A new tenant is created, with quotas matching the resources provided by the hardware (in this case an EX10 model with 64GB RAM, 12 cores at 3.20GHz and 6TB of disk).

Hetzner configuration instructions

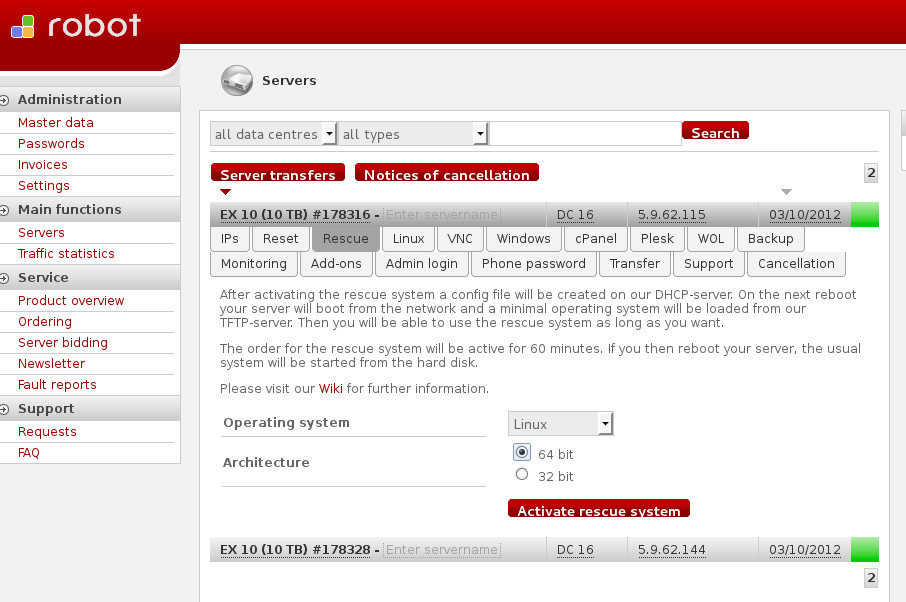

Once the server is created, go to its entry in the control panel and select Rescue.

Choose 64 bit and click Activate rescue system. The root password is then displayed: save it. Click on Reset, select Send CTRL+ALT+DEL to the server and then click Send.

After a few seconds it is possible to log in to the rescue system with ssh:

ssh root@bm0005.the.re

Run installimage and follow its instructions: in the first menu choose Debian, then in the second menu choose Debian-60-squeeze-64-minimal. When the configuration opens in the text editor, make the following changes.

Deactivate the software RAID

SWRAID 0

Set the hostname

HOSTNAME bm0005.the.re

Comment out the /home partition

PART /home ext4 all

Reduce the size of the root file system

PART / ext4 20G
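When the same kind of machine is installed repeatedly, the edits above could also be applied with sed instead of by hand. The sketch below assumes the installimage configuration has been saved to a file whose path is passed as the only argument, and that it contains SWRAID, HOSTNAME and PART lines in the format shown above; apply_installimage_edits is a hypothetical helper, not part of installimage.

```shell
# Hypothetical helper: apply the installimage edits listed above to a
# configuration file (the file path is an assumption, passed as $1).
apply_installimage_edits() {
  conf="$1"
  # Deactivate the software RAID.
  sed -i 's/^SWRAID .*/SWRAID 0/' "$conf"
  # Set the hostname.
  sed -i 's/^HOSTNAME .*/HOSTNAME bm0005.the.re/' "$conf"
  # Comment out the /home partition so the space stays free for LVM.
  sed -i 's|^PART /home |#PART /home |' "$conf"
  # Reduce the size of the root file system ("/ " does not match /boot or /home).
  sed -i 's|^PART / ext4 .*|PART / ext4 20G|' "$conf"
}
```

For instance `apply_installimage_edits /tmp/installimage.conf` rewrites the file in place; the other PART lines (swap, /boot) are left untouched.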

Save and quit. When the script is done, reboot with:

reboot

configure LVM

The remaining disk space is used to create a nova-volumes volume group. This name is required so that nova-volume knows where to allocate logical volumes.

# parted /dev/sdb
(parted) mklabel gpt
(parted) mkpart primary 0 3001GB
(parted) p
Model: ATA ST3000DM001-1CH1 (scsi)
Disk /dev/sdb: 3001GB
Sector size (logical/physical): 512B/4096B
Partition Table: gpt
Number  Start   End     Size    File system  Name     Flags
 1      17.4kB  3001GB  3001GB               primary

and on the first disk, using the space left after the root file system:

# parted /dev/sda
(parted) mkpart primary 56.4GB 3001GB
(parted) p
Model: ATA ST3000DM001-1CH1 (scsi)
Disk /dev/sda: 3001GB
Sector size (logical/physical): 512B/4096B
Partition Table: gpt
Number  Start   End     Size    File system     Name     Flags
 4      1049kB  2097kB  1049kB                           bios_grub
 1      2097kB  34.4GB  34.4GB  linux-swap(v1)
 2      34.4GB  34.9GB  537MB   ext3
 3      34.9GB  56.4GB  21.5GB  ext4
 5      56.4GB  3001GB  2944GB                  primary

Initialize the partitions as LVM physical volumes:

# pvcreate /dev/sdb1
# pvcreate /dev/sda5
# pvs
  PV         VG           Fmt  Attr PSize PFree
  /dev/sda5  nova-volumes lvm2 a--  2.68t 2.68t
  /dev/sdb1  nova-volumes lvm2 a--  2.73t 2.73t

Create a volume group with these physical volumes:

# vgcreate nova-volumes /dev/sda5 /dev/sdb1
# vgs
  VG           #PV #LV #SN Attr   VSize VFree
  nova-volumes   2   0   0 wz--n- 5.41t 5.41t
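The interactive parted session and the LVM commands above can be condensed into a non-interactive sketch. It assumes exactly the disk layout shown above (an empty /dev/sdb, and free space on /dev/sda starting at 56.4GB that becomes partition 5); run and setup_nova_volumes are hypothetical helpers, and setting DRY_RUN=1 only prints the commands so they can be reviewed before the disks are touched.

```shell
# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper reproducing the steps above; assumes the layout shown.
setup_nova_volumes() {
  # Whole second disk: new GPT label and a single partition (/dev/sdb1).
  run parted -s /dev/sdb mklabel gpt
  run parted -s -a optimal /dev/sdb mkpart primary 0% 100%
  # Free space on the first disk, after the root file system (/dev/sda5).
  run parted -s -a optimal /dev/sda mkpart primary 56.4GB 100%
  # Initialize both partitions as physical volumes and group them.
  run pvcreate /dev/sdb1 /dev/sda5
  run vgcreate nova-volumes /dev/sda5 /dev/sdb1
}
```

`DRY_RUN=1 setup_nova_volumes` prints the five commands; running it as root without DRY_RUN executes them, so the partition numbers should be checked with `parted -s /dev/sda p` first.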

requesting more IPs

A sketchfab floating IP pool is created, intended for the corresponding tenant only, so that it is possible to control which IP that tenant gets without the risk of the IP being accidentally allocated to another tenant.

# nova-manage floating create --pool=sketchfab --ip_range=5.9.62.155 --interface=eth0

Note that the address must be given as 5.9.62.155 rather than 5.9.62.155/32, otherwise the command fails.

# nova floating-ip-pool-list
+-----------+
| name      |
+-----------+
| nova      |
| sketchfab |
+-----------+

Because all pools are available to all tenants, the IP must then be allocated to the tenant so that no other tenant can use it:

# nova floating-ip-create sketchfab
+------------+-------------+----------+-----------+
| Ip         | Instance Id | Fixed Ip | Pool      |
+------------+-------------+----------+-----------+
| 5.9.62.155 | None        | None     | sketchfab |
+------------+-------------+----------+-----------+
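Since nova-manage rejects the /32 suffix, a small wrapper can strip it before creating the pool entry. This is a sketch: create_floating_ip and run are hypothetical names, the eth0 interface is taken from the command above, and DRY_RUN=1 prints the command instead of running it. The wrapper only covers the nova-manage step on the controller; the nova floating-ip-create step still has to be run with the tenant's own credentials so that the IP is allocated to that tenant.

```shell
# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical wrapper: add one address to a floating IP pool.
create_floating_ip() {
  pool="$1"
  # nova-manage fails on "5.9.62.155/32", so drop a trailing /32 if present.
  address="${2%/32}"
  run nova-manage floating create --pool="$pool" --ip_range="$address" --interface=eth0
}
```

For example `DRY_RUN=1 create_floating_ip sketchfab 5.9.62.155/32` prints the same nova-manage command as above, without the /32.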

creating more networks

The sample puppet manifest only created one network. This was fixed, and additional networks were created for the.re as follows to prevent a shortage:

for ip in $(seq 2 200) ; do
  nova-manage network create --label=novanetwork \
    --fixed_range_v4=10.145.$ip.0/24 \
    --vlan=$(expr 2000 + $ip)
done
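The loop gives network N the 10.145.N.0/24 range and VLAN 2000+N, so the 199 networks use VLANs 2002 to 2200. Starting at 2 presumably leaves 10.145.1.0/24 to the network created by the puppet manifest (an assumption based on the 10.145.1.x addresses that appear later in this post). The hypothetical helpers below only print the commands, which makes it possible to review the numbering before creating anything.

```shell
# Arguments used for network number $1: a /24 range and a matching VLAN.
network_args() {
  net="$1"
  printf '%s\n' "--fixed_range_v4=10.145.$net.0/24 --vlan=$((2000 + net))"
}

# Print (do not run) the nova-manage commands for networks $1 to $2.
preview_networks() {
  for n in $(seq "$1" "$2"); do
    printf '%s\n' "nova-manage network create --label=novanetwork $(network_args "$n")"
  done
}
```

`preview_networks 2 200 | less` shows the 199 commands the loop runs.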

puppetize the machine

The instructions in the puppet OpenStack HOWTO must then be followed to configure bm0005.the.re so that the puppet agent gives it the role of a compute node.

Check on the controller node that all the services expected to run on bm0005.the.re are active, i.e. their state is shown as :-) rather than XXX:

~# nova-manage service list | grep bm0005
nova-network bm0005.the.re bm0005 enabled :-) 2012-09-18 18:29:14
nova-volume  bm0005.the.re bm0005 enabled :-) 2012-09-18 18:29:07
nova-compute bm0005.the.re bm0005 enabled :-) 2012-09-18 18:29:10
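Instead of looking for the smileys by eye, the listing can be checked by a script, for instance from a monitoring job. This sketch assumes the column layout shown above (host in the second column, state in the fifth); check_services is a hypothetical helper that prints the services that are down and returns a non-zero status if any is down or if the host does not appear at all.

```shell
# Read `nova-manage service list` output on stdin and check host $1.
check_services() {
  awk -v host="$1" '
    # Only consider the lines for the requested host (second column).
    $2 == host {
      seen++
      # The fifth column is the state: ":-)" when alive, "XXX" otherwise.
      if ($5 != ":-)") { print "DOWN: " $1; bad++ }
    }
    # Non-zero exit status if the host is unknown or any service is down.
    END { exit (seen == 0 || bad > 0) }
  '
}
```

Usage on the controller: `nova-manage service list | check_services bm0005.the.re && echo all services are up`.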

The machine can now be used to allocate instances and volumes.

creating an OpenStack control machine

To ensure that the command line tools used to control OpenStack run in a stable environment, a virtual machine is created for the sole purpose of running them. In the following, all operations are assumed to be run from this control machine.

# nova flavor-list | grep 7
| 7  | e.1-cpu.10GB-disk.512MB-ram | 512 | 10 | 0 |  | 1 | 1.0 |
# nova image-list | grep core-12
| c620dd0c-2313-43ae-8e87-47d1457a3cec | core-12.0 | ACTIVE | |
# nova boot --image c620dd0c-2313-43ae-8e87-47d1457a3cec --flavor 7 \
    --key_name cedric --availability_zone=bm0005 control --poll

It is upgraded to wheezy with:

# echo deb http://ftp.debian.org/debian wheezy main > /etc/apt/sources.list.d/wheezy.list
# apt-get update

The euca2ools and python-novaclient packages are installed:

# apt-get install euca2ools python-novaclient

In the following sections, the instructions to download the EC2 or OpenStack credentials are completed by adding

source ec2rc.sh
source openrc.sh

to ~/.bashrc so that they are evaluated at login time and all commands are ready to use.
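Appending these lines by hand twice would source the files twice at each login. A minimal sketch that adds them only once, assuming ec2rc.sh and openrc.sh are saved in the home directory (which is the working directory at login, so the relative names work); add_to_bashrc is a hypothetical helper.

```shell
# Hypothetical helper: add the credential files to ~/.bashrc, once.
add_to_bashrc() {
  rc="$HOME/.bashrc"
  for f in ec2rc.sh openrc.sh; do
    line="source $f"
    # Append the line only if an identical line is not already there.
    grep -qxF "$line" "$rc" 2>/dev/null || printf '%s\n' "$line" >> "$rc"
  done
}
```

Running `add_to_bashrc` again after downloading new credentials leaves ~/.bashrc unchanged.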

creating a virtual machine

Log in to the the.re dashboard, click on the settings link (top right), then click on the OpenStack Credentials menu entry on the left and click on the Download RC File button. Save the downloaded file as openrc.sh.

Make sure the python-novaclient package is installed:

# apt-get install python-novaclient

Source the file that was just downloaded from a terminal window:

# source openrc.sh

It prompts for a password: this is the same password used to log in to the dashboard. It defines the environment variables used by the nova command:

# env | grep OS
OS_TENANT_ID=c776fbcb77374ec7ae4cafb2a6d13402
OS_PASSWORD=mypassword
OS_AUTH_URL=http://os.the.re:5000/v2.0
OS_USERNAME=cedric.pinson
OS_TENANT_NAME=sketchfab
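A quick sanity check can confirm that the variables are defined in the current shell before running nova. The list checked below is an assumption about the minimum the nova client needs (OS_TENANT_ID is left out because OS_TENANT_NAME is also set); check_os_env is a hypothetical helper.

```shell
# Hypothetical helper: report which of the assumed OS_* variables are empty.
check_os_env() {
  missing=0
  for v in OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_TENANT_NAME; do
    # Indirect lookup that works in POSIX sh: read the variable named $v.
    eval "val=\${$v:-}"
    if [ -z "$val" ]; then
      echo "missing: $v" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For instance `check_os_env && nova list` only runs nova once the credentials have been sourced.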

The machine instance can now be created. First choose which operating system to use. TurnKey Linux Core is a Debian GNU/Linux squeeze image adapted to OpenStack and already available in the.re:

# nova image-show core-12.0
+---------------------+--------------------------------------+
| Property            | Value                                |
+---------------------+--------------------------------------+
| created             | 2012-09-17T12:45:12Z                 |
| id                  | c620dd0c-2313-43ae-8e87-47d1457a3cec |
| metadata kernel_id  | c2e89017-f496-497b-b5e3-b22b13e40ad0 |
| metadata ramdisk_id | 3e393bd0-4982-46e9-8c92-6c1609d19221 |
| minDisk             | 0                                    |
| minRam              | 0                                    |
| name                | core-12.0                            |
| progress            | 100                                  |
| status              | ACTIVE                               |
| updated             | 2012-09-17T12:45:19Z                 |
+---------------------+--------------------------------------+

A machine flavor with a large amount of memory was created by the administrator:

# nova flavor-create e.6-cpu.10GB-disk.60GB-ram 10 61440 10 6
+----+----------------------------+-----------+------+-----------+------+-------+-------------+
| ID | Name                       | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor |
+----+----------------------------+-----------+------+-----------+------+-------+-------------+
| 10 | e.6-cpu.10GB-disk.60GB-ram | 61440     | 10   | 0         |      | 6     | 1           |
+----+----------------------------+-----------+------+-----------+------+-------+-------------+

The default RAM quota (50GB) was raised to 128GB:

# keystone tenant-list | grep sketchfab
| e102e816ce6a4b29a028afe00906c87d | sketchfab | True |
# nova-manage project quota --project=e102e816ce6a4b29a028afe00906c87d --key=ram --value=$(expr 128 \* 1024)
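The quota and flavor values are both expressed in MB, which explains why the quota had to be raised: with the default 50GB quota (51200 MB) not even one 61440 MB instance of flavor 10 fits, while 128GB (131072 MB) allows two. The arithmetic, as a small shell sketch using only the values from the commands above:

```shell
# All values in MB, as used by the nova RAM quota and flavor memory.
default_quota_mb=$((50 * 1024))   # default RAM quota: 51200 MB
ram_quota_mb=$((128 * 1024))      # raised quota: 131072 MB, same as $(expr 128 \* 1024)
flavor_ram_mb=61440               # flavor 10 (e.6-cpu.10GB-disk.60GB-ram): 60 * 1024 MB

# Number of flavor-10 instances each quota allows (integer division).
echo "default quota: $((default_quota_mb / flavor_ram_mb)) instance(s)"
echo "raised quota:  $((ram_quota_mb / flavor_ram_mb)) instance(s)"
```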

The ssh public key of the primary tenant user is added with:

# cat > /tmp/cedric.pub
ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAwb...3OObxv84jv9Uw== mornifle@macmornifle
# nova keypair-add cedric --pub_key /tmp/cedric.pub

And finally create the virtual machine:

# nova boot --image c620dd0c-2313-43ae-8e87-47d1457a3cec \
    --flavor 10 --key_name cedric --availability_zone=bm0005 storage --poll

creating a volume

Follow the instructions in the creating a virtual machine section to download the credentials, but this time get the EC2 credentials instead of the OpenStack credentials: the euca-create-volume command is required because it makes it possible to choose where the volume is allocated. Unzip the downloaded .zip file into a directory, set up the environment by sourcing ec2rc.sh from that directory, and create the volume:

# euca-create-volume --zone bm0005 --size 500

After a few seconds:

# euca-describe-volumes
VOLUME vol-00000026 500 bm0005 available 2012-09-19T08:08:47.000Z
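Rather than retrying euca-describe-volumes by hand, a script can poll until the volume is available. This sketch assumes the VOLUME line layout shown above, with the status in the fifth whitespace-separated field (a volume created from a snapshot may have an extra field); volume_status and wait_for_volume are hypothetical helpers, and the number of tries and the 2 second delay are arbitrary.

```shell
# Print the status of volume $1 from `euca-describe-volumes` output on stdin.
volume_status() {
  awk -v vol="$1" '$1 == "VOLUME" && $2 == vol { print $5 }'
}

# Poll until volume $1 is available; give up after $2 tries (default 30).
wait_for_volume() {
  vol="$1"
  tries="${2:-30}"
  while [ "$tries" -gt 0 ]; do
    status=$(euca-describe-volumes "$vol" | volume_status "$vol")
    [ "$status" = available ] && return 0
    tries=$((tries - 1))
    sleep 2
  done
  return 1
}
```

For example `wait_for_volume vol-00000026 && echo ready`.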

The volume also shows up with the OpenStack commands:

# nova volume-list
+----+-----------+--------------+------+-------------+-------------+
| ID | Status    | Display Name | Size | Volume Type | Attached to |
+----+-----------+--------------+------+-------------+-------------+
| 38 | available | None         | 500  | None        |             |
+----+-----------+--------------+------+-------------+-------------+

The volume is attached to the machine with:

root@core ~# nova volume-list
+----+-----------+--------------+------+-------------+-------------+
| ID | Status    | Display Name | Size | Volume Type | Attached to |
+----+-----------+--------------+------+-------------+-------------+
| 38 | available | None         | 500  | None        |             |
+----+-----------+--------------+------+-------------+-------------+
root@core ~# nova list
+--------------------------------------+---------+--------+----------------+
| ID                                   | Name    | Status | Networks       |
+--------------------------------------+---------+--------+----------------+
| 50b8a9ac-58a5-4743-a763-47e8e1f4901a | control | ACTIVE | one=10.145.1.5 |
| f67c2eb9-9863-44c0-addf-1338233d7b4c | storage | ACTIVE | one=10.145.1.3 |
+--------------------------------------+---------+--------+----------------+
root@core ~# nova volume-attach f67c2eb9-9863-44c0-addf-1338233d7b4c 38 /dev/vdb
root@core ~# nova volume-list
+----+--------+--------------+------+-------------+--------------------------------------+
| ID | Status | Display Name | Size | Volume Type | Attached to                          |
+----+--------+--------------+------+-------------+--------------------------------------+
| 38 | in-use | None         | 500  | None        | f67c2eb9-9863-44c0-addf-1338233d7b4c |
+----+--------+--------------+------+-------------+--------------------------------------+
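Copying the instance ID by hand is error prone; it can instead be looked up by name in the nova list table. This is a sketch assuming the table layout shown above and instance names without spaces; instance_id is a hypothetical helper reading the table on stdin.

```shell
# Print the ID of the instance named $1 from `nova list` output on stdin.
instance_id() {
  awk -F'|' -v name="$1" '
    {
      id = $2; n = $3
      # Strip the padding spaces around the cell values.
      gsub(/ /, "", id); gsub(/ /, "", n)
      if (n == name) print id
    }
  '
}
```

With it, the attach step above becomes `nova volume-attach "$(nova list | instance_id storage)" 38 /dev/vdb`.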

It then shows up from within the storage virtual machine of the sketchfab tenant as follows:

# fdisk /dev/vdb
Command (m for help): p
Disk /dev/vdb: 536.9 GB, 536870912000 bytes
16 heads, 63 sectors/track, 1040253 cylinders
Units = cylinders of 1008 * 512 = 516096 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0xfa80a0e6
    Device Boot      Start         End      Blocks   Id  System
Command (m for help):
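The size reported by fdisk matches the 500 requested with euca-create-volume once units are taken into account: 536870912000 bytes is exactly 500 GiB (500 × 1024³), and fdisk prints it in decimal gigabytes as 536.9 GB. The arithmetic, as a small shell sketch reproducing the fdisk line:

```shell
# Size in bytes of 500 GiB; equal to the byte count reported by fdisk above.
bytes=$((500 * 1024 * 1024 * 1024))
# fdisk shows decimal GB (10^9 bytes) with one decimal: round to tenths.
tenths=$(( (bytes + 50000000) / 100000000 ))
printf 'Disk /dev/vdb: %d.%d GB, %s bytes\n' $((tenths / 10)) $((tenths % 10)) "$bytes"
```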

Hello Loic,

This is a great article that gives really comprehensive coverage of the steps required. Thanks a million for taking the time to share such useful information. I suspect this guide may not be for OpenStack Grizzly or Havana. So, any chance of an update?

Also, be aware that where a Hetzner server has been pre-equipped with software RAID drives, installimage does not always manage their removal well and without incident.

The primary concern is that the removal of mdadm by installimage is not complete: installimage only prevents the mdadm services and RAID volumes from coming up, it does not destroy the RAID volumes. So we are left with mdadm RAID volumes in a degraded state. Now, there is a default mdadm configuration that stops the system from booting if a RAID volume is degraded, and this makes the system drop into an initramfs shell during the next boot.

To guard against this possibility, at a minimum, it is probably advisable to disable the above mdadm behaviour before starting with your procedure suggested here. This can be done via ‘dpkg-reconfigure mdadm’ ..

So, perhaps the guide could be amended to reflect this. Perhaps, with your guidance, I can attempt to update the guide in a new post, using openstack grizzly and perhaps ubuntu 12.04 as examples ..

Thanks again for an excellent guide .. there is nothing similar out there on the web ..

Hi Chux,

This guide is indeed for OpenStack Essex and is outdated. I will update it to add a warning so people read your comment before proceeding. The drawback of blog posts is their planned obsolescence 🙂

Cheers

Planned obsolescence indeed 🙂 .. But that can also be seen as an archival or historical feature ..